That's my expectation as well. I think we'll see 30% of the raster performance of a 3080 with RT on when going head to head without DLSS. I think AMD's answer to DLSS will need to get here quickly for them to compete, though.

As far as I'm aware, AMD's solution for RT is a little better than Turing but behind Ampere. At the very minimum, a 6800 XT would perform slightly above a 2080 Ti with RT turned on. Then if you factor in that the 6800 XT is more powerful at rasterization than the 2080 Ti, that lead should increase a little as well. No idea where you are getting 30% of the performance of a 3080 from, when the 2080 Ti is already way higher than that.

While I think the 3080 is going to be ahead of the 6800 XT in RT performance when all is said and done, this will be mostly noticeable in fully path traced games such as Minecraft and Quake. I don't think the difference in performance is going to be anywhere near as massive as some people believe. I mean, already in hybrid rendering scenarios (99% of games) Ampere does not pull massively ahead of Turing in performance.

We have recently seen a leaked benchmark for Shadow of the Tomb Raider with RT for the 6800 (non-XT) at both 4K and 1440p. This benchmark shows the card performing quite admirably, beating out a 2080 Ti and a 3070 with ray tracing turned on. Of course this could have been faked, or it could be an outlier for whatever reason and not represent performance across the board in multiple titles, so wait for real benchmarks.

But if this does represent the general performance level, then that is pretty impressive and would indicate that in real-world hybrid rendering scenarios the performance of the AMD cards might be quite close to the 3000 series cards. Maybe a few FPS difference, nothing monumental the way people are currently making out.

If the 6800 benchmark is real and representative of general RT performance, then what is especially impressive is that it got this performance on DXR 1.0, which was designed as a collaboration between MS and Nvidia and built around Nvidia's RT solution. AMD worked with MS on DXR 1.1, which is optimized for AMD's solution. So any RTX games out currently are optimized for Nvidia hardware/DXR 1.0.

Not saying this will necessarily make a difference in the end, as we haven't seen any DXR 1.1 games yet, and I don't know what kind of performance "loss" AMD has with DXR 1.0 compared to any hypothetical "gain" they might have with DXR 1.1. It's just something worth taking into account.

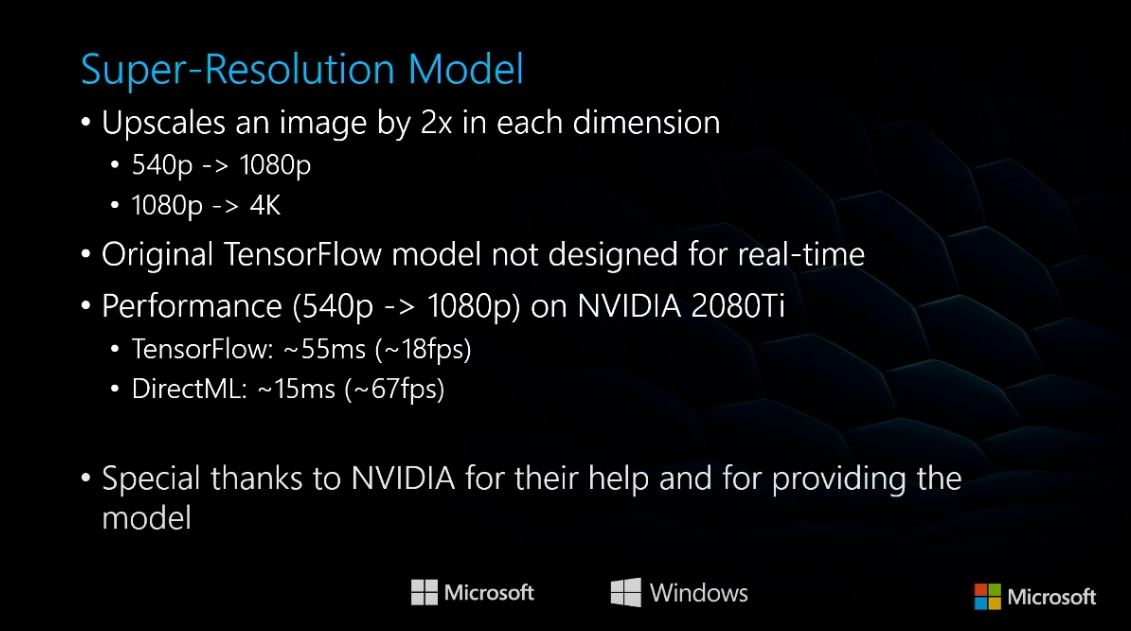

Of course all of this is without taking DLSS into account. When you turn that on, the Nvidia cards obviously pull ahead. AMD have mentioned we should hear more about Super Resolution soon, presumably before the cards launch. I've heard rumours it might launch in Dec/Jan in a driver update. Right now we don't really know much about it, so we will have to wait and see what happens. But for those shouting from the rooftops about DLSS being the be-all and end-all feature and that AMD need to have a similar solution, it looks like they will have one shortly.