Shmunter

Member

And this:

Anyone still latching on to "well, muh cpu can handle that" is living in a dreamland.

Yeah probably repeating what’s already been covered here…

Even if the most powerful CPUs could do it in real time, in parallel with running game code and gfx draw calls, the data first needs to be loaded into RAM, decompressed back into RAM, and then copied into VRAM. Highly inefficient.

On PS5 you just request it, and the asset lands in RAM decompressed and ready to use: super fast, low latency, and at no cost in compute resources.

Software decompression as a whole served its purpose when the data rate was limited by HDD speed. Once you open the tap to NVMe speeds, the decompression workload climbs right along with the data rate and can't be managed during gameplay for streaming.
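Rough back-of-envelope sketch of that scaling, if it helps. The numbers are made up for illustration (not benchmarks), and it assumes decompression cost is simply proportional to how fast compressed data arrives; `cores_needed` and `PER_CORE_GB_S` are just names I picked.

```python
import math


def cores_needed(compressed_gb_per_s: float, per_core_gb_per_s: float) -> int:
    """Cores kept fully busy decompressing a stream arriving at the given rate.

    Assumes cost is proportional to input rate and splits evenly across cores.
    """
    return math.ceil(compressed_gb_per_s / per_core_gb_per_s)


# Hypothetical figures, for illustration only.
PER_CORE_GB_S = 1.0  # assumed compressed input one core can decompress per second

for label, rate in [("HDD-class", 0.1), ("NVMe-class", 5.0)]:
    print(f"{label}: {rate} GB/s in -> {cores_needed(rate, PER_CORE_GB_S)} core(s) busy")
```

With those assumed rates the HDD case fits inside a single core with room to spare, while the NVMe case ties up several cores before any game code runs. Swap in real measurements and the picture shifts, but the shape of the problem is the same.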

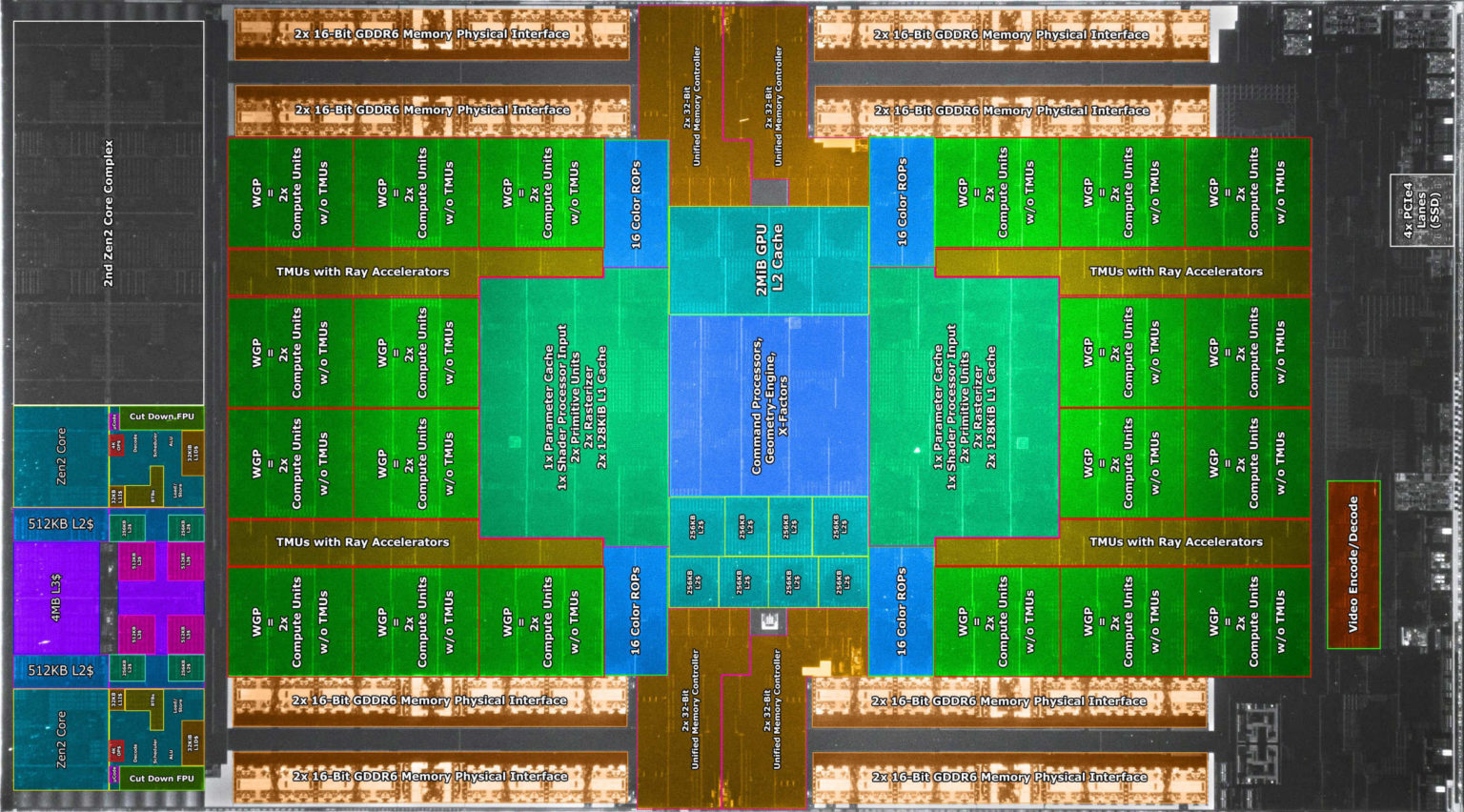

It’s why gfx card manufacturers are proclaiming they’ll do it on the GPU, to get around the CPU work and the double handling. Will be curious to see if it’s successful or just lip service.