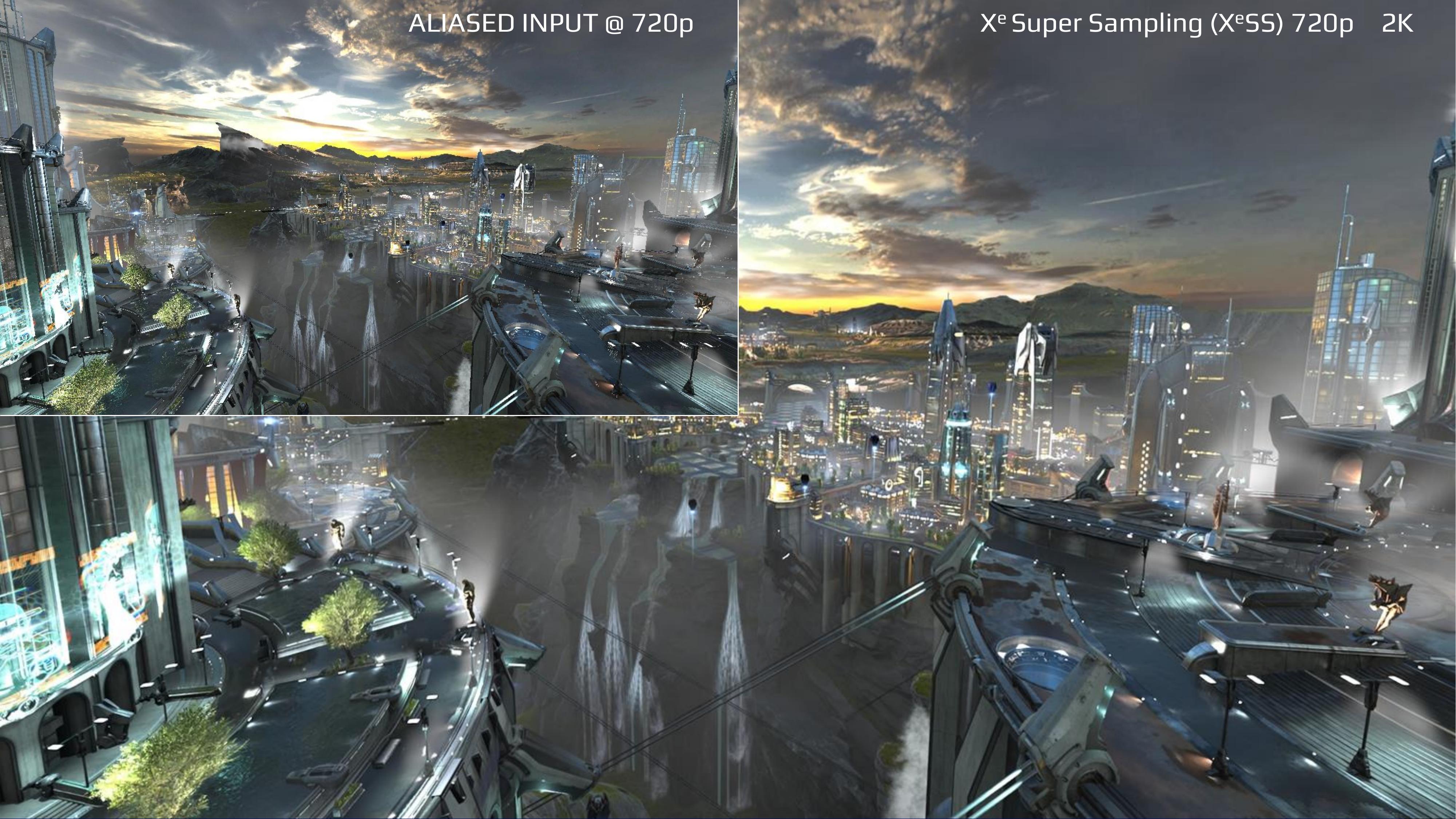

Intel software engineers behind XeSS say that upscaling and anti-aliasing should be treated as a single problem. Still, temporal super sampling may not always be a developer's first choice. Older games and older GPUs may see better results and quicker implementation with spatial upscalers, while games that use ray tracing and other graphics-intensive technologies on the latest GPU hardware are likely to see better performance and visuals with temporal upscalers.

Intel intends to support all GPU vendors with XeSS. The company reaffirms that all GPUs with support for Shader Model 6.4+ and DP4a instructions will support the technology. Still, XeSS will work best on Intel's own Arc GPUs and other graphics architectures with Xe Matrix Extensions (XMX) acceleration, for which a special version of XeSS will be available. Game developers should not worry, though: XeSS will have a single API, and the choice between the XMX and DP4a models will be managed internally.
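The path selection described above can be pictured with a small sketch. This is not the XeSS SDK: the `GpuCaps` record, the `select_xess_path` function, and the returned labels are all hypothetical names chosen for illustration, and the ordering of the checks (XMX first, then DP4a) is an assumption based only on the requirements stated in this article.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GpuCaps:
    """Hypothetical capability record; not part of any real SDK."""
    shader_model: Tuple[int, int]  # (major, minor), e.g. (6, 4)
    has_dp4a: bool                 # DP4a instruction support
    has_xmx: bool                  # XMX matrix acceleration


def select_xess_path(caps: GpuCaps) -> str:
    """Pick a model variant the way a single API might do it internally.

    Assumption: XMX hardware gets the specialized version, any other GPU
    with Shader Model 6.4+ and DP4a gets the generic version, and anything
    else is reported as unsupported.
    """
    if caps.has_xmx:
        return "xmx"
    if caps.shader_model >= (6, 4) and caps.has_dp4a:
        return "dp4a"
    return "unsupported"


if __name__ == "__main__":
    print(select_xess_path(GpuCaps((6, 6), has_dp4a=True, has_xmx=True)))
    print(select_xess_path(GpuCaps((6, 4), has_dp4a=True, has_xmx=False)))
    print(select_xess_path(GpuCaps((6, 2), has_dp4a=True, has_xmx=False)))
```

The point of the sketch is that the game only ever calls one entry point; which model runs is a decision made behind it.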

XeSS might not be available yet, but the company is already working on overcoming the most common issues with temporal upscalers, such as ghosting and blurring. To address them, Intel is introducing its own algorithms that will either eliminate these effects or reduce them to a minimum.

Unlike AMD FSR 2.0 and NVIDIA DLSS 2.3, Intel XeSS will feature five image quality modes, including an Ultra Quality mode with a 1.3X scaling factor. Neither DLSS nor FSR currently offers this scaling factor, although there have been rumors that they might add it at some point in the future.
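To put the 1.3X figure in perspective, here is a minimal sketch of the internal render resolution it implies. It assumes the factor applies to each axis and that the result is rounded to the nearest pixel; the `render_resolution` helper is a made-up name for illustration, not an XeSS function.

```python
from typing import Tuple


def render_resolution(out_w: int, out_h: int, scale: float) -> Tuple[int, int]:
    """Internal resolution for a given output size and per-axis scale factor.

    Assumption: the scale factor divides width and height independently
    and the result is rounded to the nearest whole pixel.
    """
    return round(out_w / scale), round(out_h / scale)


if __name__ == "__main__":
    print(render_resolution(2560, 1440, 1.3))  # (1969, 1108)
    print(render_resolution(3840, 2160, 1.3))  # (2954, 1662)
```

Under these assumptions, a 4K output in Ultra Quality mode would be rendered at roughly 2954x1662, which is about 59% of the output pixel count.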

Intel claims that its technology can achieve higher scaling ratios than other temporal and spatial upscaling techniques. The Ultra Quality mode will improve performance by 21% at 1440p and 27% at 4K, while Ultra Performance will offer 97% to 153% higher framerates. Those numbers are based on the Rens demo running on an Arc Alchemist GPU at a fixed (undisclosed) frequency.

Intel has not yet announced a launch date for XeSS or a full list of games that will support it. We might learn more soon, though, at the official introduction of Arc GPUs on March 30th.