You are giving too much credit to those e-cores.

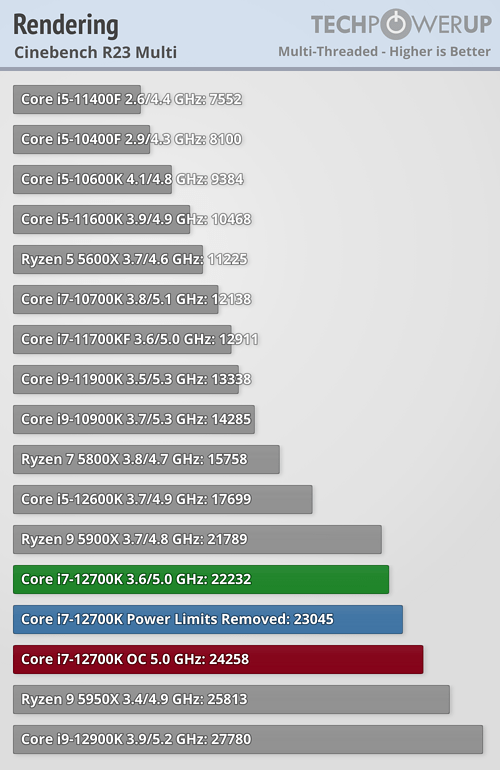

Look at the 12700K. It has only 4 fewer e-cores and lower clocks than the 12900K. With an OC, it gets closer to the 12900K.

That's a difference of 3500 points for 4 e-cores. So the 8 e-cores on the 12900K are worth 7000 points in total, or about 880 points per e-core.

That leaves 20780 points for the 8 P-cores, or about 2600 points per P-core.
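
For anyone who wants to check the arithmetic, here is a rough sketch of it in Python. The constants are just the figures quoted in this post; the names are made up for illustration. It also assumes the whole 12700K vs 12900K gap comes from the 4 extra e-cores, which ignores the clock difference, so treat the per-core numbers as ballpark estimates.

```python
# Figures quoted in the post (Cinebench multi-core points).
# All names here are hypothetical, chosen only for this sketch.
SCORE_GAP = 3500        # 12900K score minus 12700K score
EXTRA_E_CORES = 4       # the 12900K has 4 more e-cores than the 12700K
E_CORES_12900K = 8
P_CORES_12900K = 8
P_CORE_TOTAL = 20780    # points the post attributes to the 8 P-cores


def per_core_estimate():
    """Split the score into per-core contributions.

    Assumption: the entire score gap is due to the extra e-cores
    (the 12700K's lower clocks are ignored).
    """
    per_e_core = SCORE_GAP / EXTRA_E_CORES
    e_core_total = per_e_core * E_CORES_12900K
    per_p_core = P_CORE_TOTAL / P_CORES_12900K
    return {
        "per_e_core": per_e_core,
        "e_core_total": e_core_total,
        "per_p_core": per_p_core,
        "p_to_e_ratio": per_p_core / per_e_core,
    }


if __name__ == "__main__":
    for name, value in per_core_estimate().items():
        print(f"{name}: {value:.2f}")
```

By this estimate one P-core is worth roughly three e-cores in this benchmark.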

Those e-cores are pathetic. Intel should just add 2 or 4 more P-cores instead.

The real reason Alder Lake beats Zen 3 is that it was released a year later, and that Zen 3 has a 5-wide execution pipeline while Alder Lake has a 6-wide one.

And since Cinebench scales really well with pipeline width, Alder Lake gets a nice edge.