Tickle My Tendies

Member

Experience the Action RPG, Nioh 2 - The Complete Edition, enhanced with NVIDIA DLSS for the ultimate boost in performance. Available now.

Apparently the DLSS update is available now?

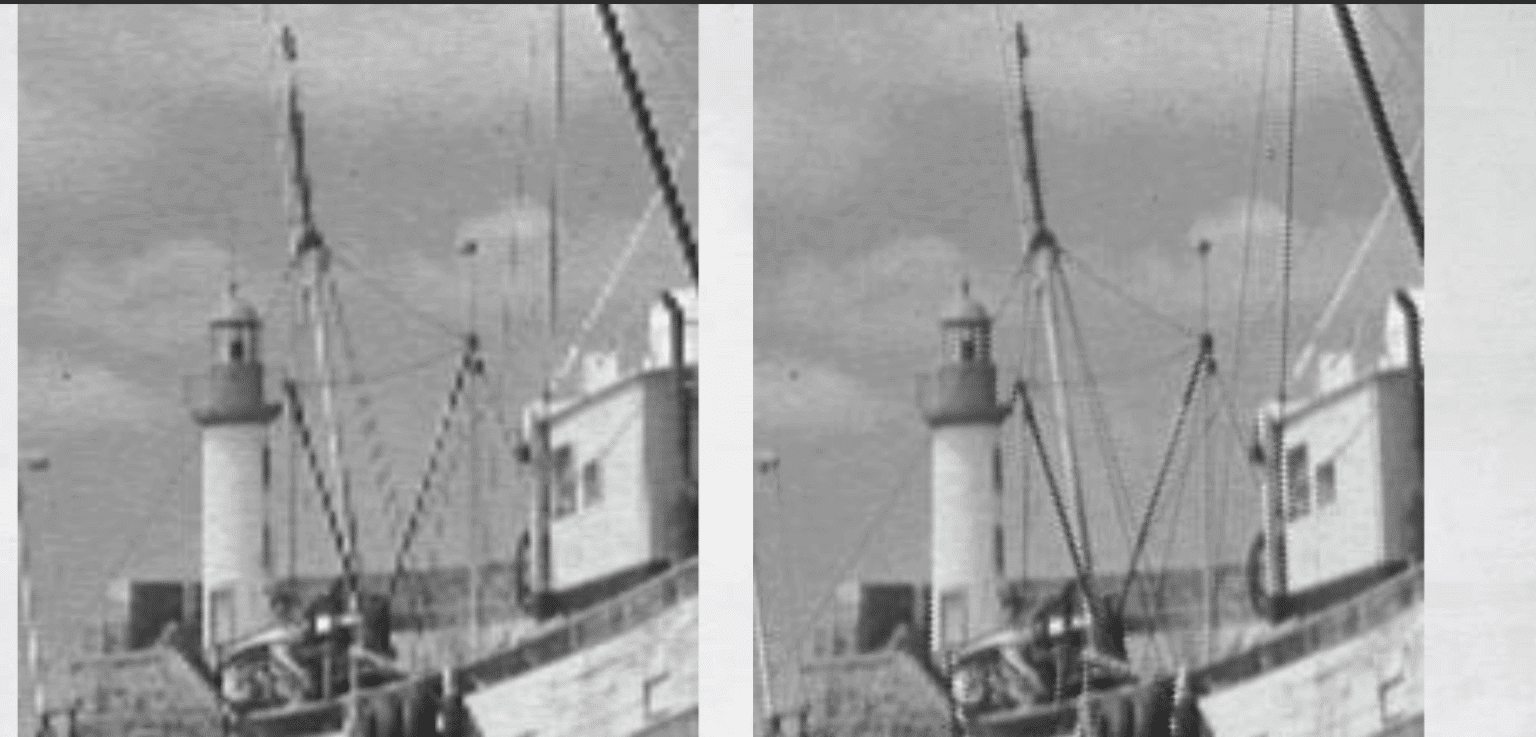

Screen grabs from the video (basically a 30 FPS boost, give or take)

---------------------------

Found a more in-depth native vs. DLSS comparison for the game. The video is available at up to 4K/60 and is good quality, so we can judge for ourselves. To me, DLSS looks fantastic. I own the game on PS5, so I'm not gonna double dip just to check it on my 3080. There are other comparisons out there too if people want more examples; I won't flood the thread further.

Many people on Reddit are saying the game looks better in some areas with DLSS on because of its better anti-aliasing (I guess the PC version has shoddy AA without DLSS?). Yes, the source is Reddit, so YMMV. Just sayin'. Here is one thread, but many more are out there.
