winjer

Gold Member

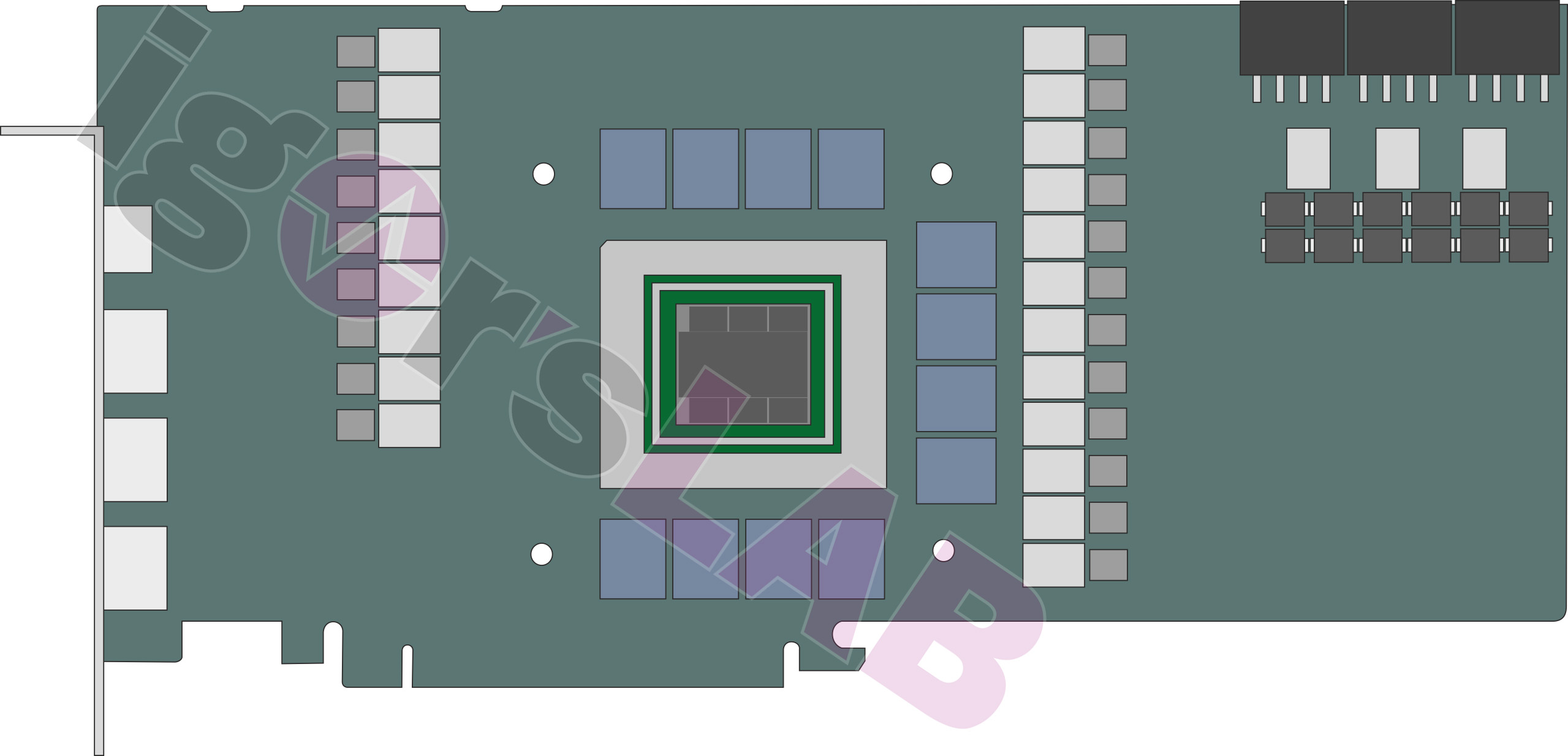

AMD Radeon RX 7000 RDNA 3 GPUs Confirmed For Launch on 3rd November

AMD has just confirmed the launch of its next-generation RDNA 3 GPU-powered Radeon RX 7000 graphics cards on the 3rd of November.

> Eagerly awaiting this for my new PC build.

The 40 Series press conference is in 2 hours. We'll see shortly!

When is Nvidia’s supposed to be?

> The 40 Series press conference is in 2 hours. We'll see shortly!

What a day to reveal this launch, 2 hours before the Nvidia show.

And... it's showtime, folks! Techmas has come! I am really curious to see what AMD has been able to do with the MCM design. Hoping the raytracing is on par with Nvidia this go around. Exciting times, folks!

> What a day to reveal this launch, 2 hours before the Nvidia show.

Exactly. Killing the Nvidia hype… sadly they are doing it wrong lol.

Love this version of AMD

> Sort out your RT performance, AMD, and I'll at least then consider your cards... but not until.

My guess is it’ll be in between Ampere and Lovelace.

> 100 tflops is so close I can feel it.
>
> That said, I hope they invest in machine learning and dedicated RT cores rather than just pushing standard rasterization performance.

Slimy “100 Teraflops” Snake.

> Will be interesting to see what they bring to the table this time.

More VRAM lol

> Exactly. Killing the Nvidia hype… sadly they are doing it wrong lol.
>
> If you really wanted to kill the hype, you release a figure that beats the 4090 or is 2x more powerful than the 3090 Ti, etc.

for 99$ and with just 23W power draw, including free lifetime subscriptions of GP, PS+, Prime, Stadia, Netflix, Disney+, HBO, Spotify and daily foot massages from Lisa, Phil and Jimbo.

> The 4090 has a power draw similar to the 3090 but doubles its performance. So effectively a performance-per-watt jump of 100 percent? I guess we'll have to see whether those benchmarks were done at 600 W or 450 W.
>
> Either way, only a 50 percent performance-per-watt bump for AMD likely won't suffice. They will increase the power too. If they go up 100 W relative to the 6900 XT, then that will give them 2x (1.5 from the node, times 1.33 from the power increase), which will be competitive with Nvidia.
>
> Question is how good raytracing performance will be. I don't expect they will forgo the opportunity to price their cards as obnoxiously as Nvidia. Likely slightly cheaper. They know Nvidia can cut prices, so AMD will likely just follow suit and ride the high margins rather than gain market share.

I don't think they have an answer for the 4090; they seem to be just letting Nvidia totally have its own way with its launch. You would think that if they had something to run against it, you would have had some leaks or tweets and stuff, like they do with CPUs. The Nov launch means they might have something to deal with the 4080 16GB, seeing as that's supposed to launch that month. Only another few weeks to find out which way I'm going anyway.
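The 2x figure in the estimate above is simple arithmetic, and a rough sketch makes the assumptions visible. Note what is assumed here rather than confirmed: a 300 W baseline board power for the 6900 XT (which is what the post's 1.33 factor implies), and performance scaling linearly with power at a fixed perf/watt, which real GPUs only approximate. The function name `projected_speedup` is made up for illustration.

```python
# Back-of-the-envelope check of the scaling estimate in the post above.
# Assumptions (not AMD figures): 300 W baseline board power for the 6900 XT,
# and performance scaling linearly with power at a given perf/watt.

def projected_speedup(perf_per_watt_gain: float, base_watts: float, new_watts: float) -> float:
    """Relative performance = perf/watt gain multiplied by the power ratio."""
    return perf_per_watt_gain * (new_watts / base_watts)

BASE_WATTS = 300.0  # assumed 6900 XT board power

# 1.5x perf/watt from the node, plus 100 W of extra power budget
speedup = projected_speedup(1.5, BASE_WATTS, BASE_WATTS + 100.0)
print(f"Projected speedup over the 6900 XT: {speedup:.2f}x")
```

Under the same simplification, the 4090 claim (same power, double the performance) is just `projected_speedup(2.0, 450.0, 450.0)`, so both estimates land at roughly 2x and the comparison hinges on whether the linear power scaling actually holds.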

Not sure AMD is going to be much better than NV in the power department. This seems to suggest they are going to have similar power requirements.

Enermax PSU calculator already mentions unreleased Radeon RX 7000XT and GeForce RTX 40 GPUs - VideoCardz.com

Radeon RX 7950XT, 7900XT, 7800XT and 7700XT confirmed by Enermax? The power supply maker now has recommendations for next-gen GPU series. It is unclear if Enermax has confidential information on Radeon RX 7000 series or GeForce RTX 40 series, but it is a fact that as many as eight new graphics...

videocardz.com

> If they launch on Nov 3, does it mean that the presentation is some days before that? Or do they just present and launch on the same day?

November 3rd is the presentation, and the launch will probably be a few weeks beyond that.

> Are any of the RDNA3 features gonna pop up on PS5 and Xbox Series consoles? I know the chipset will be different, but I wonder if some features will find their way over.

Maaaybe PS5 Pro?

> RDNA3 needs to pull off some magic here. Obi-Wan isn't our only hope, to prevent the Dark Lord Nvidi-sidius from prevailing!

In pure raster AMD was already matching Nvidia; in price/performance they were beating Nvidia.

> In pure raster AMD was already matching Nvidia; in price/performance they were beating Nvidia.
>
> Nvidia wins in DLSS, DLAA, Raytracing, Freestyle and Mindshare.
>
> AMD needs to steal mindshare this generation with good RT, hopefully some ML hardware and a damn good price.
>
> Their Ryzen moment hasn't happened in the GPU space just yet... we are still waiting for it.
>
> Unfortunately I'm locked into the CUDA/OptiX workspace so I can't really support AMD directly, but for any friends who need a machine built for them, assuming the prices are reasonable, I will probably be recommending Ampere and/or RDNA3.

Their Ryzen moment happened when Nvidia went to Samsung this gen, and they failed to take advantage of it. It gave them a massive frequency advantage, which they lose this time because Nvidia was not going to do an Intel and keep making the same mistake for multiple generations. I'm waiting for the announcements personally because I will buy AMD myself if they live up to expectations, but the more info that comes out, the more it seems they are not going to change the landscape much.

> Their Ryzen moment happened when Nvidia went to Samsung this gen, and they failed to take advantage of it. It gave them a massive frequency advantage, which they lose this time because Nvidia was not going to do an Intel and keep making the same mistake for multiple generations. I'm waiting for the announcements personally because I will buy AMD myself if they live up to expectations, but the more info that comes out, the more it seems they are not going to change the landscape much.

AMD got the frequency advantage going from RDNA 1 to RDNA 2 on 7nm. Now Nvidia is merely catching up to RDNA 2 despite having the full process advantage. It remains to be seen what kind of clocks RDNA 3 can hit.

> RDNA3 needs to pull off some magic here. Obi-Wan isn't our only hope, to prevent the Dark Lord Nvidi-sidius from prevailing!

Depends. If you care about BS like DLSS and RT, you will keep going Nvidia. If you care about raster and price, AMD will easily deliver.

> They didn’t “kill” Ampere at rasterization with RDNA 2. It was on par or even worse. The 3080 had an overall +3% avg as soon as you went beyond 1080p.
>
> Which is massively disappointing considering the sacrifices they made to their RT and ML to actually have better rasterization performance than the competition. It should have been much better.

I think this is not the case anymore at 1080p/1440p. AMD cards are beating their equivalents (price-wise) quite handily. It changes when it comes to 4K though.