TheRedRiders


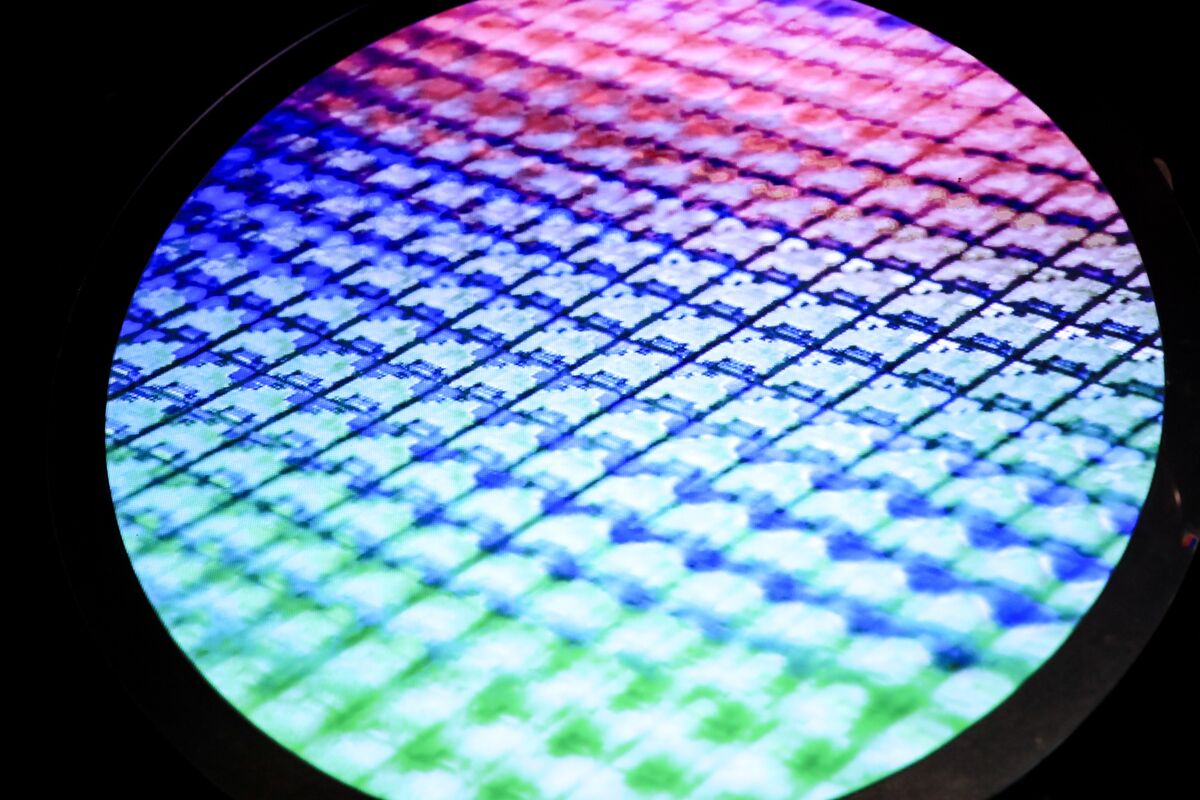

AMD has confirmed that RDNA 3 will offer more than 50% better performance per watt than RDNA 2.

Confirmed to be chiplet-based and built on a 5nm process.

Rearchitected compute unit.

Offers an “optimised graphics pipeline” and “Next-Generation Infinity Cache”.

RDNA 4 confirmed to land in 2024.

EDIT: My first-ever thread, so go easy on me, folks.
