64bitmodels

Reverse groomer.

The leaked AD106 GPU from Nvidia (assumed to be a 4060) suggests performance similar to a 3070 Ti, which is not a very good deal to begin with considering the gap between the 3090 and 4090. But the thing that really cuckles my fuckles is the rumored VRAM of 8 GB. In 2023.

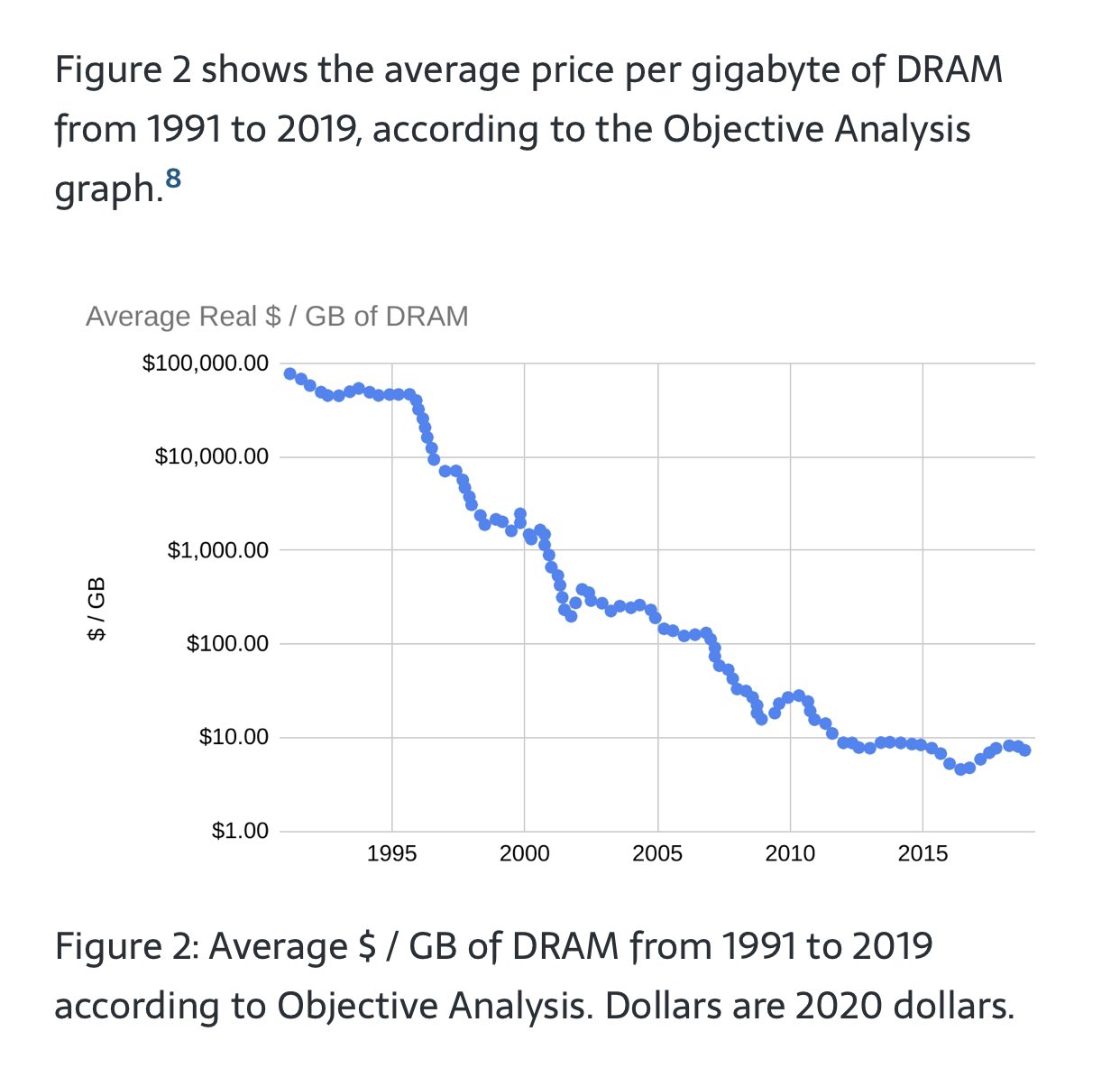

I don't get this shit. It feels like this card should be 12 GB at the very least, considering the system requirements of many modern games. The 3060 was already 12 GB, so why not just extend that to the Ti version? Why are we regressing again here? Is VRAM not cheap or something? I felt like this would be the generation where we truly jump up in VRAM and leave the days of single-digit VRAM behind us, yet everything below the 70 Ti is 8 GB.

Why can't we live in a world where the 4060 Ti is 12 GB?
