Ascend

Member

Just wanted to add... Even though I did not make the statement that with the SSD you can load data mid-frame from the GPU... There's this;

There are loads of things for us to still explore in the new hardware but I’m most intrigued to see what we can do with the new SSD drive and the hardware decompression capabilities in the Xbox Velocity Architecture.

The drive is so fast that I can load data mid-frame, use it, consume it, unload and replace it with something else in the middle of a frame, treating GPU memory like a virtual disk. How much texture data can I now load?

I’m looking forward to pushing this as far as I can to see what kind of beautiful experiences we can deliver.

news.xbox.com

news.xbox.com

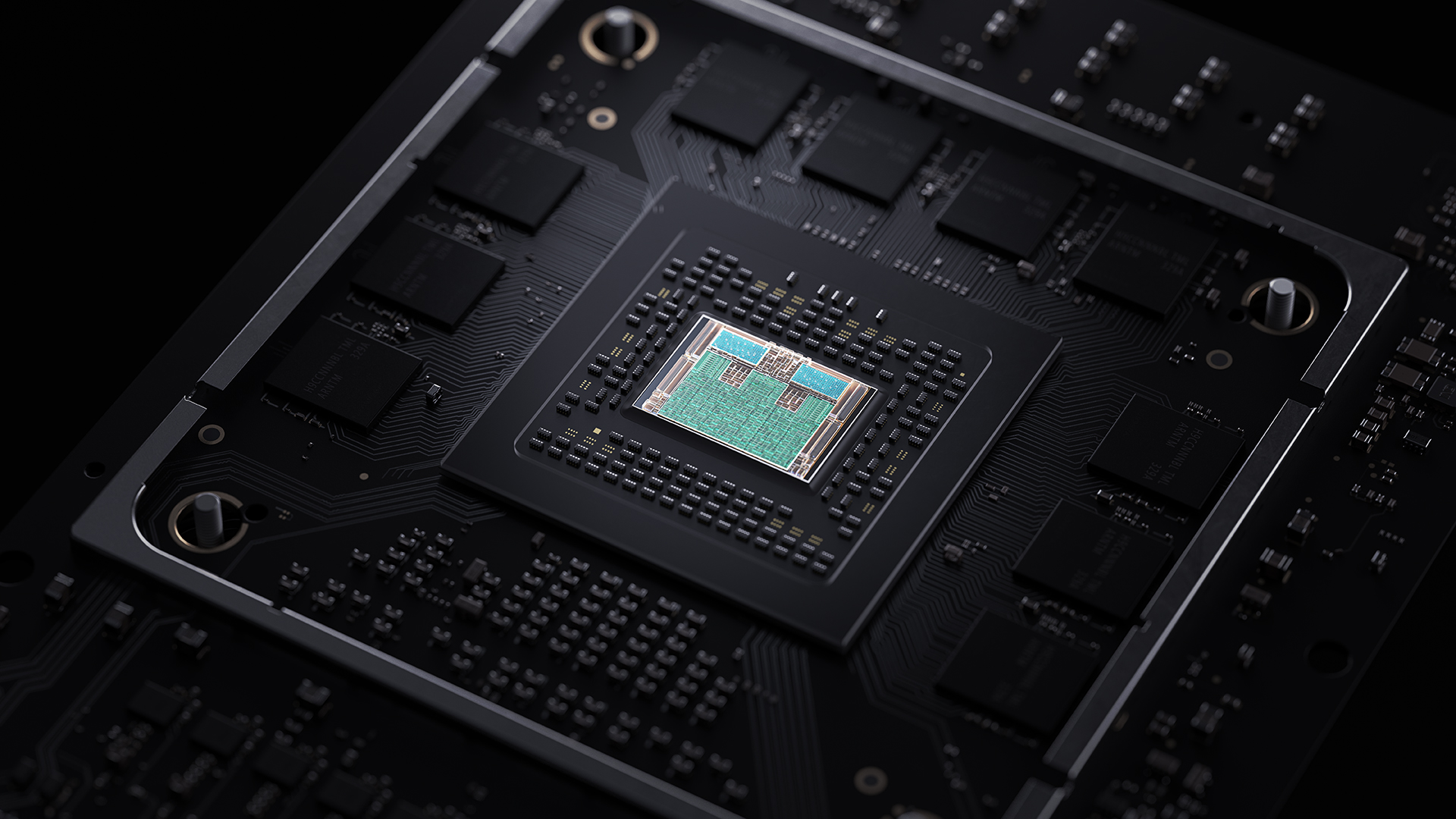

There are loads of things for us to still explore in the new hardware but I’m most intrigued to see what we can do with the new SSD drive and the hardware decompression capabilities in the Xbox Velocity Architecture.

The drive is so fast that I can load data mid-frame, use it, consume it, unload and replace it with something else in the middle of a frame, treating GPU memory like a virtual disk. How much texture data can I now load?

I’m looking forward to pushing this as far as I can to see what kind of beautiful experiences we can deliver.

Inside Xbox Series X Optimized: Dirt 5 - Xbox Wire

When it launches this holiday, Xbox Series X will be the most powerful console the world has ever seen. One of the biggest benefits of all that power is giving developers the ability to make games that are Xbox Series X Optimized. This means that they’ve taken full advantage of the unique...

news.xbox.com

news.xbox.com