> The PS4 Spider-Man demo load was 8.10 seconds on a PS4 (I'm sure that was NOT the whole game).
>
> The optimized demo loaded in 0.8 seconds.
>
> The State of Decay demo was a 45-second full game load.
>
> That same game with no optimization loaded in 6.5 seconds. Sure, an optimized load would be less... if an optimized load of the same game approached 4.5 seconds (optimization reducing load by 2 seconds), then the ratio is virtually the same.

I'd love to hear what an optimised load is in this regard, as it falls back to loading what is visible. A thing people say already happens on all old Xbox One games/engines.
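For anyone who wants to check the ratio claim, here is a quick sketch that turns the load times quoted above into speedup factors. The function name is mine, and the 4.5-second figure is the hypothetical from the post, not a measured result.

```python
def speedup(before_s: float, after_s: float) -> float:
    """Return how many times faster the second load is than the first."""
    return before_s / after_s


# Figures as quoted in the thread.
spiderman = speedup(8.10, 0.8)          # PS4 demo vs. the optimized demo
sod2_unoptimized = speedup(45.0, 6.5)   # State of Decay 2, no optimization
sod2_hypothetical = speedup(45.0, 4.5)  # hypothetical optimized load from the post

print(f"Spider-Man demo:          {spiderman:.1f}x")
print(f"State of Decay 2 (as-is): {sod2_unoptimized:.1f}x")
print(f"State of Decay 2 (4.5 s): {sod2_hypothetical:.1f}x")
```

So the unoptimized State of Decay 2 load is roughly a 7x improvement, and it would take the hypothetical 4.5-second load to match the roughly 10x of the Spider-Man demo.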

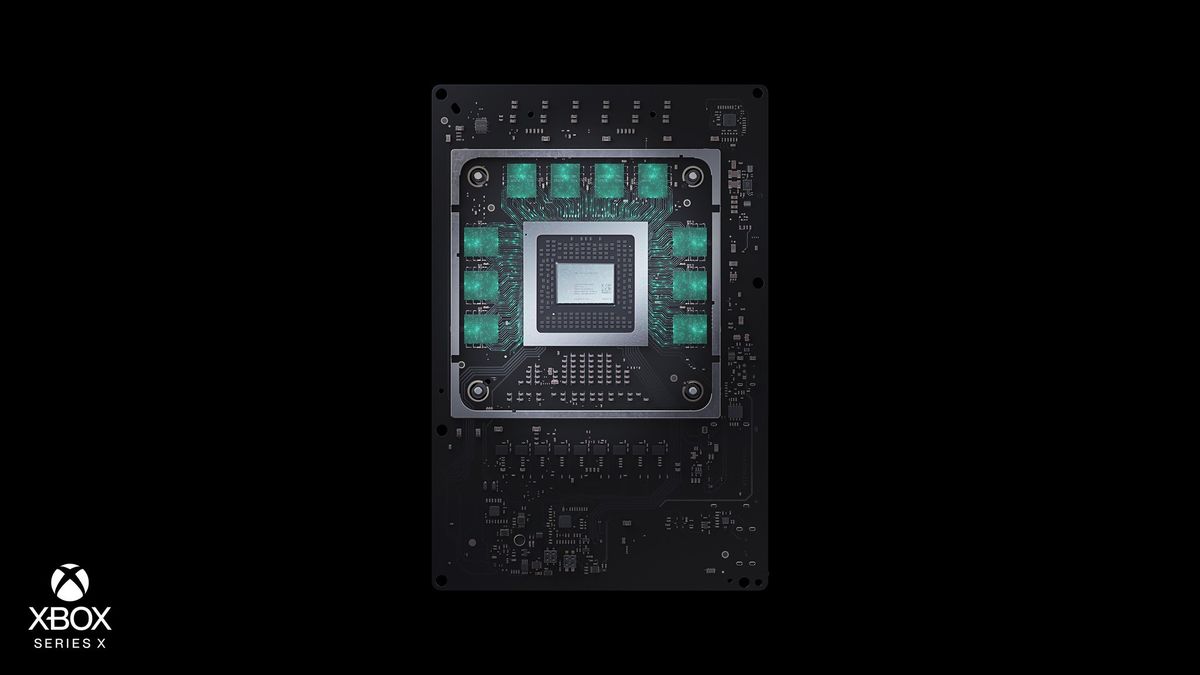

Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block

- Thread starter Bernkastel

- Start date

- Platform

Ascend

Member

> I'd love to hear what an optimised load is in this regard as it falls back to loading what is visible. A thing people say already happens on all old xbox one games/engines.

Which is a blatant lie. And even IF it was true (which it's not), the advertised 2x-3x effective bandwidth increase was in comparison to the Xbox One X, so... The improvement is still there regardless. But it gets dismissed as 'secret sauce' or 'magic' or whatever other dismissive term is available.

As for what an optimized load is, it's quite simple. It means it's optimized for what you're actually using. Currently, the games are optimized for an HDD, but not for an SSD. By default it makes it inefficient for the SSD. Any game that is BC and is dropped into the XSX, even though it will work and load a lot faster, is far from the best that the console can achieve.

You're still loading everything from the perspective of HDD technology, which means you're loading as if you have seek times, as if you need to use duplicate data on disc, and as if you're limited to double digit MB/s. You will have unnecessary CPU overhead, unnecessary idle time and you most likely even have duplicate readings that are not necessary, reducing the SSD performance unnecessarily.

And above all that, in the case of loading an untouched Xbox One game on the XSX, you're not taking advantage of the hardware decompression block, the newer decompression techniques, or the lower overhead API.
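To put rough numbers on the seek-time point, here is a toy model of read time as per-request latency plus transfer time. Every figure in it is an illustrative assumption (10 ms HDD seek, 80 MB/s HDD throughput, 0.1 ms SSD latency, 2400 MB/s SSD throughput, 2000 MB spread over 5000 requests), not a measurement from either console.

```python
def read_time_s(total_mb: float, n_requests: int,
                latency_ms: float, throughput_mb_s: float) -> float:
    """Estimate read time as (per-request latency) + (bytes / throughput)."""
    return n_requests * latency_ms / 1000 + total_mb / throughput_mb_s


# Hypothetical workload: 2000 MB of assets fetched in 5000 separate requests.
TOTAL_MB = 2000
REQUESTS = 5000

hdd = read_time_s(TOTAL_MB, REQUESTS, latency_ms=10, throughput_mb_s=80)
ssd = read_time_s(TOTAL_MB, REQUESTS, latency_ms=0.1, throughput_mb_s=2400)

print(f"HDD estimate: {hdd:.1f} s")
print(f"SSD estimate: {ssd:.2f} s")
```

On the HDD most of the time goes to seeking, which is why HDD-era games duplicate data and pack it sequentially. On the SSD that layout work buys almost nothing, so a loader written around it is doing extra work for no gain.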

I hope we can finally lay this to rest.

too much eslim

Member

> Which is a blatant lie. And even IF it was true (which it's not), the advertised 2x-3x effective bandwidth increase was in comparison to the Xbox One X, so... The improvement is still there regardless. But it gets dismissed as 'secret sauce' or 'magic' or whatever other dismissive term is available.
>
> As for what an optimized load is, it's quite simple. It means it's optimized for what you're actually using. Currently, the games are optimized for an HDD, but not for an SSD. By default it makes it inefficient for the SSD. Any game that is BC and is dropped into the XSX, even though it will work and load a lot faster, is far from the best that the console can achieve.
>
> You're still loading everything from the perspective of HDD technology, which means you're loading as if you have seek times, as if you need to use duplicate data on disc, and as if you're limited to double digit MB/s. You will have unnecessary CPU overhead, unnecessary idle time and you most likely even have duplicate readings that are not necessary, reducing the SSD performance unnecessarily.
>
> And above all that, in the case of loading an untouched Xbox One game on the XSX, you're not taking advantage of the hardware decompression block, the newer decompression techniques, or the lower overhead API.
>
> I hope we can finally lay this to rest.

If someone doesn't understand the concept that none of the games shown were optimized then they are set in their agenda. No point in trying to explain anything else.

I don't know why this is still going on. Microsoft is clearly lying: they recycled the IO from the OG Xbox to maintain backwards compatibility and threw the SSD in at the last second. Phil went to the local Best Buy, busted out his corporate credit card and bought a few million SSDs. That kid at Best Buy almost pissed himself having to carry that many to Uncle Phil's lowrider Caddie. He got himself enough reward points to get a Switch and a PS5 out of the deal!

> Which is a blatant lie. And even IF it was true (which it's not), the advertised 2x-3x effective bandwidth increase was in comparison to the Xbox One X, so... The improvement is still there regardless. But it gets dismissed as 'secret sauce' or 'magic' or whatever other dismissive term is available.

I know it's a lie, that's exactly the point. But posts you've liked...

This difference is very clarifying for our disagreement. You think that PRT and texture streaming are broadly available but seldom used. I believe they are frequently used (and I'm pretty sure that's correct). So you and I both see "2-3X better than an Xbox One X game" and you think "...without texture streaming" and I think "...with texture streaming".

> The ps4 Spiderman demo load was 8.10 seconds on a PS4 (im sure that that was NOT the whole game)
>
> The optimized demo loaded in .8 seconds.
>
> The State of Decay demo was a 45 second full game load.
>
> That same game with no optimization loaded in 6.5 seconds. Sure an optimized load would be less... if an optimized load of the same game approached 4.5 seconds (optimization reducing load by 2 seconds) then the ratio is virtually the same.

Where did you get the confirmation regarding the code optimization?

MoreJRPG

Suffers from extreme PDS

> It wasn't even 10 seconds, either. It was 6.5. Dunno about that dude but 6.5 is not the same number value as 10 when both are using the same base system.

6.5 or 10 seconds is still absurdly fast. Not sure what people are trying to nitpick (well, I mean, I know why they're nitpicking). After that 10 seconds it never loads again and you forget it ever happened.

Andodalf

Banned

> 6.5 or 10 seconds is still absurdly fast. Not sure what people are trying to nitpick (well, I mean, I know why they're nitpicking). After that 10 seconds it never loads again and you forget it ever happened.

Not to mention the visible improvements in pop-in.

Trueblakjedi

Member

> Where did you get the confirmation regarding the code optimization?

Because you asked nicely

Xbox Series X loading times demo shows State of Decay 2 load in just seven seconds

Xbox Series X changes the game for load times.

www.windowscentral.com

State of Decay X One X vs X Series X loading demo

> Because you asked nicely
>
> "State of Decay 2 goes from loading in about 45 seconds on Xbox One X to just seven seconds on Xbox Series X. Even more impressively, this is with no extra work or optimization done, that's just how much State of Decay 2 benefits when installed on an Xbox Series X."
>
> Xbox Series X loading times demo shows State of Decay 2 load in just seven seconds
>
> Xbox Series X changes the game for load times. www.windowscentral.com
>
> State of Decay X One X vs X Series X loading demo

I think he is asking where it states there was optimisation done for Spider-Man. Where did you hear such a thing?

JareBear: Remastered

Banned

> I mean, my question was related to Spidey demo...
>
> In addition, this is interesting as well:

Did the Spidey footage appear to be the actual full/regular game from what you saw?

Ascend

Member

> I mean, my question was related to Spidey demo...
>
> In addition, this is interesting as well:

Just for the record;

Both the PS5 and the XSX have hardware decompression that does not rely on the CPU.

Both the XSX and PS5 designed their SSDs to have as constant a throughput as possible, without performance falling off a cliff after a short period of time, which is an advantage over the average PC SSD.

Both the XSX and the PS5 reduce/eliminate the driver overhead that PCs have.

The following is contrary to popular belief, but Sony took a brute force approach to the SSD with the PS5, while the XSX has the transfer efficiency approach.

And if you want an answer to your question regarding the spidey demo vs the XSX loading, check these two posts of mine;

Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block

And this is just ( one of ) the reason why i had right to doubt about Discord. Even members of ordinary Xbox Discord are doing that. Continuously spreading FUD about PS5 is astonishing Again that post AND THIS thread is about XVA, SFS etc...in the XSX. Not about PS5 directly. Only PS5 mentions...

Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block

The ps4 Spiderman demo load was 8.10 seconds on a PS4 (im sure that that was NOT the whole game) The optimized demo loaded in .8 seconds. The State of Decay demo was a 45 second full game load. That same game with no optimization loaded in 6.5 seconds. Sure an optimized load would be less...

This is an Xbox Series X thread. Let's try and keep it about that, shall we? If you want to focus on the PS5 tech, there are a gazillion other threads for that, including this one and this one.

thicc_girls_are_teh_best

Member

> I mean, my question was related to Spidey demo...
>
> In addition, this is interesting as well:

That method actually might have a potential disadvantage/tradeoff that I've thought of, but I'm gonna sit on it for right now

> Just for the record;
>
> Both the PS5 and the XSX have hardware decompression that does not rely on the CPU.
>
> Both the XSX and PS5 designed their SSDs to have as constant a throughput as possible, without performance falling off a cliff after a short period of time, which is an advantage over the average PC SSD.
>
> Both the XSX and the PS5 reduce/eliminate the driver overhead that PCs have.
>
> The following is contrary to popular belief, but Sony took a brute force approach to the SSD with the PS5, while the XSX has the transfer efficiency approach.
>
> And if you want an answer to your question regarding the spidey demo vs the XSX loading, check these two posts of mine;
>
> Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block
>
> And this is just ( one of ) the reason why i had right to doubt about Discord. Even members of ordinary Xbox Discord are doing that. Continuously spreading FUD about PS5 is astonishing Again that post AND THIS thread is about XVA, SFS etc...in the XSX. Not about PS5 directly. Only PS5 mentions... www.neogaf.com
>
> Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block
>
> The ps4 Spiderman demo load was 8.10 seconds on a PS4 (im sure that that was NOT the whole game) The optimized demo loaded in .8 seconds. The State of Decay demo was a 45 second full game load. That same game with no optimization loaded in 6.5 seconds. Sure an optimized load would be less... www.neogaf.com
>
> This is an Xbox Series X thread. Let's try and keep it about that, shall we? If you want to focus on the PS5 tech, there are a gazillion other threads for that, including this one and this one.

Fair enough, however I just wanted to know if it was confirmed or not that the Spidey demo was actually different code from the original game, since it was mentioned right here in this thread.

If we also go back some pages we can find several tweets related to the PS5 SSD trying to imply that Sony's solution is not able to stream assets from the SSD directly to the GPU...

So, this is the reason why I thought it would be relevant...

thicc_girls_are_teh_best

Member

> Fair enough, however I just wanted to know if it was confirmed or not that the Spidey demo was actually different code from the original game, since it was mentioned right here in this thread.
>
> If we also go back some pages we can find several tweets related to the PS5 SSD trying to imply that Sony's solution is not able to stream assets from the SSD directly to the GPU...
>
> So, this is the reason why I thought it would be relevant...

I've personally speculated that and stand by it, because if you watch for the graph in Road to PS5 that shows the I/O complex, GPU/CPU APU, and system memory they highlight the I/O complex having DMA to the RAM but never actually mention anything about SSD asset streaming to the GPU.

Which, I think given the focus Cerny had on the SSD at that presentation and how it was targeted at developers, that would've been the perfect time to mention it. Granted, I don't think PS5 actually needs that feature to make optimal use of its SSD, since it can send data to and from RAM so quickly as-is, and that seems to be the approach Sony has favored. There's a lot of nuance to it though, like anything tech-related.

Bernkastel

Ask me about my fanboy energy!

Leaving this here. Kirby Louise is definitely a dude lol.

yup he is.

Louise Kirby is a dude

He basically continued the conversation after that confirming what I said.

Bernkastel

Ask me about my fanboy energy!

> Shame they have, or have shown for now, like 2-3 exclusive games that are proper AAA level. Still waiting for their new Forza to show and what PC specs it will reveal.

Definitely more than 2-3.

And all of this before the actual game reveal event in July. They may still reveal something in June.

JareBear: Remastered

Banned

> Definitely more than 2-3.
>
> And all of this before the actual game reveal event in July. They may still reveal something in June.

When is their June show currently scheduled?

Bernkastel

Ask me about my fanboy energy!

Other exclusives

oldergamer

Member

> When is their June show currently scheduled?

I thought it was June 12th?

Bernkastel

Ask me about my fanboy energy!

> When is their June show currently scheduled?

Date yet to be revealed.

June Xbox 20/20 event rumoured to focus on the Xbox Series X itself

Microsoft's June Xbox 20/20 event, part of a monthly reveal of the Xbox Series X and its games, is rumoured to focus on the Xbox Series X itself. Microsoft d

stevivor.com

Bernkastel

Ask me about my fanboy energy!

> I thought it was June 12th?

That's PS5.

jimbojim

Banned

> I mean, my question was related to Spidey demo...
>
> In addition, this is interesting as well:

That's a RAID setup. Individually it's around 3 GB/s. Dumb comparison anyway.

Bernkastel

Ask me about my fanboy energy!

> I mean, my question was related to Spidey demo...
>
> In addition, this is interesting as well:

It's in the OP. XSX also does this.

funking giblet

Member

What a whole load of horseshit from a layman...

What do you think they actually explained there?

JareBear: Remastered

Banned

GamingBolt interview with Scorn dev just went up, a couple quotes about SSDs and Velocity architecture

There is a difference in Zen 2 CPU. The Xbox series X features 8x Zen 2 Cores at 3.8GHz, whereas the PS5 features 8x Zen 2 Cores at 3.5GHz. Your thoughts on this difference?

The most important thing is that we are done with those Jaguar CPUs from current gen. If we are talking direct comparison it will be a minuscule difference.

The PS5 features an incredibly fast SSD with 5.5GB/s read bandwidth. This is faster than anything that is available out there. How can developers take advantage of this and what will it result in, and how does this compare to Series X’s 2.4GB/s SSD read bandwidth?

For a system to take full advantage of the next gen CPU/GPUs the amount of data needed to be streamed in and out of memory is pretty big. That’s the main reason why both console manufacturers went with SSDs and a specialized I/O approach. This approach was pretty much a necessity. You could for example get similar results with average SSD speeds and more memory. You would have to preload more game data into memory, but on the other hand your SSD wouldn’t need to fetch that much data every second. When next gen engines start to incorporate these kinds of workflows, some new possibilities will open up in theory. Like having an open world game with high fidelity assets found in smaller scale games, or as they said in the Unreal 5 tech demo, movie quality assets.

What are your thoughts on the Xbox Series X’s Velocity architecture and how will it make development easier on it? Additionally, some reports have suggested that the Xbox Series X’s BCPack Texture Compression technique is better than the PS5’s Kraken. What are your thoughts on all this?

People tend to look only at the raw speed numbers of SSDs but that doesn’t tell you the whole story. Let’s say you have an SSD with a real-life performance of 2GB/s. Does that mean that 10GB of game data would be loaded in 5 sec? No, it doesn’t. Getting files off the SSD is only one part of loading the game, there are other processes that need to happen as well. That’s where these special hardware and software techniques come in, to speed up all these processes as much as possible. What combination of features and techniques will come out on top and by how much is hard to say at the moment.
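The "10GB at 2GB/s is not 5 seconds" point can be sketched as a sum of stages. The 10 GB and 2 GB/s figures come from the interview answer above; the extra stages and their durations are made-up placeholders, and the model assumes the stages run one after another with no overlap, which real loaders try hard to avoid.

```python
from dataclasses import dataclass


@dataclass
class LoadStage:
    name: str
    seconds: float


def total_load_time(data_gb: float, ssd_gb_s: float, other_stages: list):
    """Sum the raw SSD read time and any further (sequential) load stages."""
    read = LoadStage("read from SSD", data_gb / ssd_gb_s)
    stages = [read, *other_stages]
    return sum(stage.seconds for stage in stages), stages


# Hypothetical extra work after the bytes leave the drive.
extra = [
    LoadStage("decompress on CPU", 3.0),       # placeholder duration
    LoadStage("build/initialize assets", 2.0),  # placeholder duration
]

total, stages = total_load_time(data_gb=10, ssd_gb_s=2, other_stages=extra)
for stage in stages:
    print(f"{stage.name:>24}: {stage.seconds:.1f} s")
print(f"{'total':>24}: {total:.1f} s")
```

Hardware decompression and lower-overhead APIs are aimed at shrinking or overlapping the stages after the read, which is why raw drive speed alone does not settle the comparison.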

Bernkastel

Ask me about my fanboy energy!

> GamingBolt interview with Scorn dev just went up, a couple quotes about SSDs and Velocity architecture

How many interviews is this guy gonna make?

Dory16

Banned

> What a whole load of horseshit from a layman...
>
> What do you think they actually explained there?

Was it too complex for you?

funking giblet

Member

> Was it too complex for you?

No, it was made up nonsense?

Did you not get that? Maybe I can go through it in fine detail!?

Dory16

Banned

> No, it was made up nonsense?
>
> Did you not get that? Maybe I can go through it in fine detail!?

Go ahead. What did he say that was made up?

funking giblet

Member

> Go ahead. What did he say that was made up?

Let's see

They are conflating Bank Switching, which he says was directly addressable by memory in a CPU (not what it was used for, and no, it's not addressable, hence SWITCHING), used in the old days when CPUs had limited address space (they can address terabytes of memory directly now), with something which now supersedes it: Virtual Memory (which exists in any OS these days and has done for years).

Bypassing VRAM to read directly into the GPU, that's just not a thing, sorry. Made up as it were. CPUs use read-through caching (the through being RAM). Caches are too small and variable to actually pre-warm them effectively, and you certainly wouldn't need an SSD for 96 KB of L1 cache or 4 MB of L2 cache (numbers might be off, but close).

XVA is the following.

Hardware decompression, SFS + support for DirectStorage (and a nice SSD).

They most likely reserve either a partition or a dedicated page file (100 GB) so a game can wipe it and use it.

SFS is a hardware feedback buffer which replaces software ones used for a number of years now. Hardware filtering already exists for PRT (ask me why hardware works for PRT better, and why you need it).

I can even explain how feedback buffers work and why SFS won't actually help if your game already uses a buffer to figure out your current residency, as well as how effective they are in software.
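For readers who have not seen one, here is a toy version of a software feedback buffer for texture streaming. The class, function and page-key layout are all invented for illustration; this is not how SFS hardware or any particular engine implements it.

```python
from collections import Counter


class FeedbackBuffer:
    """Records which (texture, mip, tile) pages were sampled during a frame."""

    def __init__(self):
        self.requests = Counter()

    def record(self, texture: str, mip: int, tile: tuple) -> None:
        self.requests[(texture, mip, tile)] += 1

    def drain(self) -> Counter:
        """Hand back this frame's requests and start a fresh frame."""
        requests, self.requests = self.requests, Counter()
        return requests


def pages_to_load(requests: Counter, resident: set) -> list:
    """Return sampled pages that are not resident, most requested first."""
    missing = [(count, page) for page, count in requests.items()
               if page not in resident]
    missing.sort(key=lambda item: -item[0])  # stable: ties keep request order
    return [page for _, page in missing]


# Hypothetical frame: one coarse page is already resident.
resident = {("rock", 2, (0, 0))}
fb = FeedbackBuffer()
fb.record("rock", 2, (0, 0))
for _ in range(3):
    fb.record("rock", 1, (0, 1))
fb.record("rock", 0, (1, 1))

queue = pages_to_load(fb.drain(), resident)
print(queue)
```

The renderer writes what it wanted to sample, and the streaming system reads that back to decide which pages to fetch next. The argument in this post is that hardware support moves the recording step out of shader code, not that the idea itself is new.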

Or anything else?

CurtBizzy

Member

> It's in the OP. XSX also does this.

So the SSD is basically RAM?

Ascend

Member

> Let's see
>
> They are conflating Bank Switching, which he says was directly addressable by memory in a CPU (not what it was used for, and no, it's not addressable, hence SWITCHING), used in the old days when CPUs had limited address space (they can address terabytes of memory directly now), with something which now supersedes it: Virtual Memory (which exists in any OS these days and has done for years).
>
> Bypassing VRAM to read directly into the GPU, that's just not a thing, sorry. Made up as it were. CPUs use read-through caching (the through being RAM). Caches are too small and variable to actually pre-warm them effectively, and you certainly wouldn't need an SSD for 96 KB of L1 cache or 4 MB of L2 cache (numbers might be off, but close).
>
> XVA is the following.
>
> Hardware decompression, SFS + support for DirectStorage (and a nice SSD).
>
> They most likely reserve either a partition or a dedicated page file (100 GB) so a game can wipe it and use it.
>
> SFS is a hardware feedback buffer which replaces software ones used for a number of years now. Hardware filtering already exists for PRT (ask me why hardware works for PRT better, and why you need it).
>
> I can even explain how feedback buffers work and why SFS won't actually help if your game already uses a buffer to figure out your current residency, as well as how effective they are in software.
>
> Or anything else?

What about the hardware texture filters?

Dory16

Banned

> Let's see
>
> They are conflating Bank Switching, which he says was directly addressable by memory in a CPU (not what it was used for, and no, it's not addressable, hence SWITCHING), used in the old days when CPUs had limited address space (they can address terabytes of memory directly now), with something which now supersedes it: Virtual Memory (which exists in any OS these days and has done for years).
>
> Bypassing VRAM to read directly into the GPU, that's just not a thing, sorry. Made up as it were. CPUs use read-through caching (the through being RAM). Caches are too small and variable to actually pre-warm them effectively, and you certainly wouldn't need an SSD for 96 KB of L1 cache or 4 MB of L2 cache (numbers might be off, but close).
>
> XVA is the following.
>
> Hardware decompression, SFS + support for DirectStorage (and a nice SSD).
>
> They most likely reserve either a partition or a dedicated page file (100 GB) so a game can wipe it and use it.
>
> SFS is a hardware feedback buffer which replaces software ones used for a number of years now. Hardware filtering already exists for PRT (ask me why hardware works for PRT better, and why you need it).
>
> I can even explain how feedback buffers work and why SFS won't actually help, as well as how effective they are in software.
>
> Or anything else?

No doubt that you know your subject.

In sum, I gathered that the 100GB are a portion of the SSD that the XSX is capable of making the CPU "treat" as VRAM, just very slow VRAM. And the CPU has therefore almost no overhead in accessing that data.

What it can do with it seemed more blurry, he said either discarding it (big deal then) or writing it to RAM (that bus is 560GB/s, so we are 20 times beyond the best SSD speeds and I see why that would be interesting).

Are you saying, based on your expertise, that it is impossible for it to happen as such and is therefore made up, or that you just don't believe it because of some other information you have about the Velocity architecture?

funking giblet

Member

> What about the hardware texture filters?

Well let's talk about what it brings to the table.

When you are traditionally filtering a texture, you grab the full texture, create a sampling kernel function of some size and algorithm, and run the kernel over the image to create filtered image.

A high quality / filtered texture spread over a flat plane would look very weird at distance, so you may move to anisotropic filtering, and include sampling the various mipmaps to improve rendering at a distance.

With PRT, your textures are split across pages, and of course, mips. Just sampling them will mean you get weird seams when you join two pages with two parts of the same texture together, so you want those other pages too when you sample, and also their mips!

This is a lot of data to track in software, and is a pain in the ass speed wise. Hardware which stores these locations, and improves data locality as well as doing the filtering makes this a better experience for the dev.
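To make the seam problem concrete, here is a small sketch that works out which pages a single bilinear sample touches at one mip level. The square texture, square pages, texel-centre convention and clamp-to-edge addressing are all assumptions for the example; real tiled-resource layouts differ per format and per GPU.

```python
import math


def pages_for_bilinear_sample(u: float, v: float,
                              tex_size: int, page_size: int) -> set:
    """Return the (page_x, page_y) pages read by one bilinear sample.

    u, v are normalized coordinates in [0, 1]; the texture is tex_size x
    tex_size texels and is split into page_size x page_size pages.
    """
    # Move to texel space; texel centres sit at integer + 0.5.
    x = u * tex_size - 0.5
    y = v * tex_size - 0.5
    x0, y0 = math.floor(x), math.floor(y)

    pages = set()
    for ty in (y0, y0 + 1):
        for tx in (x0, x0 + 1):
            # Clamp-to-edge addressing (an assumption of this sketch).
            cx = min(max(tx, 0), tex_size - 1)
            cy = min(max(ty, 0), tex_size - 1)
            pages.add((cx // page_size, cy // page_size))
    return pages


# 256x256 texture split into 128x128 pages (hypothetical sizes).
inside = pages_for_bilinear_sample(0.25, 0.25, 256, 128)  # well inside one page
edge = pages_for_bilinear_sample(0.50, 0.25, 256, 128)    # on a vertical seam
corner = pages_for_bilinear_sample(0.50, 0.50, 256, 128)  # where four pages meet

print(inside, edge, corner)
```

A sample near a page border needs texels from the neighbouring page as well, and trilinear or anisotropic filtering repeats the same question on other mip levels. That bookkeeping is what the post describes as painful to do in software.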

The issue with this feature is that it has existed in hardware for the last 11 years.

> No doubt that you know your subject.
>
> In sum, I gathered that the 100GB are a portion of the SSd that the XSX is capable of making the CPU "treat" as VRAM, just very slow VRAM. And the CPU has therefore almost no overhead in accessing that data.
>
> What it can do with it seemed more blurry, he said either discarding it (big deal then) or writing it to RAM (that bus is 560GB/s, so we are 20 times beyond the best SSD speeds and I see why that would be interesting).
>
> You are saying based on your expertise that it is impossible to happen as such and therefore made up or that you just don't believe it because of some other information you have about the Velocity architecture?

The feature you describe is just plain ol' Virtual Memory which the phone in your pocket can do. There can be optimizations around granting a dedicated partition or file for this purpose, but again, nothing I can't do right now on my PC (I mean, your bog standard web dev might tinker with this!). The SSD itself does make Virtual Memory more useful, so let them advertise it as so, but it's a rote feature.

All of this together is very very good for consoles, but I hesitate to round them all up, and say "XVA is the killer feature" when it's just a lot of features coming together with a fast disk too.
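As a rough illustration of the "you can do this on a PC today" point, here is a sketch using Python's standard `mmap` module to address a file on disk as if it were memory, with the OS paging data in on demand. The file name and sizes are invented, and this shows ordinary OS memory mapping only, not whatever mechanism XVA actually uses.

```python
import mmap
import os
import tempfile

PAGE = mmap.PAGESIZE  # OS page size, typically 4096 bytes

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "assets.bin")  # hypothetical asset file

    # Write 64 pages; page i is filled with the byte value i.
    with open(path, "wb") as f:
        for i in range(64):
            f.write(bytes([i]) * PAGE)

    with open(path, "rb") as f:
        # Map the whole file read-only. Nothing is copied up front;
        # the OS faults pages in when they are touched.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            size = len(view)
            offset = 10 * PAGE
            chunk = view[offset:offset + 4]  # touches only page 10

print(size // PAGE, "pages mapped; bytes read from page 10:", list(chunk))
```

The code indexes the mapping like a byte array and never issues an explicit read for the other 63 pages. A fast SSD makes the page faults cheap enough for this pattern to be practical for game assets.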

Dory16

Banned

> Well let's talk about what it brings to the table.
>
> When you are traditionally filtering a texture, you grab the full texture, create a sampling kernel function of some size and algorithm, and run the kernel over the image to create filtered image.
>
> A high quality / filtered texture spread over a flat plane would look very weird at distance, so you may move to anisotropic filtering, and include sampling the various mipmaps to improve rendering at a distance.
>
> With PRT, your textures are split across pages, and of course, mips. Just sampling them will mean you get weird seams when you join two pages with two parts of the same texture together, so you want those other pages too when you sample, and also their mips!
>
> This is a lot of data to track in software, and is a pain in the ass speed wise. Hardware which stores these locations, and improves data locality as well as doing the filtering makes this a better experience for the dev.
>
> The issue with this feature is that it has existed in hardware for the last 11 years.
>
> The feature you describe is just plain ol' Virtual Memory which the phone in your pocket can do. There can be optimizations around granting a dedicated partition or file for this purpose, but again, nothing I can't do right now on my PC (I mean, your bog standard web dev might tinker with this!). The SSD itself does make Virtual Memory more useful, so let them advertise it as so, but it's a rote feature.
>
> All of this together is very very good for consoles, but I hesitate to round them all up, and say "XVA is the killer feature" when it's just a lot of features coming together with a fast disk too.

Ok. So they're right but it's not new.

It could be new in the console world. Would be strange of them to advertise that as part of their next gen glossary if it's a feature that every electronic device in the world already has, like you said.

The proof will be in the pudding.

funking giblet

Member

> Ok. So they're right but it's not new.

No I disagree fundamentally with how they stumbled around the topic and came up with just wrong answers. The issue I have is they are saying XVA somehow bypasses VRAM into the CPU because the CPU can reference the data on the SSD via something similar to Bank Switching.

You know when The Colonel says a bunch of gibberish at the end of MGS2? It's kinda like that.

You throw enough crap at a wall...

Ascend

Member

> Well let's talk about what it brings to the table.
>
> When you are traditionally filtering a texture, you grab the full texture, create a sampling kernel function of some size and algorithm, and run the kernel over the image to create filtered image.
>
> A high quality / filtered texture spread over a flat plane would look very weird at distance, so you may move to anisotropic filtering, and include sampling the various mipmaps to improve rendering at a distance.
>
> With PRT, your textures are split across pages, and of course, mips. Just sampling them will mean you get weird seams when you join two pages with two parts of the same texture together, so you want those other pages too when you sample, and also their mips!
>
> This is a lot of data to track in software, and is a pain in the ass speed wise. Hardware which stores these locations, and improves data locality as well as doing the filtering makes this a better experience for the dev.
>
> The issue with this feature is it exists in hardware for the last 11 years.

Then why are they calling it custom for the Xbox?

Seems like nothing the Xbox does is (allowed to be) new

funking giblet

Member

Nothing new under the sun. What is new is having a hardware decomp and a fast enough SSD to make Virtual Memory usage viable. Page swapping is slow. If SFS works automatically it may also encourage use of PRT because the headaches of managing feedback are gone. The actual full tilt speed of the SSD really ain’t so important when playing a game, it’s about swapping data into RAM as fast as possible before the next frame. You may only need a little data but you want it to be ready to use ASAP.
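The per-frame framing can be put into numbers with a back-of-the-envelope sketch. The 2.4 GB/s and 5.5 GB/s raw read figures are the ones quoted from the interview earlier in the thread; the function name and the 1 GB = 1000 MB convention are my own choices, and the result is a ceiling that ignores latency, compression and everything else.

```python
def per_frame_budget_mb(throughput_gb_s: float, fps: int) -> float:
    """Upper bound on data readable during one frame, in MB (1 GB = 1000 MB)."""
    return throughput_gb_s * 1000 / fps


for label, gb_s in (("XSX raw", 2.4), ("PS5 raw", 5.5)):
    for fps in (30, 60):
        budget = per_frame_budget_mb(gb_s, fps)
        print(f"{label} at {fps} fps: about {budget:.0f} MB per frame")
```

Either way the budget for a single frame is tens of megabytes at most, so how quickly the right small piece of data arrives matters more during gameplay than the headline sequential figure.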

sendit

Member

> Just for the record;
>
> Both the PS5 and the XSX have hardware decompression that does not rely on the CPU.
>
> Both the XSX and PS5 designed their SSDs to have as constant a throughput as possible, without performance falling off a cliff after a short period of time, which is an advantage over the average PC SSD.
>
> Both the XSX and the PS5 reduce/eliminate the driver overhead that PCs have.
>
> The following is contrary to popular belief, but Sony took a brute force approach to the SSD with the PS5, while the XSX has the transfer efficiency approach.
>
> And if you want an answer to your question regarding the spidey demo vs the XSX loading, check these two posts of mine;
>
> Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block
>
> And this is just ( one of ) the reason why i had right to doubt about Discord. Even members of ordinary Xbox Discord are doing that. Continuously spreading FUD about PS5 is astonishing Again that post AND THIS thread is about XVA, SFS etc...in the XSX. Not about PS5 directly. Only PS5 mentions... www.neogaf.com
>
> Xbox Velocity Architecture - 100 GB is instantly accessible by the developer through a custom hardware decompression block
>
> The ps4 Spiderman demo load was 8.10 seconds on a PS4 (im sure that that was NOT the whole game) The optimized demo loaded in .8 seconds. The State of Decay demo was a 45 second full game load. That same game with no optimization loaded in 6.5 seconds. Sure an optimized load would be less... www.neogaf.com
>
> This is an Xbox Series X thread. Let's try and keep it about that, shall we? If you want to focus on the PS5 tech, there are a gazillion other threads for that, including this one and this one.

Yep, let's call PS5's approach to I/O brute force.

Ascend

Member

> Yep, let's call PS5's approach to I/O brute force.

Do you have anything of value to add or are you just going to make baseless posts as feeble attempts at ridicule?

sendit

Member

> Do you have anything of value to add or are you just going to make baseless posts as feeble attempts at ridicule?

Just laughing at your baseless post. Nothing to see here.

Ascend

Member

I guess it's the latter then. Alright. Thanks for telling me upfront. I can safely put you on ignore without losing anything of value. Goodbye.Just laughing at your baseless post. Nothing to see here.

Nothing new under the sun. What is new is having a hardware decomp and a fast enough SSD to make Virtual Memory usage viable. Page swapping is slow. If SFS works automatically, it may also encourage use of PRT, because the headache of managing feedback is gone. The actual full tilt speed of the SSD really ain’t so important when playing a game, it’s about swapping data into RAM as fast as possible before the next frame. You may only need a little data but you want it to be ready to use ASAP.

Is what is being said impossible, or is what is being said simply different than the traditional way of doing things? I am asking because, sometimes people have trouble seeing new possibilities when they have been in something for too long. Like, it's hard for a tennis player to bat properly in baseball, and vice versa.

sendit

Member

I guess it's the latter then. Alright. Thanks for telling me upfront. I can safely put you on ignore without losing anything of value. Goodbye.

Called out for spreading misinformation:

Neo_game

Member

Leaving this here. Kirby Louise is definitely a dude lol.

I knew it. A college going chick who happens to code game engine for Xbox, Switch in her free time. Just did not sound right. lol

Trueblakjedi

Member

I guess it's the latter then. Alright. Thanks for telling me upfront. I can safely put you on ignore without losing anything of value. Goodbye.

Is what is being said impossible, or is what is being said simply different than the traditional way of doing things? I am asking because, sometimes people have trouble seeing new possibilities when they have been in something for too long. Like, it's hard for a tennis player to bat properly in baseball, and vice versa.

Texture residency hardware enhancements for graphics processors

WO2018151870A1 - Texture residency hardware enhancements for graphics processors - Google Patents

Systems, methods, apparatuses, and software for graphics processing systems in computing environments are provided herein. In one example, a method of handling tiled resources in graphics processing environments is presented. The method includes establishing, in a graphics processing unit, a...

patents.google.com

[0019] A first enhancement includes a hardware residency map feature comprising a low-resolution residency map that is paired with a much larger PRT, and both are provided to hardware at the same time. The residency map stores the mipmap level of detail resident for each rectangular region of the texture. PRT textures are currently difficult to sample given sparse residency. Software-only residency map solutions typically perform two fetches of two different buffers in the shader, namely the residency map and the actual texture map. The primary PRT texture sample is dependent on the results of a residency map sample. These solutions are effective, but require considerable implementation changes to shader and application code, especially to perform filtering the residency map in order to mask unsightly transitions between levels of detail, and may have undesirable performance characteristics. The improvements herein can streamline the concept of a residency map and move the residency map into a hardware implementation.
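For anyone who wants to see what the "two fetches" in [0019] look like, here is a rough Python sketch of the software-only pattern. It is not from the patent: the function name, the nested-list layout, and the convention that mip 0 is the most detailed level are all my own assumptions, and a real implementation would live in shader code.

```python
# Sketch of the software-only "two dependent fetches" pattern from [0019].
# Hypothetical names and data layout; mip 0 is assumed to be the finest level.

def sample_with_software_residency(residency_map, mip_chain, u, v, desired_lod):
    """Return (texel, lod_used), clamping the requested LOD to what is resident."""
    # Fetch 1: the low-resolution residency map, one entry per texture region.
    rows, cols = len(residency_map), len(residency_map[0])
    ry = min(int(v * rows), rows - 1)
    rx = min(int(u * cols), cols - 1)
    finest_resident = residency_map[ry][rx]

    # A larger mip index is coarser, so never go finer than what is resident.
    lod = max(desired_lod, finest_resident)
    lod = min(lod, len(mip_chain) - 1)

    # Fetch 2: the actual texture. It cannot start until fetch 1 has returned.
    level = mip_chain[lod]
    height, width = len(level), len(level[0])
    ty = min(int(v * height), height - 1)
    tx = min(int(u * width), width - 1)
    return level[ty][tx], lod
```

The point of the sketch is the dependency: the second lookup needs the result of the first, which is the cost the patent says it wants to move into hardware.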

[0020] A second enhancement includes an enhanced type of texture sample operation called a "residency sample." The residency sample operates similarly to a traditional texture sampling, except the part of the texture sample that requests texture data from cache/memory and filters the texture data to provide an output value is removed from the residency sample operation. The purpose of the residency sample is to generate memory addresses that reach the page table hardware in the graphics processor but do not continue on to become full memory requests. Instead, the residency of the PRT at those addresses is checked and missing pages are non-redundantly logged and requested to be filled by the OS or a delegate.
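A minimal Python sketch of the "residency sample" idea in [0020], as I read it: addresses are generated and checked against residency, no texel data comes back, and each missing page is logged only once. The page size, the set-based bookkeeping, and every name below are assumptions for illustration, not the patent's hardware design.

```python
PAGE_SIZE = 64 * 1024  # assumed 64 KiB pages, purely illustrative


def residency_sample(addresses, resident_pages, pending_requests):
    """Check residency for byte addresses without fetching any texel data.

    Missing pages are added to pending_requests at most once (the
    "non-redundantly logged" part). Returns the pages newly requested
    by this call, in first-seen order.
    """
    newly_requested = []
    for address in addresses:
        page = address // PAGE_SIZE
        if page in resident_pages:
            continue  # already in memory, nothing to request
        if page in pending_requests:
            continue  # already logged by an earlier sample
        pending_requests.add(page)
        newly_requested.append(page)
    return newly_requested
```

In this sketch whoever services `pending_requests` (the "OS or a delegate" in the patent text) would load those pages and move them into `resident_pages`.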

[0078] Example 19: A method of handling partially resident textures in a graphics processing unit (GPU), the method comprising establishing, in a GPU, a hardware residency map with values indicating residency properties of a texture resource, and sampling from the hardware residency map at specified locations to determine corresponding residency map samples each comprising an integer portion and fractional portion, where the integer portion indicates an associated lowest level of detail presently resident for the texture resource, and where the fractional portion represents an associated smoothing component for transitioning in a blending process with a next level of detail concurrently resident for the texture resource.

That's from the HW texture filter patent.
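To make the integer/fractional split in Example 19 concrete, here is a small Python sketch. The patent text only says the integer part is the lowest resident level of detail and the fraction is a smoothing component for blending with the next level; the linear blend and its direction below are my assumption, and `texel_at_lod` is a hypothetical callback standing in for a texture fetch.

```python
import math


def split_residency_sample(value):
    """Split a residency map sample into (integer LOD, fractional part)."""
    integer_lod = math.floor(value)
    return integer_lod, value - integer_lod


def blend_levels(value, texel_at_lod):
    """Blend the resident LOD with the next one using the fractional part.

    Assumes a plain linear blend; the real hardware filter may differ.
    """
    lod, fraction = split_residency_sample(value)
    current = texel_at_lod(lod)
    following = texel_at_lod(lod + 1)
    return (1.0 - fraction) * current + fraction * following
```

So a sample of 2.25 would mean "level 2 is resident here, and blend 25% of the way toward level 3", which is how a transition between levels of detail could be smoothed instead of popping.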