JonnyMP3

Member

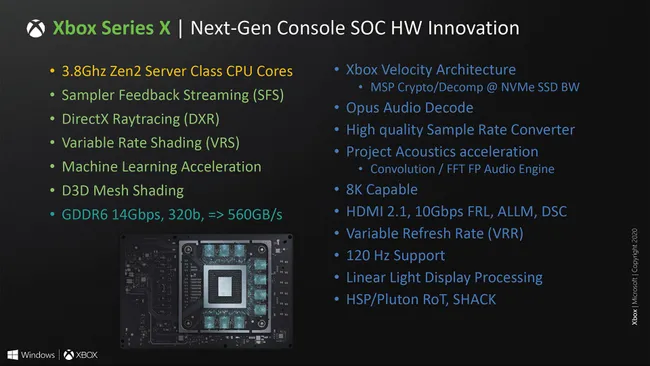

What is the XSX going to use? Mesh Shaders still require hardware support to use their full capabilities. Not all GPUs support Mesh Shaders. In fact, the only GPUs that currently support mesh shaders are the RTX 20xx series of cards. Please provide evidence to the contrary if you're going to continue making unfounded claims. Here's an article on Mesh Shaders

Mesh Shaders.

Does it have an Nvidia card? No, it's AMD.

Not all GPUs can use Mesh Shaders, but somehow RDNA2 allows Microsoft to do that on the XSX.