Ronaldo8

SF is not SFS, as you so eloquently explained. SF without the texture filters is not very useful, since there will always be a delay between determining that a mip is not resident and it being made available. If you are targeting mip changes on a nearly per-frame basis (as I suspect MS is trying to do), then specialised hardware needs to be available to smooth the transitions, or else it will be pop-in galore, which defeats the purpose of texture streaming.

Shifty Geezer

I don't think so. You can just prefetch a little earlier instead of later. SF improves the implementation of tiled resources to make it easier and more efficient. SFS helps hide situations where your prefetch has failed, but if your prefetch is good enough, that shouldn't happen that often (if ever!). Potentially, the faster your storage, the less that is a problem. In RAGE for example, SFS would have softened the texture transitions so they were less jarring, as I understand it. But if running from a modern M.2 SSD, that pop-in wouldn't happen in the first place for SFS to help at all.

Ronaldo8

MS invested in silicon to address situations that will almost never happen... Good to know.

Shifty Geezer

I didn't say that, but it wouldn't be unheard of either. How much did the tessellation hardware in Xenos see? How much action has the ID buffer of PS4 Pro had?

This is a technical discussion. We should discuss the technology without leaning on faith that every choice is awesome or ground-breaking. Talk about what SF is, where SFS fits in, and how they'll be used.

Ronaldo8

Leaning on faith? I am not the one drawing conclusions about a core next-gen feature based on speculative techniques (that failed) from generations past. James Stanard himself made it clear in a tweet convo that those filters were necessary to correctly pull off SFS. Straight from the horse's mouth.

Shifty Geezer

Yes. Your discussion isn't technical. In that reply, you aren't talking about how SF and SFS will be used, and what advantages SFS could bring; you've just said, "MS have included it so it must be useful." That's a non-technical argument of faith.

From your tweet, you may notice pop-in at tile boundaries. So now let's talk about tile boundaries, possible solutions to tile pop-in, where SFS features in them, and other technical points.

Ronaldo8

You doth protest too much. James Stanard, one of the system architects of the XSX, said it was useful. I don't assume to know more than him unlike others.

Tech talk? How about this:

There is an excellent GDC talk by Sean Barrett about the case where a page is not yet resident. What to do? Use bilinear filtering (in hardware; in Barrett's own words, a software implementation is an unnecessary hassle) on the residency map, after "padding" it astutely, to solve the issue of sampling texels from adjacent pages that are inherently decorrelated. Sampling from adjacent pages will introduce artifacts as mentioned by Stanard:

Those patented MS "texture filters" are in fact a modified form of bilinear filtering, as explained in the patent (https://patentimages.storage.googleapis.com/ae/20/a0/313511519c3caa/US20180232940A1.pdf).

More importantly, texture filtering and blending is done any time a transition to the next LOD is occurring irrespective of whether the next LOD is resident or not. MS explicitly provide an example where a PRT is created and the residency map is constantly updated with successive mip levels corrected by a fractional blending factor.
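To make the residency-map idea concrete, here is a minimal sketch, not the patented filter itself. It assumes the residency map stores, per tile, the finest mip level currently resident (0 = full detail), samples that map bilinearly with edge clamping, and uses the result as a floor on the LOD the sampler wanted. The names `bilinear_sample` and `clamped_lod` are hypothetical.

```python
import math

def bilinear_sample(grid, u, v):
    """Bilinearly sample a 2D grid at normalized (u, v) in [0, 1], clamping at the edges."""
    h, w = len(grid), len(grid[0])
    # Texel centers sit at integer + 0.5 in texel space.
    x = u * w - 0.5
    y = v * h - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def at(ix, iy):
        # Clamp-to-edge addressing.
        ix = min(max(ix, 0), w - 1)
        iy = min(max(iy, 0), h - 1)
        return grid[iy][ix]

    top = at(x0, y0) * (1 - fx) + at(x0 + 1, y0) * fx
    bottom = at(x0, y0 + 1) * (1 - fx) + at(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy

def clamped_lod(residency, u, v, wanted_lod):
    """Never sample finer than what the filtered residency map says is resident."""
    return max(wanted_lod, bilinear_sample(residency, u, v))

# Assumed example: left tile has mip 0 resident, right tile only mip 2.
residency = [[0.0, 2.0]]
for u in (0.25, 0.5, 0.75):
    print(u, clamped_lod(residency, u, 0.5, wanted_lod=0.0))
```

Because the map is filtered rather than point-sampled, the LOD floor ramps from 0 to 2 across the tile boundary instead of jumping, which is the fractional blending factor in question.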

Also, you can only prefetch what you can foresee and not what you figure out after sampling by which time you already need it.

Shifty Geezer

That's not happening. You can't load a texture from storage the moment the GPU realises it's needed; that's just too slow. If SSDs could work that fast, there'd be no market for Optane. SSD access times are in microseconds, versus nanoseconds for DRAM (which in itself is horrifically slow compared to the working storage of processor caches).
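A rough back-of-envelope version of that argument follows. Every figure is an illustrative assumption (a 1.8 GHz GPU clock, 100 ns DRAM latency, 100 µs SSD read latency, a 60 fps frame), not a measured value for any console.

```python
# All figures below are illustrative assumptions, not measured values.
GPU_CLOCK_GHZ = 1.8          # assumed GPU clock
DRAM_LATENCY_NS = 100.0      # assumed DRAM access latency
SSD_LATENCY_NS = 100_000.0   # assumed SSD read latency (100 microseconds)
FRAME_TIME_NS = 1e9 / 60     # one frame at 60 fps

def stall_cycles(latency_ns, clock_ghz=GPU_CLOCK_GHZ):
    """GPU clock cycles that elapse while waiting latency_ns nanoseconds."""
    return latency_ns * clock_ghz

def fraction_of_frame(latency_ns, frame_time_ns=FRAME_TIME_NS):
    """Share of one frame's time budget taken up by latency_ns."""
    return latency_ns / frame_time_ns

print("DRAM stall cycles:", stall_cycles(DRAM_LATENCY_NS))
print("SSD stall cycles: ", stall_cycles(SSD_LATENCY_NS))
print("SSD read as a fraction of a frame:", fraction_of_frame(SSD_LATENCY_NS))
```

Under those assumptions, waiting on the SSD mid-draw would cost around 180,000 cycles, a thousand times the DRAM miss, while the same read issued asynchronously is well under 1% of a frame.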

Ronaldo8

SFS as a VRAM capacity (distinct from bandwidth) saver/multiplier implies exactly that.

Shifty Geezer

How do you address the microseconds of latency? Is the GPU going to sit waiting for the data to arrive, or stop what it's drawing, draw something else (or compute something else), then come back to drawing with the texture when it finally arrives and the object with the correct LOD in the right place without messing up the drawing it's already done?

Also, if you can fetch data on demand from disk, why would you need a mechanism for soft transitioning between LODs - SFS? Users would never see a transition because the correct LOD would always be present on demand, no?

iroboto

just thinking about frustum culling etc.

I think from further away, say MIP10, because it’s so far away you pull that on demand, and it’s a blend of uggo at that draw distance.

If you’re strafing left and right, you’re loading in tiles that are out of view before you can actually see it I suspect. I don’t know how much of this translates to how tight you can cut it with SSD. But some testing would be required.

Shifty Geezer

Why is a high MIP something you'd pull on demand? As in, why is that more latency tolerant? The issue isn't BW but the time between sampler feedback stating during texture sampling (as the object is being drawn), "I need a higher LOD on this texture", and the texture sampler getting that new texture data from the SSD.

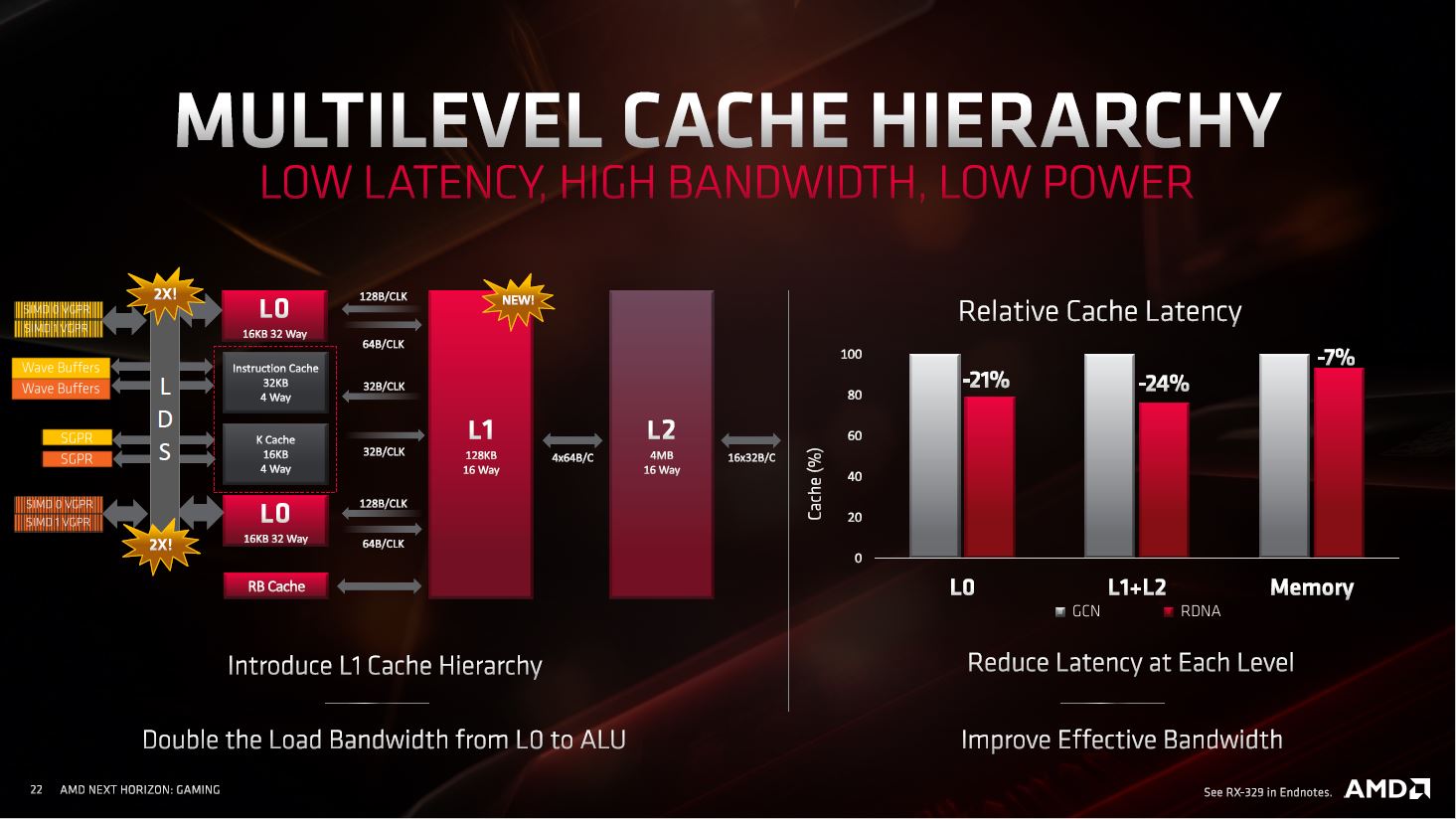

Texturing on GPUs is only fast and effective because the textures are pre-loaded into the GPU caches for the texture samplers to read. The regular 2D data structure and data access makes caching very effective. The moment texture data isn't in the texture cache, you have a cache miss and stall until the missing texture data, many nanoseconds away, is loaded. At that point, fetching data from SSD is clearly an impossible ask.

The described systems included mip mapping and feedback to load and blend better data in subsequent frames. You want to render a surface. The required LOD isn't in RAM so you use the existing lower LOD to draw that surface, and start the fetching process. When the higher quality LOD is loaded a frame or two later, you either have pop-in or you can blend between LOD levels, aided by SFS if that is present.
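That frame-to-frame flow can be sketched as a toy simulation. It assumes the miss is reported on frame 0, the finer mip arrives a fixed number of frames later, and the renderer then either snaps to it (pop-in) or ramps the effective LOD linearly over a few frames. The function name and parameters are hypothetical, and the linear ramp is only a stand-in for whatever blending the hardware does.

```python
def simulate_stream_in(coarse_mip, wanted_mip, load_latency_frames,
                       blend_frames, total_frames):
    """Return the effective (possibly fractional) mip drawn on each frame.

    blend_frames=1 means the new mip is used at full weight as soon as it
    arrives (pop-in); larger values ramp linearly from coarse to wanted.
    """
    drawn = []
    arrival = load_latency_frames  # request issued on frame 0
    for frame in range(total_frames):
        if frame < arrival:
            # Finer data not resident yet: draw with what is already in RAM.
            lod = float(coarse_mip)
        else:
            # Finer data is resident: blend toward it.
            t = min(1.0, (frame - arrival + 1) / blend_frames)
            lod = coarse_mip + (wanted_mip - coarse_mip) * t
        drawn.append(lod)
    return drawn

# Hard switch versus a 4-frame blend, with a 2-frame load latency.
print(simulate_stream_in(3, 1, load_latency_frames=2, blend_frames=1, total_frames=7))
print(simulate_stream_in(3, 1, load_latency_frames=2, blend_frames=4, total_frames=7))
```

In both runs the surface is drawn at the coarse mip until the data lands; the only difference is whether the change afterwards is a jump or a ramp.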

When it comes to mid-frame loads as described in that theoretical suggestion in the earlier interview (things to look into for the future), we'd be talking about replacing data that's no longer needed this frame. There's no way mid-rendering data from storage is ever going to happen on anything that's not approaching DRAM speeds. The latencies are just too high.