winjer

Gold Member

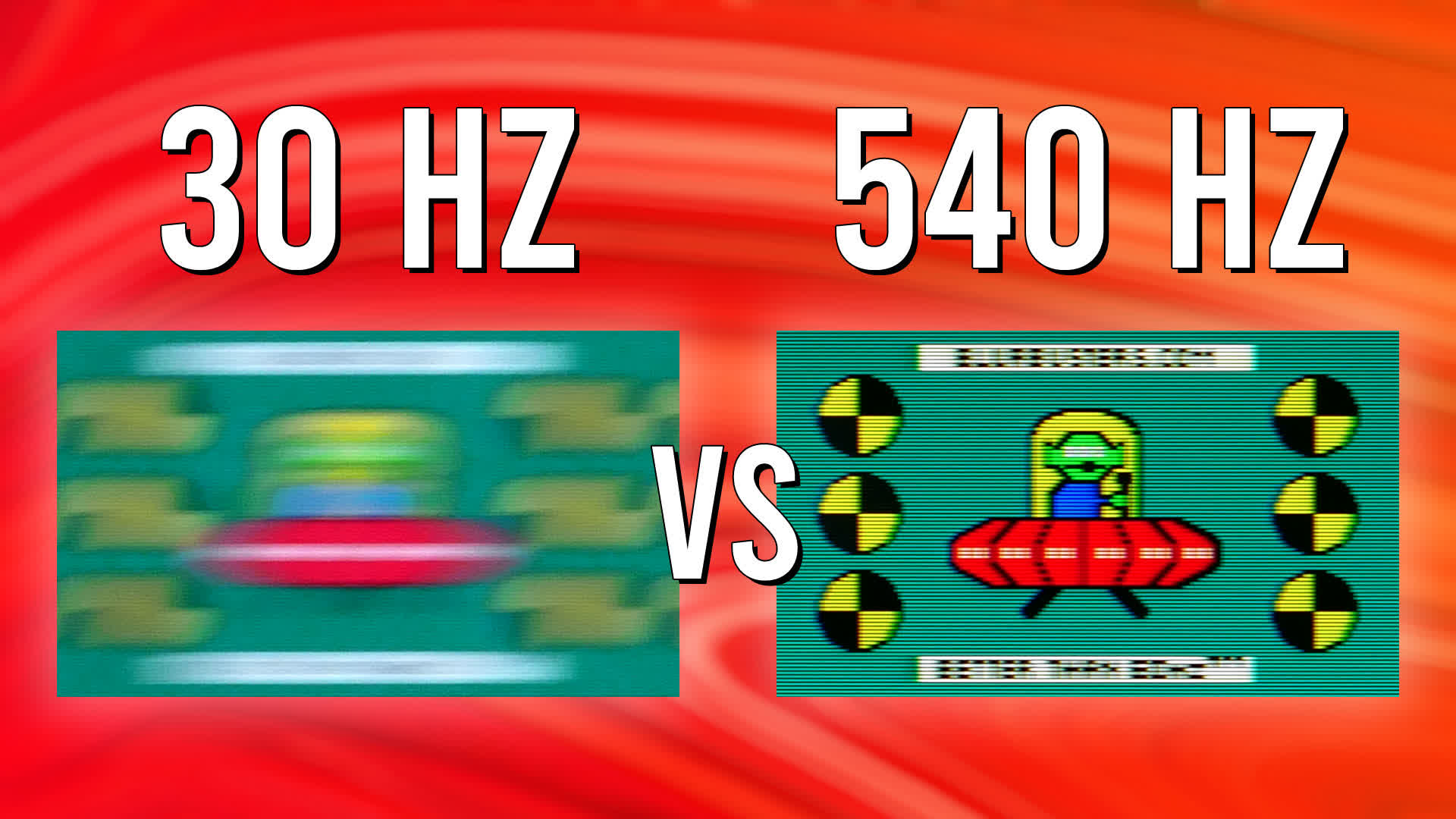

Why Refresh Rates Matter: From 30Hz to 540Hz

Are higher refresh rates better for gaming? Yes, they are. In this article we'll explain why refresh rates often labeled as "overkill" might actually offer more improvement...

www.techspot.com

Higher refresh rates in gaming are superior for three primary reasons: input latency, smoothness, and visual clarity. We'll mainly discuss the last reason in this article, but first, let's briefly address input latency and smoothness, as they are also significant benefits of high refresh rate monitors.