Microsoft Xbox Series X's AMD Architecture Deep Dive at Hot Chips 2020

VideodromeX

Member

How trustworthy is Matt when it comes to hardware? From what I remember he used to know some people at Microsoft. But is he an expert in hardware or is he just accepted as such, because of his mod status at REeeee?

Good question...

TheGreatWhiteShark

Member

The ram is one part of a “System”. In fact 6/10 gig on XsX is slower than PS5 if you want to compare just one aspect of the whole thing. But thanks for proving the point of my original post.

Those 6 GB are going to be used for OS and CPU stuff.

Also, take note that it is the part of the memory where the SSD will stream directly to the CPU to process, and in that part the GPU has direct snoop, as we learned 2 days ago.

Trying to make this sound like a negative is just trying too hard for nothing.

M1chl

Currently Gif and Meme Champion

How trustworthy is Matt when it comes to hardware? From what I remember he used to know some people at Microsoft. But is he an expert in hardware or is he just accepted as such, because of his mod status at REeeee?

Not sure, but he is respected, as far as I know. Not sure how much of an industry person he is outside of being on Reee and bullying Asian people.

geordiemp

Member

I interpret his claim about 2.23Ghz as two things. 1) performance gains above this are marginal with the amount of memory bandwidth they have and 2) it is possible the voltage required to go above this for their 'baseline'* apu is higher than they want as it creates too much silicon degradation.

*Since they want all PS5's to behave the same regardless of silicon quality they need to find the worst passable examples and use those to create the smartshift profiles all consoles will use.

Well, I beg to differ. I take it as propagation logic, going by the phrasing he used. I also assume the patented compression from vector shader to pixel shader, the large wavefront transfer and the cache storage also help achieve that.

I am sure Sony engineers have a lot of balance and performance data and have tuned accordingly to their design, as will have Microsoft. You're just guessing now.

I don't agree on silicon degradation from voltage; that's not correct for a GPU.

Also, memory bandwidth is a factor in balancing the overall system design, but performance is also helped by all caches and functions operating in fewer cycles, so no on that one either.

Just accept what Cerny says until we receive a) a PS5 teardown and b) PC parts.

But that would be on the presumption that the CPU/GPU is not going to have any sort of power throttle and would always run at full frequency and full load. I don't think that's the situation here. Basically, if you lock your CPU and GPU at some frequency, they are not really consuming that much more resources. Load is also a factor.

However, we know nothing about how much headroom the XSX has with the PSU, which is only like 300W (rated), but the plug is up to 250W, so in that case it could prove beneficial. Something like the Turing architecture is bound by power, not temps (the surface area of the die is so big that the cooler can easily take away all the heat).

But if that SoC's power is well within the spec of the PSU, which is the smart thing to do, because otherwise or monitoring power delivery inside the APU (seems unrealistic, based on the common methods which are used in these cases - not SmartShift), then I don't see a reason for a chip with sustained clocks to lose performance. BUT, we don't know whether the XSX throttles those sustained clocks down, because that's not some glamorous technology, that's a fail-safe mechanism.

But obviously it's hard to draw conclusions about the console itself as of now. I am just discussing the concept of how that would work.

I imagine Sony are doing something like this: up to 80% APU utilisation (just as an example number) you get full clockspeed, but above this you have to start reducing clocks.

Since the thermal and power limit is set at below 100% utilisation you can have higher clocks than if you were setting the clockspeed based on power and thermals at 100% utilisation so it adds performance in the majority of real use cases and only reduces performance in a few edge cases.

With MS they have a system where their thermal and power solution can handle the APU being at 100% utilisation so in the majority of cases they have left clockspeed headroom on the table.

Sony

Nintendo

The variable clocks discussion is tiring and pointless without knowing what the sustained clocks are.

You have max clocks and sustained clocks. That means the PS5 frequencies are variable but capped at 3.5 GHz for the CPU and 2.23 GHz for the GPU. Those are the official, max frequencies. The 100%.

Some posters here imply that variable frequencies allow the CPU and GPU to go beyond 3.5 GHz and 2.23 GHz. They won't and they can't. That's also why I don't like Matt's wording of "they are variable to get more performance out of the hardware". That IS the performance of the hardware.

In other words, The CPU's 100% is 3.5Ghz and the GPU's 100% is 2.23 Ghz.

Any variation below that will have the CPU run at [100%-X%] and the GPU run at [100%-Y%], where X and Y can differ per scene.

That's why it's so important to know what the PS5's sustained/base clocks are if developers don't choose to vary them.

Overly simplified, this means that for the PS5:

CPU Clock = X Ghz + CPUvar ≤ 3.5 Ghz

GPU Clock = Y Ghz + GPUvar ≤ 2.23 Ghz

Where:

X = base/sustained/minimum clock speed of the CPU

Y = base/sustained/minimum clock speed of the GPU

CPUvar = variable clock range of the CPU

GPUvar = variable clock range of the GPU

We don't know what X and Y are and we don't know what the conditions for CPUvar and GPUvar are.

Sony's smart shift technology dictates the CPUvar and GPUvar based on workload. But we don't know from which setpoint.
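To make the model above concrete, here is a minimal Python sketch of it. Only the two caps (3.5 GHz and 2.23 GHz) come from the posts; the base clocks and variable ranges you pass in are placeholders, since nobody here knows the real X, Y, CPUvar or GPUvar. The function names are made up for illustration.

```python
# Caps quoted in the thread; everything else is a placeholder input.
CPU_CAP_GHZ = 3.5
GPU_CAP_GHZ = 2.23


def effective_clock(base_ghz: float, var_ghz: float, cap_ghz: float) -> float:
    """Clock = base + variable part, never above the official cap."""
    if base_ghz < 0 or var_ghz < 0:
        raise ValueError("base and variable parts must be non-negative")
    return min(base_ghz + var_ghz, cap_ghz)


def percent_of_max(clock_ghz: float, cap_ghz: float) -> float:
    """Express a clock as a percentage of the cap (the '100%')."""
    return 100.0 * clock_ghz / cap_ghz


if __name__ == "__main__":
    # Hypothetical numbers only: a 2.0 GHz base with 0.5 GHz of variable range
    # still tops out at the 2.23 GHz cap.
    gpu = effective_clock(2.0, 0.5, GPU_CAP_GHZ)
    print(gpu, percent_of_max(gpu, GPU_CAP_GHZ))
```

Whatever the real values are, the sum can only ever reach the cap, never exceed it, which is the whole point of the inequality.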

TBiddy

Member

The ram is one part of a “System”. In fact 6/10 gig on XsX is slower than PS5 if you want to compare just one aspect of the whole thing. But thanks for proving the point of my original post.

As a side note I seem to remember the PS4 operating somewhat in the same manner. There's a thread about it here. I'm not sure if it compares directly, though.

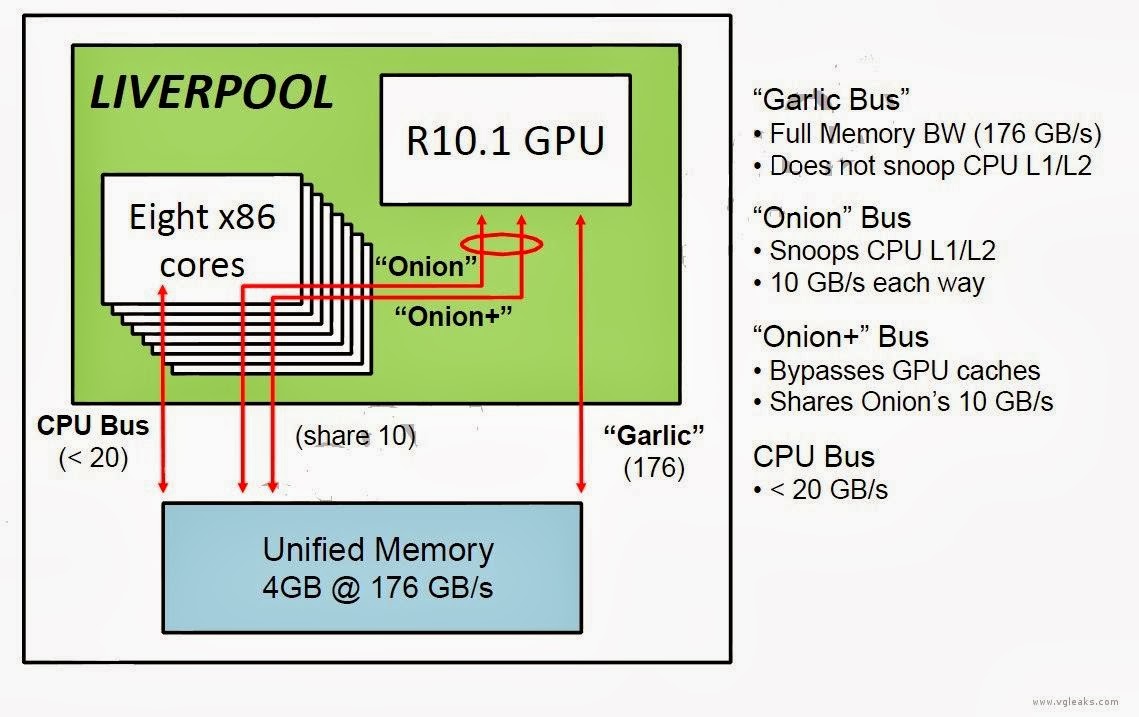

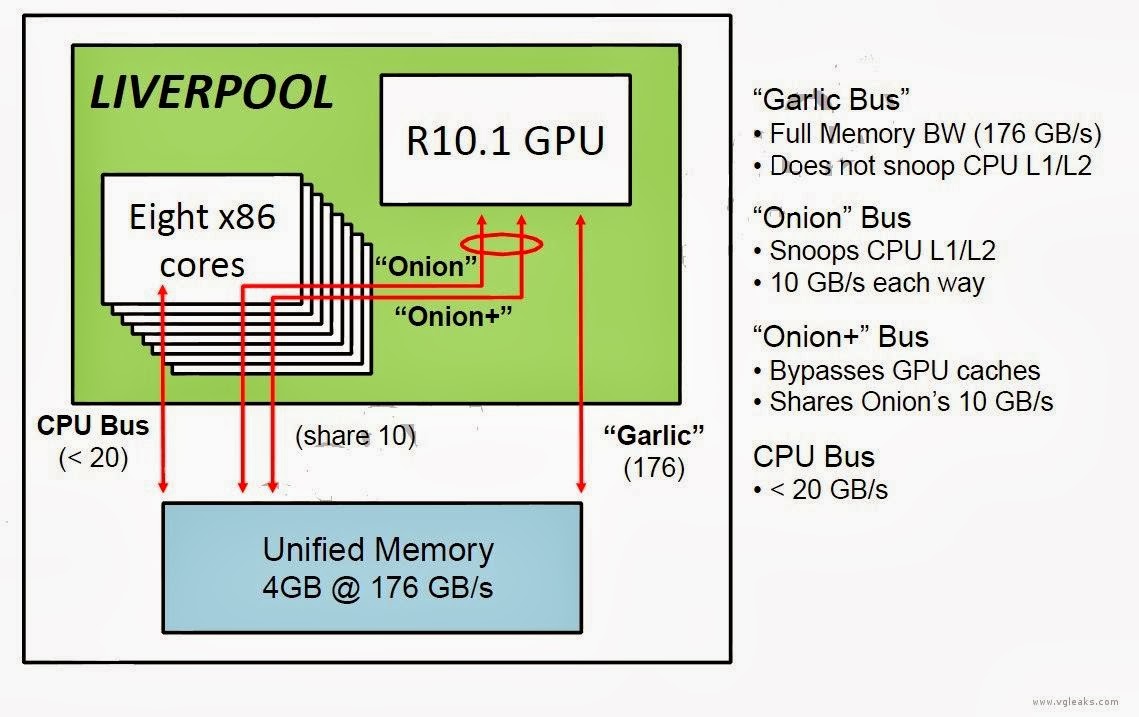

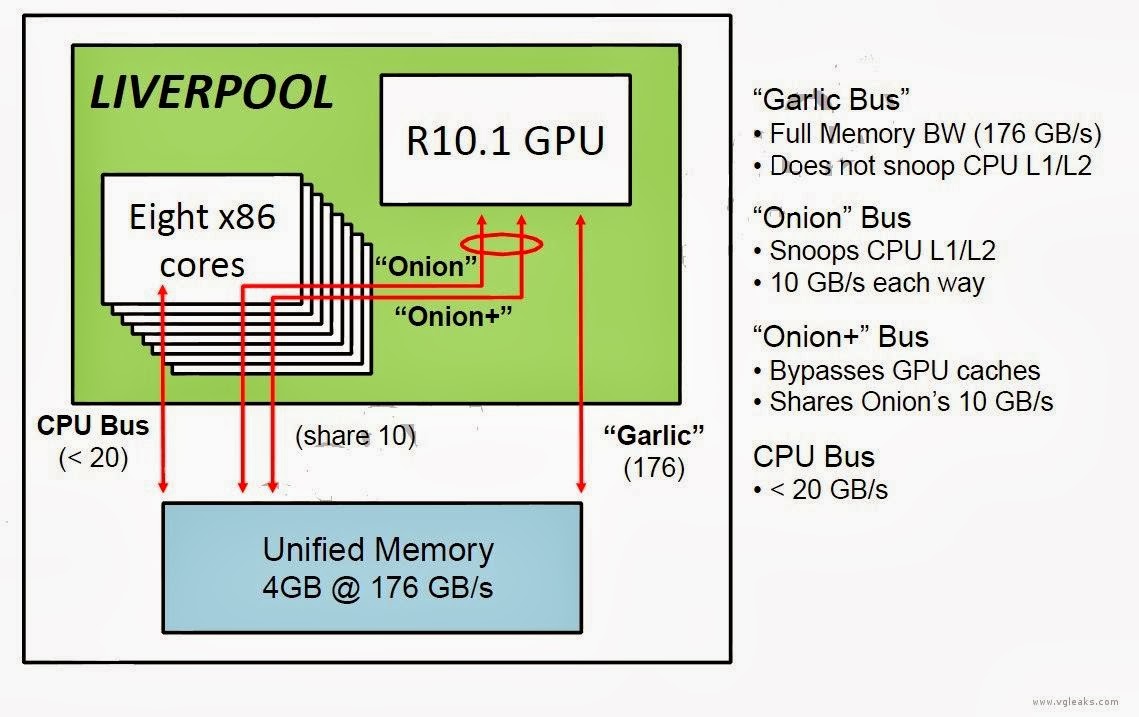

PS4's memory subsystem has separate buses for CPU (20Gb/s) and GPU(176Gb/s)

Eurogamer has an interesting article on the process of porting Ubisoft racer The Crew from PC to PS4. In it, developers of the PS4 version speak in detail about the porting process. It's a fascinating read...

jimbojim

Banned

Well, we know the GPU can hold steady 2.23 GHz because you can fix that frequency in the dev-kit (same chip) to test how your code works at different frequencies.

So the chip is capable of the fixed frequency, so it must be another problem why the console can't hold it. And there we have the fixed power delivery (via smartshift) that is needed because of the cooling solution (at least this seems to be the only reason why they won't fix the frequency at their maximum settings).

Based Cerny in Eurogamer interview about locked profiles in devkits :

"Regarding locked profiles, we support those on our dev kits, it can be helpful not to have variable clocks when optimising. Released PS5 games always get boosted frequencies so that they can take advantage of the additional power," explains Cerny.

Like i've said in one of my posts here before :

"PS5 variable clocks are there to balance the load between the CPU and the GPU in order to eliminate per frame bottlenecks, they're not there because "the system can't handle both at max clocks". Whenever there's a bottleneck in either the CPU/GPU, power can be channeled from either one of them to the other without compromising performance thus making the system more resilient to bottlenecks.

With a system like the Series X, you just throw fixed power at both and if there's a bottleneck in either the CPU or the GPU at a given time, the bottleneck simply occurs.

Now, of course the Series X still has a more powerful CPU/GPU, that's not up for debate, what I'm trying here to say is that if the system was also designed as a variable clock system with the APU load balancing like the PS5, it would end up being even more powerful than what it currently is precisely because of that."

I don't need bottlenecks with locked clocks in PS5. Thanks!

Why do you want the CPU and GPU to work at max clocks (even in situations where CPU or GPU power isn't necessary) when they won't be utilized 100%, like in the XSX's case, and some power will be left on the table?

jimbojim

Banned

That's also why I don't like Matt's wording of "they are variable to get more performance out of the hardware". That IS the performance of the hardware.

That's not what Matt implied. Like Jason Ronald said, if they went with variable clocks, the XSX would have more FLOPS. That's what Matt was implying: the XSX would have more FLOPS.

Well, I beg to differ. I take it as propagation logic, going by the phrasing he used.

The only time I have heard of signal propagation delay causing issues is when traversing large caches. I think MS had this issue with increasing the size of the ESRAM because of how large it was.

I am sure Sony engineers have a lot of balance and performance data and have tuned accordingly to their design, as will have Microsoft. You're just guessing now.

Of course I am guessing since we have no solid information to go on. That is what makes speculation fun: make guesses and see how close you get.

I don't agree on silicon degradation from voltage; that's not correct for a GPU.

Excessive voltage causes silicon degradation in all applications. If you overvolt silicon you shorten its lifespan, so for these sorts of consumer applications voltage is kept at a level that will ensure it lasts.

Also, memory bandwidth is a factor in balancing the overall system design, but performance is also helped by all caches and functions operating in fewer cycles, so no on that one either.

Except we know bandwidth matters to RDNA because the 36CU 5600XT with higher clocks than the 36CU 5700 has lower performance simply due to having a smaller memory bus and less bandwidth. This shows even at resolutions where having 6GB of RAM should not be a bottleneck.

Just accept what Cerny says until we receive a) a PS5 teardown and b) PC parts.

Nothing wrong with waiting and seeing. I just like speculation.

TBiddy

Member

It's not that what Matt implied. Like Jason Ronald said, if they went with variable clocks, XSX would have more FLOPS. Matt implied to that. XSX would have more FLOPS

Jason Ronald also said that it makes it harder for developers to optimize, when the clocks are variable.

That said, it's quite tiresome that the thread is once again about the PS5.

Thirty7ven

Banned

That said, it's quite tiresome that the thread is once again about the PS5.

#3 post in the thread is a Xbox fan bringing the PS5 into the equation. #11 is a known Xbox fan making direct comparisons. You would know since you thought he was being "thoughtful".

What's tiresome is Xbox fans always needing the PS5 in the conversation, and then acting like victims when the conversation is about the PS5.

jimbojim

Banned

Jason Ronald also said that it makes it harder for developers to optimize, when the clocks are variable.

That said, it's quite tiresome that the thread is once again about the PS5.

Harder for devs? Do 3rd-party devs have a problem with the PS5? Nope. Maybe devs on Xbox would have a problem with DX12, since they need to develop across Xbox consoles and PC and divergence between Xbox and PC isn't that good (as stated at Hot Chips).

That's not exactly how it works, no software feature will ever allow you to store 25GB of data inside 13GB RAM, it's the opposite - instead of let's say 10GB used normally thanks to SFS only 4 will be needed, requiring less bandwidth, and leaving space for additional data to be stored.

I said effectively able. They've said a 60% memory and bandwidth savings (2.5x multiplier) for SFS in most of their articles. If you save 60% of 2.4 GB/s 10GB then you're only using 40% of the raw bandwidth. If you used 100% of the raw bandwidth with SFS then it would be (2.4 ÷ 0.4) or (2.4 x 2.5), whichever you prefer, giving 6 GB/s, and the same for memory.

Quite bizarre that you started with the math but you didn't finish.

instead of let's say 10GB used normally thanks to SFS only 4 will be needed

If you used 10 GB normally but now you only need 4 GB. Then if you need to use 25 GB you would only need 10 GB right? (10 /4) = 2.5, (25/10) = 2.5 It seems you understand the concept but it also doesn't seem like you understand simple math.
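For anyone who wants to check the arithmetic being argued over, here is a small Python sketch. The 60% savings figure is the one quoted in the posts above; the function names are made up, and this only shows that "10 GB becomes 4 GB" and "2.5x multiplier" are the same ratio. It says nothing about how SFS behaves in a real game.

```python
def effective_multiplier(savings_fraction: float) -> float:
    """If a fraction of the data never has to be loaded, the remaining
    fraction does the same job, so the multiplier is 1 / (1 - savings)."""
    if not 0.0 <= savings_fraction < 1.0:
        raise ValueError("savings_fraction must be in [0, 1)")
    return 1.0 / (1.0 - savings_fraction)


def raw_needed(effective_amount: float, savings_fraction: float) -> float:
    """Raw memory or bandwidth needed to cover a given 'normal' amount."""
    return effective_amount * (1.0 - savings_fraction)


def effective_amount(raw_amount: float, savings_fraction: float) -> float:
    """'Normal' amount of data that a raw budget stands in for."""
    return raw_amount * effective_multiplier(savings_fraction)


if __name__ == "__main__":
    savings = 0.6  # figure quoted in the thread
    print(effective_multiplier(savings))   # roughly 2.5
    print(raw_needed(10, savings))          # roughly 4 (GB)
    print(raw_needed(25, savings))          # roughly 10 (GB)
    print(effective_amount(2.4, savings))   # roughly 6 (GB/s)
```

Both posters are using the same ratio; the disagreement is over whether to describe it as "needing less" or "effectively having more".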

Sony

Nintendo

It's not that what Matt implied. Like Jason Ronald said, if they went with variable clocks, XSX would have more FLOPS. Matt implied to that. XSX would have more FLOPS

In itself, variable clocks vs. sustained clocks is a pointless discussion. Context is key.

If Xbox had gone for variable clocks, they might have had a higher theoretical clock and maximum performance.

But even in that case, it's not a matter of Sustained Clocks = 100% performance and Variable Clocks = 100+V % performance, where V is the performance gain from choosing variable clocks.

CPU Clock = X Ghz + CPUvar ≤ Peak clock

GPU Clock = Y Ghz + GPUvar ≤ Peak clock

Yes, it's likely that choosing variable clocks could increase the peak clocks. But the new peaks will not be sustained; they need a scene-specific utilization of CPU and GPU within the variance, which is handled by SmartShift.

Shmunter

Member

As a side note I seem to remember the PS4 operating somewhat in the same manner. There's a thread about it here. I'm not sure if it compares directly, though.

PS4's memory subsystem has separate buses for CPU (20Gb/s) and GPU(176Gb/s)

Eurogamer has an interesting article on the process of porting Ubisoft racer The Crew from PC to PS4. In it, developers of the PS4 version speak in detail about the porting process. It's a fascinating read...

Different. Split bus, one continuous memory pool.

MarkMe2525

Member

It sounds good (Dolby Atmos) but it's fake and pretty limited, that's the truth of it. You don't need fancy headsets, only good quality headsets that support basic 2.0 Channel. That's why Dolby went bitching around after the PS5 GDC as they're not needed anymore for PS5.

It'll support all of them anyway, but it's more accurate with headsets.

Even basic TV stereo will have some special 3D audio simulation, most likely starting with Sony TV's.

And yes, every fucking droplet will produce its own sound, not a baked soundtrack put in some location.

Atmos is fake? It's a video game, it's all fake. It also sounds awesome. My analogy may be shit but isn't this the same as saying Horizon Dawn isn't impressive because it's a fake generated image of an open world?

Bo_Hazem

Banned

Atmos is fake? It's a video game, it's all fake. It also sounds awesome. My analogy may be shit but isn't this the same as saying horizon Dawn isn't impressive because it's a fake generated image of an open world?

Ok let's make it clear: HRTF 3D audio only needs simple 2.0 channel stereo. Dolby Atmos could probably do that but limited to 32 sources, but they mostly go for licensed headsets which are expensive and use 7.1 channel simulation to make 3D audio. HRTF-based 3D audio doesn't need 5.1/7.1 etc; it works great with basic 2.0 channel, meaning any stereo headphones/headsets, and it's vastly superior and more accurate, especially if you have your HRTF measured or find something close to yours. Such audio needs physics calculations that can be taxing if done on the GPU, along with convolution reverb and other calculations that affect the received sound.

The Tempest is the next level of 3D audio, true 3D audio, something never heard of in gaming: doing hundreds of raytraced sound sources, up to giving every droplet of rain its own sound to create a more immersive experience, and such accuracy is much needed for VR first.

thicc_girls_are_teh_best

Member

How trustworthy is Matt when it comes to hardware? From what I remember he used to know some people at Microsoft. But is he an expert in hardware or is he just accepted as such, because of his mod status at REeeee?

Dunno, but I heard he was a Community Manager or something like that at a 3rd-party studio that has teams working on both systems. But that still leaves a massive amount of guesswork to the validity in some of his statements being taken as authoritative gospel over on Reeee.

What's the studio's name? What's their size? How up-to-date are they on next-gen devkits and APIs? Are they working on the same game for both systems or different games for each? What's the budget like on their titles? Which system's being used as lead platform? Who's their publisher? How much contact does he have with actual devs/programmers? What level of dev/programmer are they? What do they program? Does he speak with artists or writers instead of programmers? Etc. etc.

Nikana

Go Go Neo Rangers!

Ok let's make it clear, from people who know better, it should clear the fog. HRTF 3D audio only needs simple 2.0 channel stereo. Dolby Atmos could probably do that but limited to 32 sources, but they mostly go for licensed headsets which are expensive and use 7.1 channel simulation to make 3D audio. HRTF-based 3D audio doesn't need 5.1/7.1 etc; it works great with basic 2.0 channel, meaning any stereo headphones/headsets, and it's vastly superior and more accurate, especially if you have your HRTF measured or find something close to yours. Such audio needs physics calculations that can be taxing if done on the GPU, along with convolution reverb and other calculations that affect the received sound.

The Tempest is the next level of 3D audio, true 3D audio, something never heard of in gaming: doing hundreds of raytraced sound sources, up to giving every droplet of rain its own sound to create a more immersive experience, and such accuracy is much needed for VR first.

Dolby can support hundreds of sources as well and Atmos headsets do not use simulation if the game/source supports atmos.

And PS5 to be a weaker console than it is now. LOL. Variable frequencies are there to squeeze more power out of the GPU. There is no boost mode in PS5. 2.23 GHz is just the max clock. What the CPU and GPU in the PS5 are doing is acting as, so to say, "load balancers". That's why SmartShift is there. To distribute power WHERE IT IS NEEDED. People are really struggling to realize how a variable clock/power system gets more performance out of a certain hardware than one with fixed clocks/power balancing.

PS5 variable clocks are there to balance the load between the CPU and the GPU in order to eliminate per frame bottlenecks, they're not there because "the system can't handle both at max clocks". Whenever there's a bottleneck in either the CPU/GPU, power can be channeled from either one of them to the other without compromising performance thus making the system more resilient to bottlenecks.

With a system like the Series X, you just throw fixed power at both and if there's a bottleneck in either the CPU or the GPU at a given time, the bottleneck simply occurs. With locked clocks, some power will be left out on a table, it won't be used 100%

Now, of course the Series X still has a more powerful CPU/GPU, that's not up for debate, what I'm trying here to say is that If the system was also designed as a variable clock system with the APU load balancing like the PS5, it would end up being even more powerful than what it currently is precisely because of that.

One correction.

SmartShift on the PS5 goes in one direction only, from the CPU to the GPU.

On notebooks it is a two-way street.

SmartShift on the PS5 is probably just there to compensate in those specific cases where the GPU clock drops: since everything is limited by power consumption, increasing the power supplied to the GPU lets it keep the clock high.

It is a superior solution to a locked clock ... just as "checkerboarding" is a superior solution to "true 4K", as proven by Gears 5.

Variable clocks on the PS5 will be the same as checkerboarding on the PS4 Pro.

MS will try to make a circus on top of that, "true 4K", "true clock" ... And in the end, the next Xbox, if it exists, should use a similar solution, in the same way that the Xbox One X's "true 4K" uses "fake" 4K checkerboarding because it is a superior and smart solution.

Dunno, but I heard he was a Community Manager or something like that at a 3rd-party studio that has teams working on both systems.

I heard that the Earth is flat.

As far as we know he is reliable simply because he repeats what everyone knows: the difference in power is the smallest of all generations.

The problem is that for some who are insecure about the gameplay of the X360, this is not enough, and they need to rave about the power of "hidden numbers" that do not reflect reality and claim the difference will be bigger.

Every generation there is this delusion on the same side: "hidden GPU", "magic cloud" ... now it is the power of a "hidden number" that is greater than the real one. It seems that one side always has serious problems with frustration with reality.

thicc_girls_are_teh_best

Member

Deto

There is no ultimately "superior" solution when it comes to fixed/variable freq and checkerboarding/"true" 4K because these things depend on use conditions. On an 8K, 100" TV you'd much rather want true 4K vs. checkerboarded 1440p, because it'd be a lot easier to notice the resolution difference.

If you have a game with a lot of things going on for a large, continuous period of time stressing out the chips, you'll prefer fixed clocks to variable ones because the latter may eventually need to downclock to ensure power budget isn't exceeded.

Also you can knock it off with the snark, do you want a real discussion or do you just want to do shitty one-liner retorts? You've been doing nothing but fanboying in your posts in this thread, it seems you don't want to genuinely discuss what has been presented through the OP whatsoever. If you knew anything about hardware development you'd know the software needs to be present to best expose those hardware features and maximize their usage for software developers to extract.

The point of discussion around XvA has been WRT its level of tuned fine granularity and discernment for what specific bits of data need be targeted for loading. The fact there is hardware built not just in the SSD I/O pipeline but also the CPU and GPU to facilitate this is indicative of such. Therefore speculating their XvA solution can provide results that are above whatever provided paper figures specify, knowing that their approach and Sony's aren't apples-to-apples, isn't some nefarious leap of logic or delving into "hidden dGPU in power brick" levels of insanity.

You can keep lying to yourself to believe otherwise, but that just makes your dunce hat big enough to poke through the ceiling. And no, that's not gonna impress the ladies either.

prinz_valium

Member

You said PS5 with fixed 2.23 GHz would be stronger. It wouldn't, that's for sure. Mentioned in posts above.

Also PS5 would be weaker because GPU couldn't go above 2 Ghz. Why? Maybe you should rewatch "Road to PS5" and listen what Cerny said.

Of course a PS5 with fixed 2.23GHz would be stronger than a PS5 with variable max 2.23GHz.

How is that even a question?

Is a car faster if it drives 100 MPH all the time or just 80% of the time?

NoMoChokeSJ

Banned

#3 post in the thread is a Xbox fan bringing the PS5 into the equation. #11 is a known Xbox fan making direct comparisons. You would know since you thought he was being "thoughtful".

What's tiresome is Xbox fans always needing the PS5 in the conversation, and then acting like victims when the conversation is about the PS5.

Your post was #714 and the PS5 discussion is still going on. I'm pretty sure what was brought up in posts #3 and #11 was addressed 600+ posts ago. This is EVERY Xbox thread. The same characters turn it into a PS5 thread within a few pages. What is tiresome is the PS5 defenders fucking up every Xbox thread out of weird console insecurity. I'm sure the PS5 will be plenty powerful.. so maybe give it a rest.

Bo_Hazem

Banned

Your post was #714 and the PS5 discussion is still going on. I'm pretty sure what was brought up on post #3 and #11, were probably addressed 600+ posts ago. This is EVERY xbox thread. Same characters turn it into a PS5 thread within a few pages. What is tiresome is the PS5 defenders fucking up every xbox thread because out of weird console insecurity. I'm sure the PS5 will be plenty powerful.. so maybe give it a rest.

I was dragged here by some troll. Avoided last quotation as well to avoid repeating an already fully explained topic. Enjoy your thread.

chilichote

Member

Of course a PS5 with fixed 2.23GHz would be stronger than a PS5 with variable max 2.23GHz

How is that even a question?

Is a car faster if it drives 100 MPH all the time or just 80% of the time?

The thing many don't understand is that the clock rate depends on the amount of work. So when there is no work to be done, the Xbox still clocks at 1825MHz, but the PS5 doesn't have to because it's a waste of energy.

01011001

Banned

The thing is what many don't understand, the clock rate depends on the amount of work. So when there is no work to be done, the Xbox still clocks at 1825MHz, but the PS5 doesn't have to because it's a waste of energy.

that's not why the PS5 has variable clocks, it has these because at intensive loads it will throttle down in order to stay inside a maximum power draw, so the heat output stays in check.

geordiemp

Member

I imagine Sony are doing something like this: up to 80% APU utilisation (just as an example number) you get full clockspeed, but above this you have to start reducing clocks.

Since the thermal and power limit is set at below 100% utilisation you can have higher clocks than if you were setting the clockspeed based on power and thermals at 100% utilisation so it adds performance in the majority of real use cases and only reduces performance in a few edge cases.

With MS they have a system where their thermal and power solution can handle the APU being at 100% utilisation so in the majority of cases they have left clockspeed headroom on the table.

So XSX is now 15 % + 20 % = 35 % more powerful.

You're living in fantasy land. Why can't people just discuss the information and data we have without going la la?

Speak about Hot Chips and the XSX. Why have you got to FUD the PS5 in every post? It's like Timdog.

So the idiots are back on variable clocks, after trying, what was it again, oh yeah, degrading silicon in the PS5, and it melts things and kills your mum.

Cerny must be a liar and we believe in Marlenus, er, Timdog. Jesus.

And the post got a "thoughtful". Embarrassing.

Mr Moose

Member

As a side note I seem to remember the PS4 operating somewhat in the same manner. There's a thread about it here. I'm not sure if it compares directly, though.

PS4's memory subsystem has separate buses for CPU (20Gb/s) and GPU(176Gb/s)

Eurogamer has an interesting article on the process of porting Ubisoft racer The Crew from PC to PS4. In it, developers of the PS4 version speak in detail about the porting process. It's a fascinating read...

GPU can access the full 176GB/s on the PS4. There is Onion+ too.

I think the Series X might be more like the... What GPU was it Nvidia had with "4GB"? GTX 970?

MasterCornholio

Member

GPU can access the full 176GB/s on the PS4. There is Onion+ too.

I think the Series X might be more like the... What GPU was it Nvidia had with "4GB"? GTX 970?

Sounds like a pretty good thing to keep for the PS5. Anyone have any idea if the PS5 kept this?

Journey

Banned

This is one way to look at it. The other is that Microsoft is talking about the box so much because they have no games to showcase the power of the box. Revealing as many details as possible is the only way to build hype when you have nothing else but game pass.

Hot Chips is about.... gam... oh wait, no it's not lol.

No games to showcase the power is borderline trolling now after seeing some of the most impressive games li

GPU can access the full 176GB/s on the PS4. There is Onion+ too.

I think the Series X might be more like the... What GPU was it Nvidia had with "4GB"? GTX 970?

I think the point is we don't know the specifics on how it's dealt with on series X.

Mr Moose

Member

I think the point is we don't know the specifics on how it's dealt with on series X.

10GB at 560GB/s, 6GB at 336GB/s

The GTX 970 has 3.5GB in one partition, operating at 196GB/s, with 512MB of much slower 28GB/s RAM in a second partition. Nvidia's driver automatically prioritises the faster RAM, only encroaching into the slower partition if it absolutely has to. And even then, the firm says that the driver intelligently allocates resources, only shunting low priority data into the slower area of RAM.

It's obviously not going to be THAT extreme, but yeah that's what made me think of the Series X/970 setup being similar.

"Memory performance is asymmetrical - it's not something we could have done with the PC," explains Andrew Goossen. "10 gigabytes of physical memory [runs at] 560GB/s. We call this GPU optimal memory. Six gigabytes [runs at] 336GB/s. We call this standard memory. GPU optimal and standard offer identical performance for CPU, audio and file IO. The only hardware component that sees a difference is the GPU."

In terms of how the memory is allocated, games get 13.5GB in total, which encompasses all 10GB of GPU optimal memory and 3.5GB of standard memory. This leaves 2.5GB of GDDR6 memory from the slower pool for the operating system and the front-end shell. From Microsoft's perspective, it is still a unified memory system, even if performance can vary. "In conversations with developers, it's typically easy for games to more than fill up their standard memory quota with CPU, audio data, stack data, and executable data, script data, and developers like such a trade-off when it gives them more potential bandwidth," says Goossen.
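To make the split quoted above concrete, here is a rough Python sketch of the pool arithmetic. The pool sizes, bandwidths and the 2.5GB OS reservation come from the quoted article; the `effective_bandwidth` model is only an assumption (it treats accesses to the two pools as serialised), since nothing in the thread describes how the hardware actually interleaves them.

```python
# Series X memory pools as quoted above (sizes in GB, bandwidth in GB/s).
POOLS = {
    "gpu_optimal": {"size_gb": 10.0, "bandwidth_gb_s": 560.0},
    "standard": {"size_gb": 6.0, "bandwidth_gb_s": 336.0},
}
OS_RESERVED_GB = 2.5  # taken out of the standard pool, per the article


def game_budget_gb():
    """Memory available to games: all GPU-optimal plus the rest of standard."""
    standard_for_games = POOLS["standard"]["size_gb"] - OS_RESERVED_GB
    return POOLS["gpu_optimal"]["size_gb"] + standard_for_games


def effective_bandwidth(fast_fraction):
    """Average GB/s when `fast_fraction` (0.0 to 1.0) of GPU traffic hits the
    GPU-optimal pool and the remainder hits standard memory.

    ASSUMPTION: accesses to the two pools are serialised, so time per byte is
    a weighted sum. This is a simplification, not a description of the
    real memory controller.
    """
    if not 0.0 <= fast_fraction <= 1.0:
        raise ValueError("fast_fraction must be between 0 and 1")
    fast = POOLS["gpu_optimal"]["bandwidth_gb_s"]
    slow = POOLS["standard"]["bandwidth_gb_s"]
    time_per_gb = fast_fraction / fast + (1.0 - fast_fraction) / slow
    return 1.0 / time_per_gb


print(game_budget_gb())          # total GB available to games
print(effective_bandwidth(0.5))  # half the traffic in each pool
```

Under this simplified model, the more GPU traffic a game keeps in the 10GB pool, the closer it gets to the 560GB/s figure, which fits Goossen's point that the standard quota is meant for CPU, audio and script data.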

It sounds like a somewhat complex situation, especially when Microsoft itself has already delivered a more traditional, wider memory interface in Xbox One X - but the notion of working with much faster GDDR6 memory presented some challenges. "When we talked to the system team there were a lot of issues around the complexity of signal integrity and what-not," explains Goossen. "As you know, with the Xbox One X, we went with the 384[-bit interface] but at these incredible speeds - 14gbps with the GDDR6 - we've pushed as hard as we could and we felt that 320 was a good compromise in terms of achieving as high performance as we could while at the same time building the system that would actually work and we could actually ship."
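The 560GB/s and 336GB/s figures follow from the bus width and the 14Gbps data rate Goossen mentions. A small sketch of that arithmetic is below. Note the assumptions: the 192-bit width for the standard pool (six 32-bit chips) and the PS4's 256-bit bus at 5.5Gbps are taken from public specs, not from this thread.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak bandwidth in GB/s: every pin moves `data_rate_gbps` gigabits
    per second, and 8 bits make a byte."""
    return bus_width_bits * data_rate_gbps / 8


# Series X: full 320-bit interface at 14 Gbps (both numbers from the quote).
series_x_fast = peak_bandwidth_gb_s(320, 14)

# ASSUMPTION: the slower 6GB region only spans six 32-bit chips (192 bits).
series_x_standard = peak_bandwidth_gb_s(6 * 32, 14)

# ASSUMPTION: PS4 uses a 256-bit GDDR5 bus at 5.5 Gbps (public spec,
# included only as a cross-check against the 176GB/s quoted earlier).
ps4 = peak_bandwidth_gb_s(256, 5.5)

print(series_x_fast, series_x_standard, ps4)
```

If the 192-bit assumption holds, the "slow" pool is not slower memory as such; it is the same GDDR6 reached over fewer channels.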

Dodkrake

Banned

So XSX is now 15 % + 20 % = 35 % more powerful.

You're living in fantasy land. Why can't people just discuss the information and data we have without going la la?

Speak about Hot Chips and XSX. Why have you got to FUD PS5 in every post? It's like Timdog.

So the idiots are back on variable clocks, after trying, what was it again, oh yeah, degrading silicon in PS5, and it melts things and kills your mum.

Cerny must be a liar and we believe in Marlenus, er, Timdog. Jesus.

And the post got a Thoughtful, embarrassing.

Just wait until the Xbox brigade finds out that a chip, with either variable or sustained clocks, does not turn on all its transistors in real world scenarios, only in synthetic benchmarks.

Oh boy this gen will be fun.

Journey

Banned

10GB at 560GB/s, 6GB at 336GB/s

It's obviously not going to be THAT extreme, but yeah that's what made me think of the Series X/970 setup being similar.

Right, we know it's 10GB at 560GB/s and 6GB at 336GB/s, but we don't know the details of the arrangement.

Kagey K

Banned

So XSX is now 15 % + 20 % = 35 % more powerful.

You're living in fantasy land. Why can't people just discuss the information and data we have without going la la?

Speak about Hot Chips and XSX. Why have you got to FUD PS5 in every post? It's like Timdog.

So the idiots are back on variable clocks, after trying, what was it again, oh yeah, degrading silicon in PS5, and it melts things and kills your mum.

Cerny must be a liar and we believe in Marlenus, er, Timdog. Jesus.

And the post got a Thoughtful, embarrassing.

WatDat

Banned

The variable clocks discussion is tiring and pointless without knowing what the sustained clocks are.

You have max clocks and sustained clocks. That means the PS5 frequencies are variable but capped at 3.5 GHz for the CPU and 2.23 GHz for the GPU. Those are the official, max frequencies. The 100%.

Some posters here imply that variable frequencies allow the CPU and GPU to go beyond 3.5 GHz and 2.23 GHz. They won't and they can't. That's also why I don't like Matt's wording of "they are variable to get more performance out of the hardware". That IS the performance of the hardware.

In other words, the CPU's 100% is 3.5 GHz and the GPU's 100% is 2.23 GHz.

Any variation below that will have the CPU run at [100%-A%] and the GPU run at [100%-B%], where A and B can differ per scene.

That's why it's so important to know what the PS5's sustained/base clocks are if developers choose not to vary them.

Oversimplified, this means that for the PS5:

CPU Clock = X GHz + CPUvar ≤ 3.5 GHz

GPU Clock = Y GHz + GPUvar ≤ 2.23 GHz

Where:

X = base/sustained/minimum clock speed of the CPU

Y = base/sustained/minimum clock speed of the GPU

CPUvar = variable clock range of the CPU

GPUvar = variable clock range of the GPU

We don't know what X and Y are and we don't know what the conditions for CPUvar and GPUvar are.

Sony's SmartShift technology dictates CPUvar and GPUvar based on workload. But we don't know from which setpoint.
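The model in the post above can be written down as a tiny Python sketch. The caps (3.5 GHz and 2.23 GHz) are the official maximums cited in the post. The base clocks used in the example are purely hypothetical placeholders, because X and Y are exactly the values nobody in the thread knows.

```python
from dataclasses import dataclass

CPU_MAX_GHZ = 3.5   # official cap, from the post
GPU_MAX_GHZ = 2.23  # official cap, from the post


@dataclass
class ClockDomain:
    base_ghz: float  # X or Y in the post: UNKNOWN, supplied by the caller
    max_ghz: float   # the 100% figure

    def clock(self, var_ghz):
        """base + variable part, never above the cap."""
        if var_ghz < 0:
            raise ValueError("the variable part is added on top of the base")
        return min(self.base_ghz + var_ghz, self.max_ghz)

    def percent_of_max(self, var_ghz):
        """Current clock as a percentage of the cap."""
        return 100.0 * self.clock(var_ghz) / self.max_ghz


# HYPOTHETICAL base clock of 2.0 GHz, chosen only to exercise the formula.
gpu = ClockDomain(base_ghz=2.0, max_ghz=GPU_MAX_GHZ)
print(gpu.clock(0.1))           # below the cap
print(gpu.clock(0.5))           # clamped to the cap
print(gpu.percent_of_max(0.1))  # percentage of the 2.23 GHz maximum
```

Whatever the real base values turn out to be, the clamp is the point: no amount of variable headroom pushes the result past the cap.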

Damn.. You’re probably going to get reported up the bunghole for such a sensible post on this topic.

Godspeed.

TheGreatWhiteShark

Member

/enters xbox hot chips thread

/sees misinformation about dolby, and apotheosis of stupid variable clocks

dont worry playstation-only fellas. I am sure you will survive

jimbojim

Banned

that's not why the PS5 has variable clocks, it has these because at intensive loads it will throttle down in order to stay inside a maximum power draw, so the heat output stays in check.

Power consumption drop is not a performance drop in games.

jimbojim

Banned

Is a car faster if it drives 100 MPH all the time or just 80% of the time?

How about a max speed at highest rpm? Let's see then how long the machine will last.

WatDat

Banned

/enters xbox hot chips thread

/sees misinformation about dolby, and apotheosis of stupid variable clocks

dont worry playstation-only fellas. I am sure you will survive

‘member when TFLOPS USED to matter?

jimbojim

Banned

I mentioned this on the previous page and will mention it here. Some are deliberately ignoring it. Why PS5's variable clocks are better than "PS5 would be stronger with fixed clocks":

Sony did tell us how their design works. The thing you're missing is that the PS5 approach is not just letting clocks be variable, like uncapping a framerate. That would indeed have no effect on the lowest dips in frequency. But they've also changed the trigger for throttling from temperature to silicon activity. And that actually changes how much power can be supplied to the chip without issues. This is because the patterns of GPU power needs aren't straightforward.

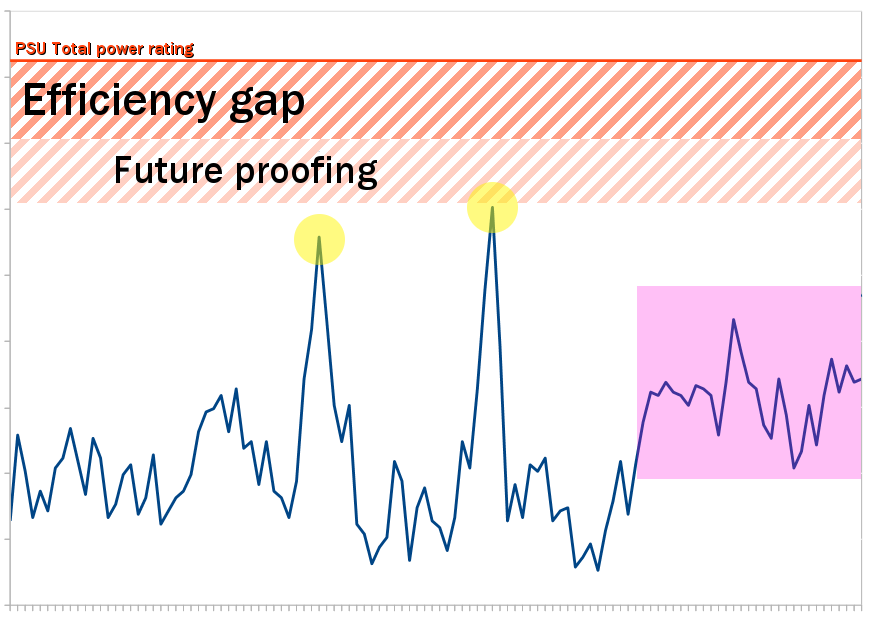

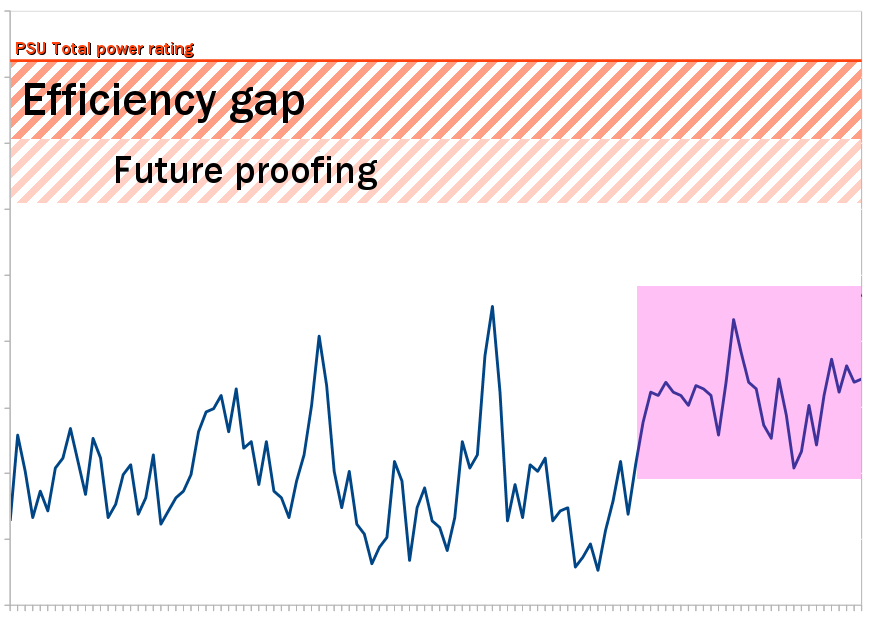

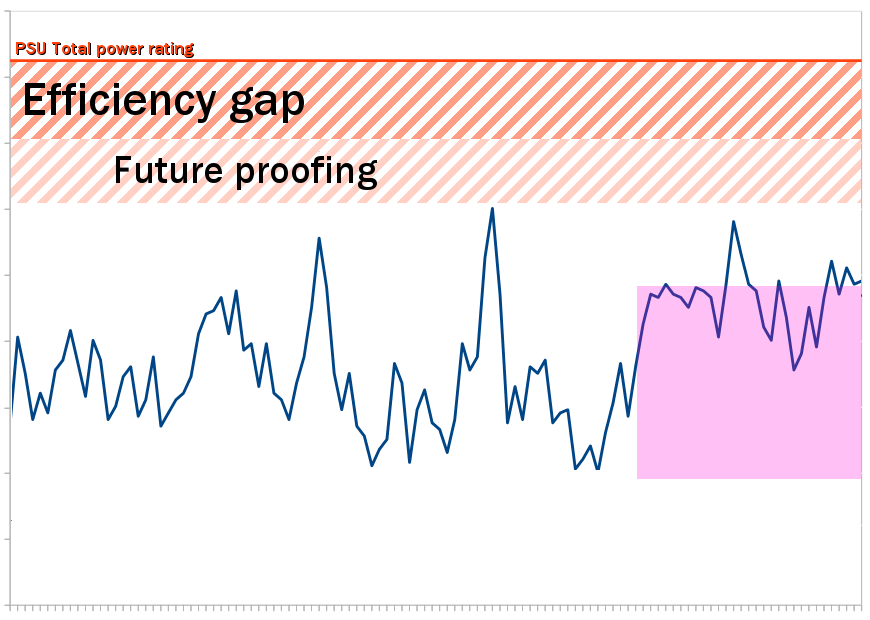

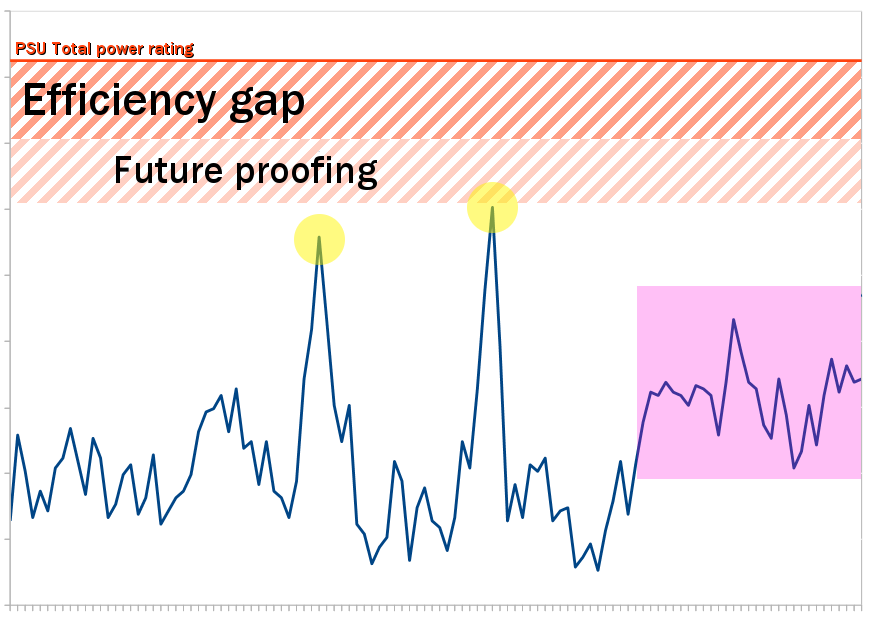

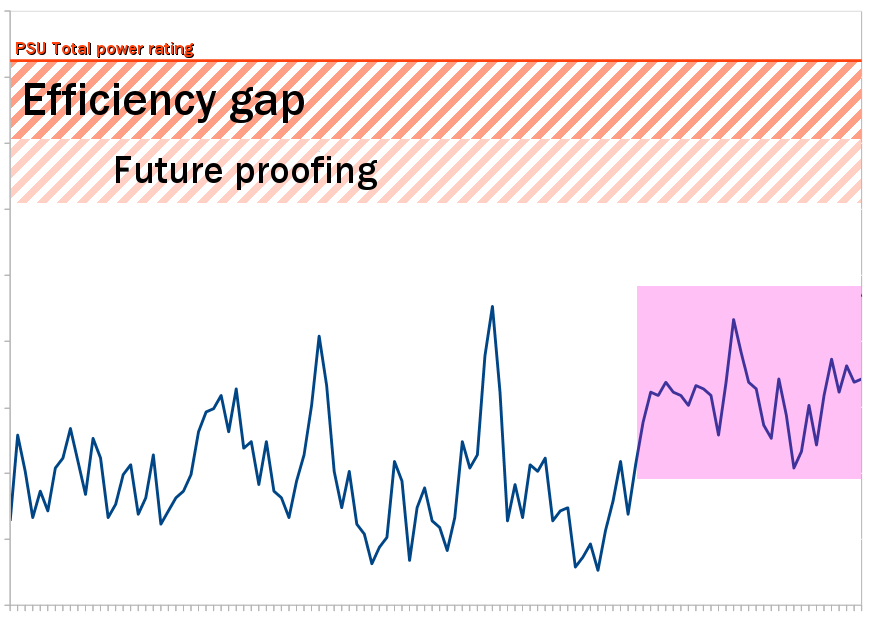

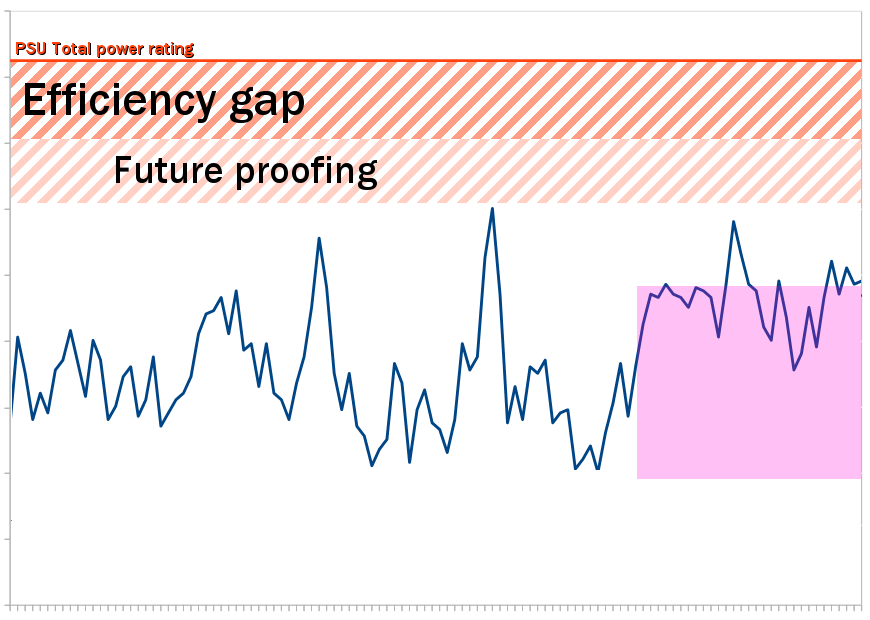

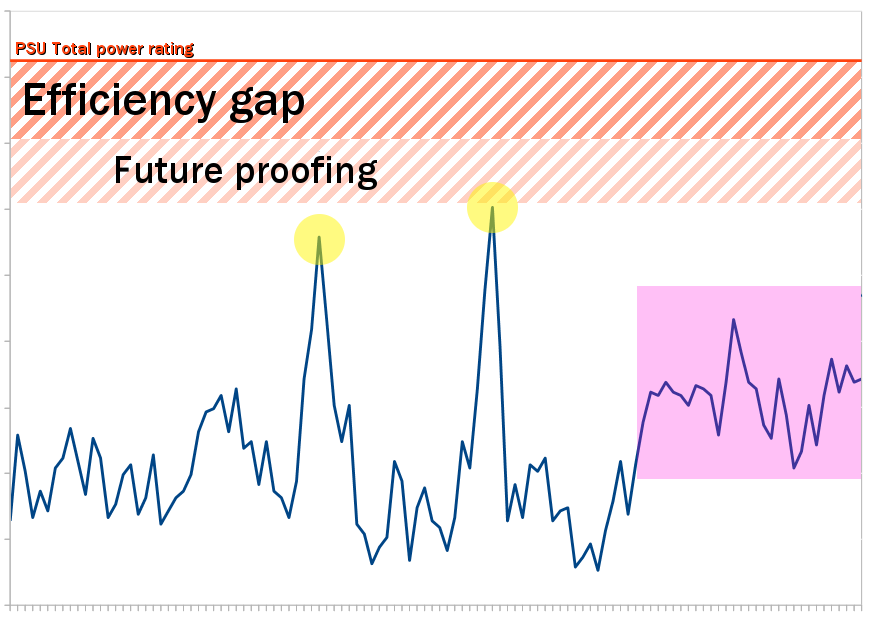

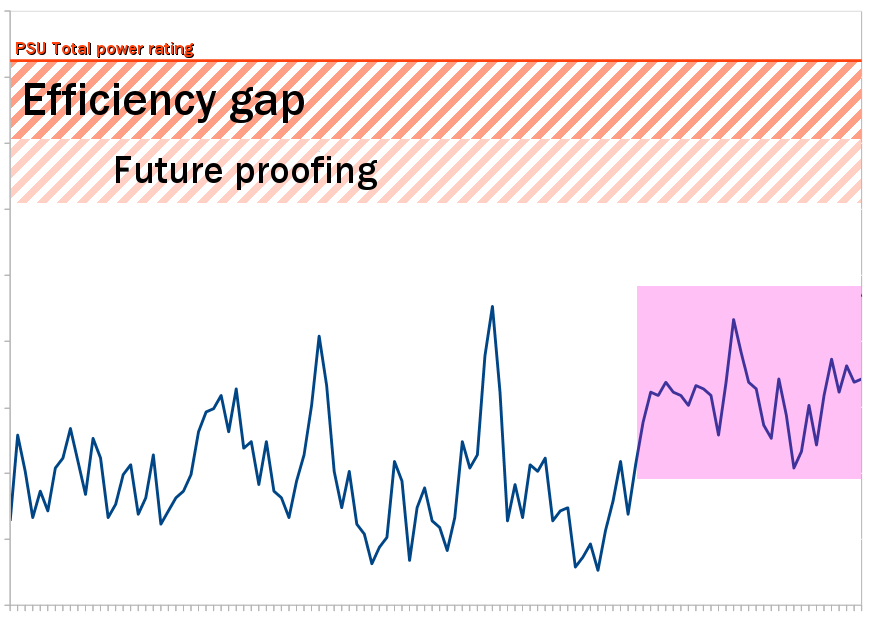

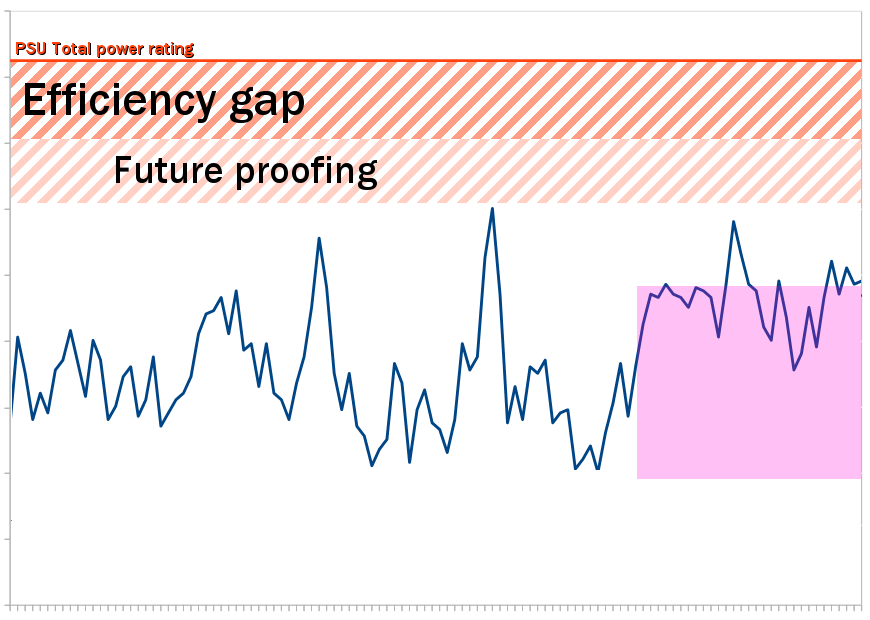

Here's a depiction of the change. (This is not real data, just for illustrative purposes of the general principle.) The blue line represents power draw over time, for profiled game code. The solid orange line represents the minimum power supply that would need to be used for this profile. Indeed, actual power draw must stay well below the rated capacity. Power supplies function best when actually drawing ~80% of their rating. And when designing a console the architects, working solely from current code, will build in a buffer zone to accommodate ever more demanding scenarios projected for years down the line.

You'd think the tallest peaks, highlighted in yellow, would be when the craziest visuals are happening onscreen in the game: many characters, destruction, smoke, lights, etc. But in fact, that's often not the case. Such impressive scenes are so complicated, the calculations necessary to render them bump into each other and stall briefly. Every transistor on the GPU may need to be in action, but some have to wait on work, so they don't all flip with every tick of the clock. So those scenes, highlighted in pink, don't contain the greatest spikes. (Though note that their sustained need is indeed higher.)

Instead, the yellow peaks are when there's work that's complex enough to spread over the whole chip, but just simple enough that it can flow smoothly without tripping over itself. Unbounded framerates can skyrocket, or background processes cycle over and over without meaningful effect. The useful work could be done with a lot less energy, but because clockspeed is fixed, the scenes blitz as fast as possible, spiking power draw.

Sony's approach is to sense for these abnormal spikes in activity, when utilization explodes, and preemptively reduce clockspeed. As mentioned, even at the lower speed, these blitz events are still capable of doing the necessary work. The user sees no quality loss. But now behind the scenes, the events are no longer overworking the GPU for no visible advantage.

But now we have lots of new headroom between our highest spikes and the power supply buffer zone. How can we easily use that? Simply by raising the clockspeed until the highest peaks are back at the limit. Since total power draw is a function of number of transistors flipped, times how fast they're flipping, the power drawn rises across the board. But now, the non-peak parts of your code have more oomph. There's literally more computing power to throw at the useful work. You can increase visible quality for the user in all the non-blitz scenes, which is the vast majority of the game.

Look what that's done. The heaviest, most impressive scenarios are now closer to the ceiling, meaning these most crucial events are leaving fewer resources untapped. The variability of power draw has gone down, meaning it's easier to predictively design a cooling solution that remains quiet more often. You're probably even able to reduce the future proofing buffer zone, and raise speed even more (though I haven't shown that here). Whatever unexpected spikes do occur, they won't endanger power stability (and fear of them won't push the efficiency of all work down in the design phase, only reduce the spikes themselves). All this without any need to change the power supply, GPU silicon, or spend time optimizing the game code.

Keep in mind that these pictures are for clarity, and specifics about exactly how much extra power is made available, how often and far clockspeed may dip, etc. aren't derivable from them. But I think the general idea comes through strongly. It shows why, though PS5's GPU couldn't be set to 2GHz with fixed clocks, that doesn't necessarily mean it must still fall below 2 GHz sometimes. Sony's approach changes the power profile's shape, making different goals achievable.

I'll end with this (slowly) animated version of the above.
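For anyone who prefers numbers to pictures, here is a toy Python version of the argument above. Like the illustrations, it uses made-up data. It assumes power is simply activity times clock, as the post describes ("number of transistors flipped, times how fast they're flipping"), and ignores voltage, which matters a lot on real silicon. All names and values are hypothetical.

```python
def fixed_clock(activity, power_budget):
    """Highest single clock that keeps every sample inside the budget.
    The worst spike alone decides the clock for the whole trace."""
    if min(activity) <= 0:
        raise ValueError("activity samples must be positive")
    return power_budget / max(activity)


def variable_clocks(activity, power_budget, clock_cap):
    """Per-sample clock: run at the cap unless this sample's activity
    would push power over the budget, in which case back off."""
    if min(activity) <= 0:
        raise ValueError("activity samples must be positive")
    return [min(clock_cap, power_budget / a) for a in activity]


def power(activity, clocks):
    """Toy power model: activity (fraction of transistors flipping) x clock."""
    return [a * f for a, f in zip(activity, clocks)]


# Made-up trace: 1.0 is a "blitz" spike, 0.7 is a heavy, impressive scene.
activity = [0.5, 0.6, 1.0, 0.7, 0.55]
budget = 1.0  # arbitrary units
cap = 1.25    # hypothetical raised clock, relative to the fixed one

f_fixed = fixed_clock(activity, budget)
f_var = variable_clocks(activity, budget, cap)

print(f_fixed)                                 # one clock for everything
print(f_var)                                   # only the spike is throttled
print(power(activity, [f_fixed] * len(activity)))
print(power(activity, f_var))                  # still within budget
```

In this toy trace only the spike sample runs below the cap; every other sample, including the heavy scene, gets the higher clock while total power never exceeds the budget.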

PlayStation 5 System Architecture Deep Dive |OT| Secret Agent Cerny OT

Insane Metal

Gold Member

How trustworthy is Matt when it comes to hardware? From what I remember he used to know some people at Microsoft. But is he an expert in hardware or is he just accepted as such, because of his mod status at REeeee?

When he posted here he worked at a third party studio. And since I was here all the time I remember him getting things right and being unbiased. Back in 2012/2013 before PS4 and XBO launch.

VideodromeX

Member

/enters xbox hot chips thread

/sees misinformation about dolby, and apotheosis of stupid variable clocks

dont worry playstation-only fellas. I am sure you will survive

Totally agree with you, it's getting tiresome

TBiddy

Member

#3 post in the thread is a Xbox fan bringing the PS5 into the equation. #11 is a known Xbox fan making direct comparisons. You would know since you thought he was being "thoughtful".

The first few responses were rather tactful. It started to go downhill at page two already, when the conversation started to revolve around variable clocks for some reason. 15 pages later, and a guy marked with a "report me for trolling MS threads" is still fighting the war for Sony. The post I marked "Thoughtful" was just that, by the way.

I was dragged here by some troll. Avoided last quotation as well to avoid repeating an already fully explained topic. Enjoy your thread.

15 pages later, and you're still angry that I laughed at your old mistake? Get over it, already.

When he posted here he worked at a third party studio. And since I was here all the time I remember him getting things right and being unbiased. Back in 2012/2013 before PS4 and XBO launch.

That's what I remember as well, but I have no recollection of him being an authority on hardware.

TheGreatWhiteShark

Member

‘member when TFLOPS USED to matter?

sure do

https://www.playstationlifestyle.ne...-resolution-is-the-1-reason-people-buy-a-ps4/

but that ticket expired a long time ago.

now it's all about dat sound fidelity

VideodromeX

Member

I mentioned this on the previous page and will mention it here. Some are deliberately ignoring it. Why PS5's variable clocks are better than "PS5 would be stronger with fixed clocks":

Ok, PS5 has 18TF and dual GPU, now settle down

TheGreatWhiteShark

Member

How about a max speed at highest rpm? Let's see then how long the machine will last.

dude, it's the same gpu/cpu combo. only ps5 has less

so its like one car on the racetrack revs up to factory rev limit,

while the other, similar car with the smaller engine, went to the neighborhood tuner and put the rev limit 4.050rpm higher

because it wants to compete with the car that has 44% more displacement

go on, ask mr. yamauchi the race driver to tell you what the outcome will be

now please stop talking about ps5 in a hot chips xbox thread

there might be some useful info about xbox to be shared here

geordiemp

Member

sure do

https://www.playstationlifestyle.ne...-resolution-is-the-1-reason-people-buy-a-ps4/

but that ticket expired a long time ago.

now it's all about dat sound fidelity

Why do you think XSX will have a better resolution? You realise TF is just the computational capacity of the vector ALU. There is more to it than that. You can crow when XSX runs something much better.

And why can't you guys just talk XSX and Hot Chips without having to talk about trying to beat Sony in every damn post....
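For reference, the TF figure really is just vector ALU arithmetic, as a short Python sketch shows. The assumptions here: 64 lanes per compute unit and 2 FLOPs per lane per clock (one fused multiply-add) are the usual RDNA figures, and the CU counts (52 and 36) and the 1.825 GHz Series X clock come from public specs rather than this page of the thread. The 2.23 GHz PS5 cap is the one discussed above.

```python
def peak_tflops(compute_units, clock_ghz, lanes_per_cu=64, flops_per_lane=2):
    """Theoretical peak FP32 TFLOPS of the vector ALUs only.
    Says nothing about bandwidth, caches, geometry or anything else."""
    gflops = compute_units * lanes_per_cu * flops_per_lane * clock_ghz
    return gflops / 1000.0


# ASSUMPTION: CU counts and the Series X clock are from public specs.
xsx = peak_tflops(52, 1.825)
ps5 = peak_tflops(36, 2.23)  # at the capped maximum clock

print(round(xsx, 2), round(ps5, 2))
print(round((52 / 36 - 1) * 100))  # % more CUs, the "displacement" above
print(round((xsx / ps5 - 1) * 100))  # % more peak TFLOPS
```

The gap in CU count is much larger than the gap in peak TFLOPS because the higher PS5 clock claws part of it back, and neither number says how a real game will run.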