BluRayHiDef


Does anyone think that the benchmark results will hold up when independent reviewers get a hold of the cards? Also, how bad do you think the ray tracing benchmarks will be, particularly in Control?

Clock speeds are key to RDNA 2.

I wonder which APU runs at RDNA 1 speeds.

Official benchmarks will always be higher than actual performance once the cards are given to reviewers, whether it's AMD, Nvidia, Intel, etc. I honestly don't even want to think about how Control will play with RT, as it's pointless without DLSS or an alternative. With AMD's GPUs, I'd imagine you could only play at a lower resolution with RT enabled, or at a high resolution with no RT, but not both.

I'll tell you this: few people give a shit about ray tracing. Most will gladly turn it off to get the performance.

But the performance is better. Look at Watch Dogs: Legion, which just came out. It's extremely heavy on hardware at max settings and can't be played at native 4K/60 on anything. But Nvidia has DLSS alongside ray tracing, which lets the game run at 4K/60. AMD will launch its $1,000 card, and it will have to play this game at 1440p, without ray tracing, to be playable.

It really is. I let it be known a while back that I would switch back to AMD if they nail RT performance and have an answer to DLSS.

In this topic I learned that the red team vs. green team war is as hilarious as the console war.

Please continue.

I was not expecting this, but the cheapest RDNA 2 card is $579. People buying high end would definitely want good RT, though.

Can someone clarify how 8 additional CUs from the 6800 XT to the 6900 XT can make up for the difference in performance and pricing while staying at the same wattage?

Binned chips, most likely.

Where is the benchmark for the AMD cards running this on par or better?

Article: The Big Ferocious GPU Cannot Maintain 60 FPS in 4K When Running Watch Dogs: Legion With Ultra Settings...Without Ray Tracing!

https://www.dsogaming.com/pc-performance-analyses/nvidia-geforce-rtx3090-cannot-run-watch-dogs-legion-with-constant-60fps-in-4k-ultra/amp/

Who would've thought that a graphics card that costs a whopping $1500 and has an astounding...


The point is that using that game as an argument is just pointless; it's an Ubisoft game. It will run like shit on everything.

True, but what matters is where the game will run the least shitty.

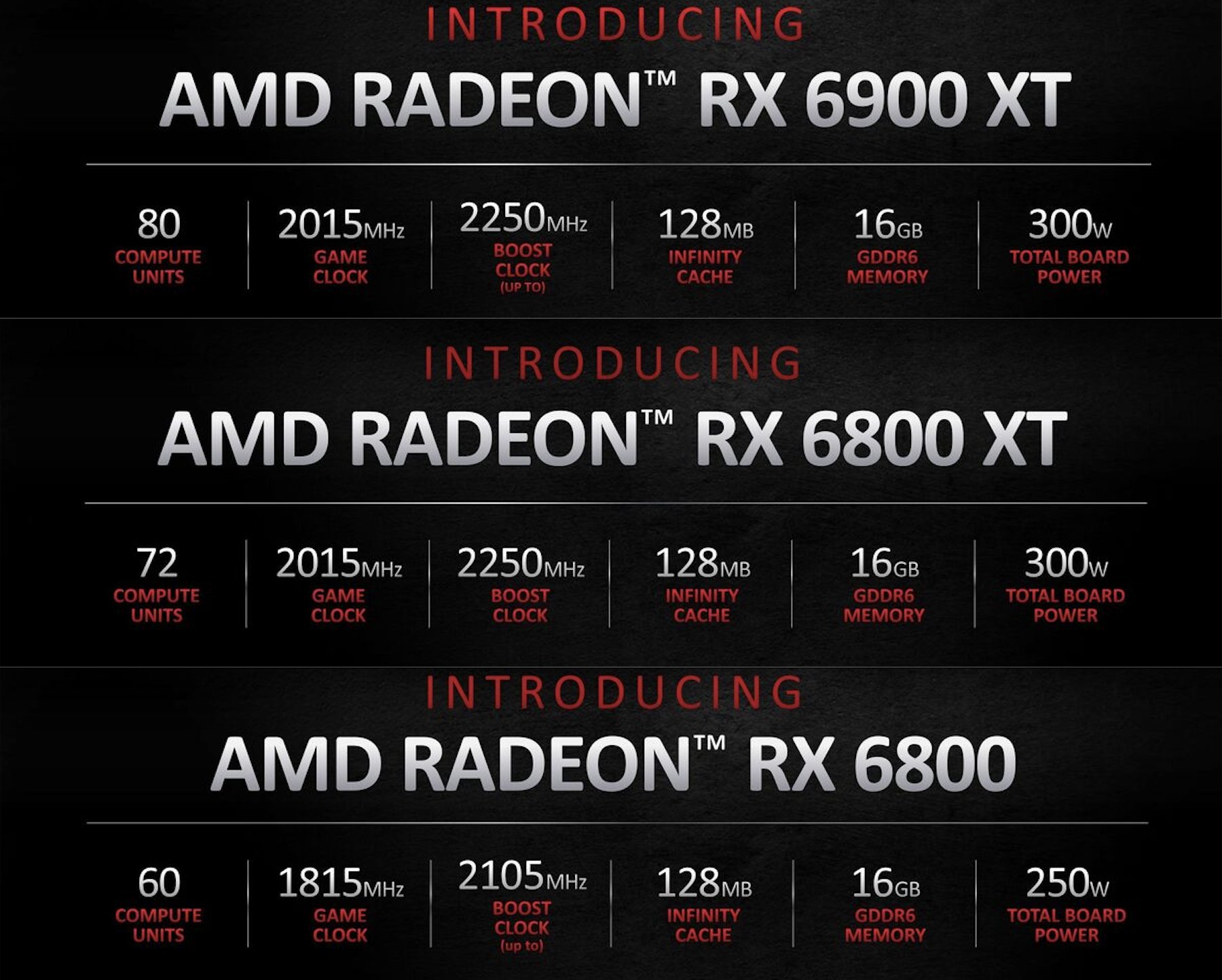


There was a rumor (which this reveal aligns well with) from someone who said game benchmarks had been shown to him (6800 or 6800 XT, I don't remember). He said that, RT-wise, that card was on par with the 2080 Ti.

Cerny worked on the PS5 and RDNA 2 at the same time, licensing AMazeDtek back to AMD.

Fuck RT until the frame rates pick up significantly. DLSS is a shitty, dumb holdover because they were scared of getting pipped at high resolutions.

How many times are you going to tell GAF that you have both cards?

I have both an RTX 3080 and an RTX 3090, and I regret NOTHING. DLSS makes playing games at 4K with ray tracing enabled a viable option, and AMD's RX 6000 Series doesn't have an equivalent to it yet.

Furthermore, there are a dozen upcoming games that will feature ray tracing and DLSS.

RTX ON: A Dozen More Games Will Have Ray Tracing and DLSS This Year

Today’s biggest blockbuster titles and indie games are RTX ON. Check out this Holiday’s upcoming games adopting ray tracing and DLSS, including Cyberpunk 2077, Call of Duty: Black Ops Cold War, and Watch Dogs: Legion.

I'm very interested in that 6800 XT, and AMD has 2-3 months to show me their version of DLSS and convince me to buy their GPU. I don't care about RTX, but I do care about getting 4K with 30-40% fewer resources that I can use for other things, you know, unimportant stuff like frame rate and details.

Looking further, though, I think a bunch of people in here are rooting for AMD because of the console wars, as many of these guys don't even game on PC.

But an AIB 3090 is about 10% faster than the 3080 FE.

Taking that into consideration, I think the 6800 and 6800 XT should be priced lower. The 6900 XT, however, is $500 cheaper than the 3090, so I can't complain.

No RT-specific hardware, and no Tensor hardware equivalent either. Without those, AMD needed to blow Nvidia out of the water for it to really be close.

Nice. Too expensive and a shame on PC.

Ridiculous post. DLSS provides image quality that matches a target resolution while rendering at a lower resolution, and the resulting boost in frame rate is immense. Control at native 4K with ray tracing fully enabled runs at about 25 frames per second on an RTX 3080; with DLSS Quality Mode, however, the frame rate jumps to the mid-50s and low 60s.
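A rough back-of-envelope check of those DLSS figures, as a minimal Python sketch. It assumes DLSS Quality Mode renders at two-thirds of the output resolution per axis (2560x1440 internal for a 3840x2160 output) and reads "mid-50s and low 60s" as 55 and 62 fps; those readings are assumptions, not measurements, and the helper names are made up for illustration.

```python
# Back-of-envelope check of the DLSS numbers quoted in the post.
# Assumptions (not from the post): Quality Mode renders at 2/3 of the
# output resolution per axis, and "mid-50s / low 60s" = 55 / 62 fps.

def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at a given resolution."""
    return width * height


def speedup(native_fps: float, dlss_fps: float) -> float:
    """Frame-rate multiplier gained by switching from native to DLSS."""
    return dlss_fps / native_fps


native_4k = pixel_count(3840, 2160)   # output resolution
internal = pixel_count(2560, 1440)    # assumed Quality Mode render resolution
pixel_ratio = native_4k / internal    # how many times fewer pixels are rendered

# ~25 fps at native 4K with RT on an RTX 3080, per the post.
low = speedup(25, 55)
high = speedup(25, 62)

print(f"pixels rendered: {pixel_ratio:.2f}x fewer")
print(f"frame-rate gain: {low:.1f}x to {high:.1f}x")
```

The frame-rate gain lands close to the 2.25x reduction in rendered pixels, which fits the post's point; it's only a sanity check, since DLSS itself adds some per-frame cost.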

I doubt AMD will be able to produce enough cards to satisfy demand anyway, so nobody needs you to leave the green reality distortion field.

Even without Zen 3 synergy and OC, the 6900 XT beats the 3090. Why would anyone pay $999 for a more power-hungry card with 4GB less RAM?

The real 6900 XT competitor will be a 3080 Ti 12GB at ~$999.

The 6800 is 18% faster than the 3070 and has twice the RAM, so why can't it cost $79 more?
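To put numbers on that argument, here is a minimal Python sketch of performance per dollar. It takes the $579 RX 6800 price quoted earlier in the thread, infers the 3070 price as $579 - $79 = $500 from this post, and treats the poster's +18% figure as if it held uniformly across games; all three inputs are the thread's claims, not measured data.

```python
# Hypothetical perf-per-dollar check of the "18% faster, $79 more" argument.
# Assumptions: RX 6800 at $579 (quoted earlier in the thread), RTX 3070 at
# $579 - $79 = $500, and a flat +18% performance advantage for the 6800.

price_6800 = 579
price_3070 = price_6800 - 79        # $500
perf_3070 = 1.00                    # baseline
perf_6800 = 1.18                    # "18% faster"

price_premium = price_6800 / price_3070 - 1   # fraction more expensive
value_6800 = perf_6800 / price_6800           # performance per dollar
value_3070 = perf_3070 / price_3070
value_ratio = value_6800 / value_3070         # > 1 means better value than the 3070

print(f"price premium: {price_premium:.1%}")
print(f"perf per dollar vs 3070: {value_ratio:.3f}x")
```

A ratio just above 1 means the 6800 would deliver slightly more performance per dollar than the 3070 at those prices, before counting the extra VRAM.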

The Ti's incoming.

The 6800 XT is faster than the 3080, has 6GB more RAM and... is even cheaper, despite the 3080's availability issues.

Why would AMD price stuff even lower?

On top of that, AMD seems to still have OC headroom, at least on the 6800 series, unlike Ampere.

Even without OC, the Fury X was matching the 980 Ti at resolutions above 1080p.

It's $500 less than the RTX 3090? It uses less energy, hits higher clocks, and will go higher than game boost on top of the optimizations they showed if you're using a Ryzen 5000 CPU. Add all of that extra performance up.

But you won't.

Yes.

Can someone TL;DR this for me?

Was my 3080 FE a good purchase at $700? I even sold Watch Dogs 2 for $30, so the 3080 FE cost me $670.


Depends on the game. When it's done well, it stabilizes all aliasing incredibly well.

I think DLSS is nice in theory, but when I use it, the image just looks... weird. I am basing this on my experience with Wolfenstein: Youngblood and Control... but mostly Youngblood. Maybe it's a title-by-title sort of thing.

Can someone TL;DR this for me?

Was my 3080 FE a good purchase at $700? I even sold Watch Dogs 2 for $30, so the 3080 FE cost me $670.

Btw, I still have a year's subscription to GeForce Now if someone wants to buy it.

Even without Zen 3 synergy and OC, the 6900 XT beats the 3090. Why would anyone pay $999 for a more power-hungry card with 4GB less RAM?

I mean, green fanboys would, but they would no matter what anyhow, so who cares.

Yes, it definitely happens. Nvidia has been dominant for a long time, so many people get caught up in supporting only them, even when the competition helps bring about better prices and better performance.

The simple fact that you need DLSS to play with ray tracing decently should tell you that (at least right now) it's not a feature that matters to many people, because it's too expensive in terms of performance loss.


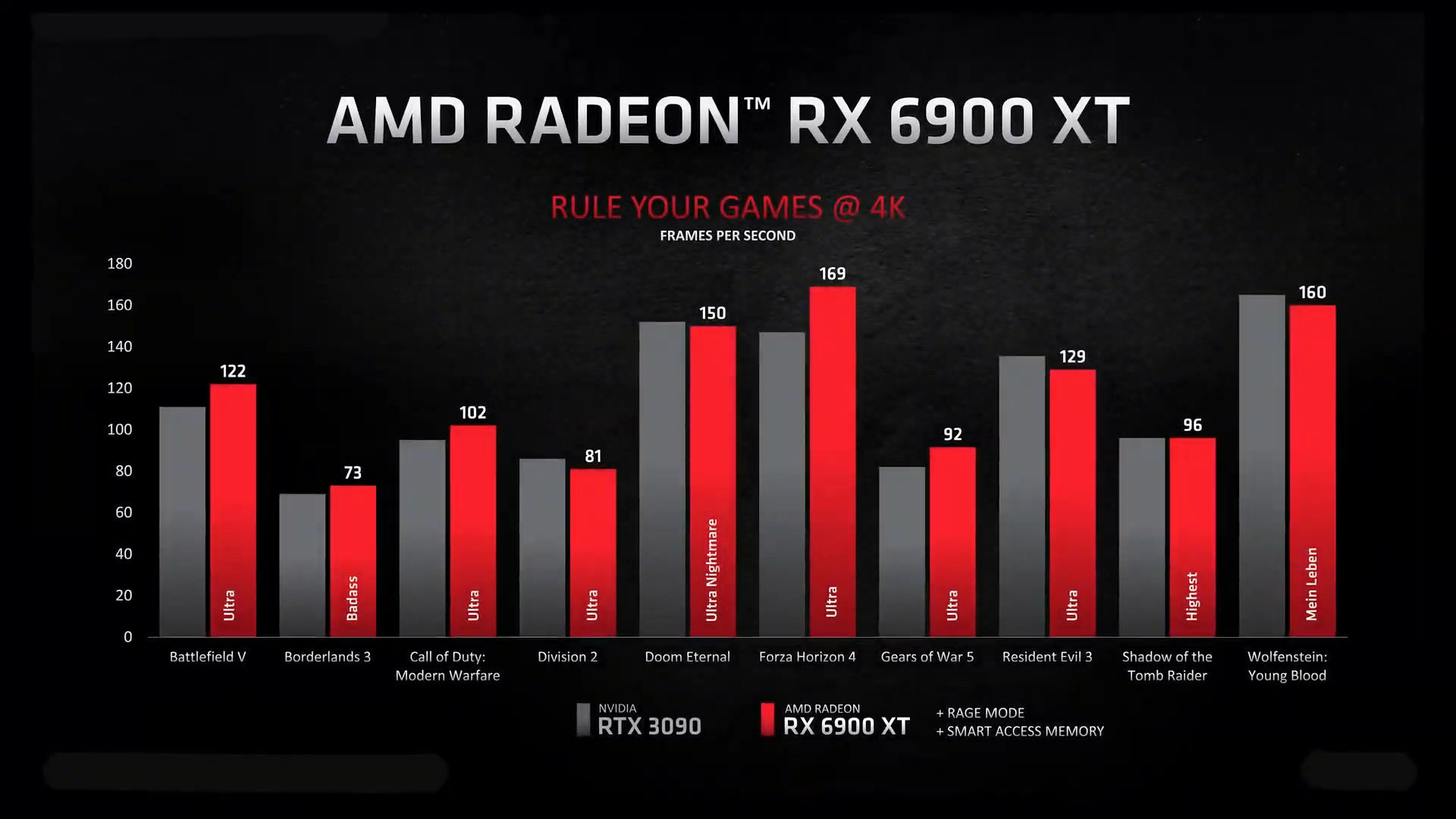

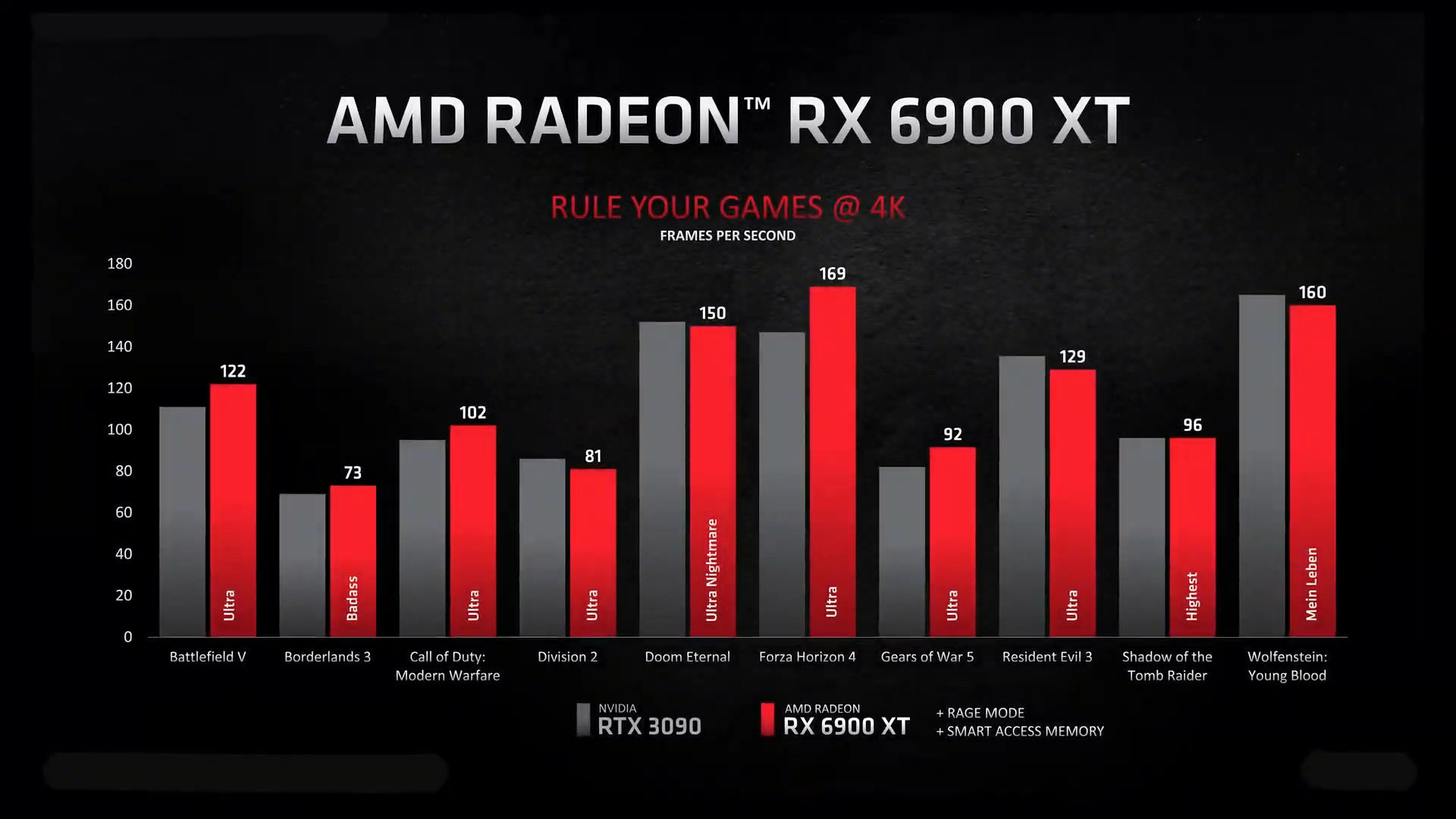

"+Rage Mode/+SAM" is overclock/Zen 3 synergy, so this is the best case (plus AMD-picked games). Expect the claimed 300W to be more like ~330-350W for these results, which is the same as an RTX 3090. AMD giving no figures at all for RT performance (this means it's behind Nvidia) is a good reason to buy an Ampere card over the similarly priced RDNA 2 card, not that I'd expect you to recognize that.

Until I see RT performance, I'm holding my breath. Not buying a $1k card if it can't play RT games at 90% of Nvidia's offerings.

Not an AMD fanboy, but I will go out on a limb and say that their future super sampling offering will allow the 6800 XT to grow into an even greater card than it already is. I am not too concerned about RT performance right now, and by the time this new SS is offered, it will gain a much-needed performance boost in RT-intensive games.

The fact that they mentioned working on this and future technology with MS (DirectX 12 Ultimate) to make games load faster and run smoother gives me the confidence to go team red this time.

It's 10GB GDDR6X vs. 16GB GDDR6. I wonder which will matter more... the 3080 will probably be too slow by the time games require that much VRAM anyway.

Nvidia:

- DLSS: more performance in games that use it

- better ray tracing performance

- driver stability and support in games

- more heat

- probably bigger

- bigger power supply needed (more energy consumption)

- low VRAM, not next-gen proof: 8GB/10GB (the 3090 doesn't have this issue)

- good cooler

AMD:

- less heat

- a bit cheaper

- next-gen, future-proof VRAM: 16GB/16GB/16GB

- driver stability unknown

- a bit faster

- good cooler

- lower ray tracing performance

- no DLSS alternative atm

- probably a bit smaller

That's it so far.

For the higher end, the only next-gen GPU on the market that makes sense right now is the 6800 XT.