It isn't, in many cases, when you factor out Windows and DirectX and use Proton/Linux instead.

Just as AMD CPUs were second-class citizens to Intel on Windows for years, and only now, with immense levels of compute and massive CPU caches, is it becoming harder for the Wintel MO to play out as normal. When Microsoft redeveloped DirectX for the original DirectX Box (Xbox), Nvidia provided not only the GPU but also Nvidia Cg, which used HLSL as a unified shader language for both Cg and DirectX IIRC, and it has been that way ever since. Nvidia's hand in DirectX makes them a first-class citizen for the API (which, as a hardware-agnostic API, is inferior to OpenGL, Mantle and Vulkan), whereas AMD is effectively a second-class citizen, and the differences between Windows and Linux benchmarks support that IMHO.

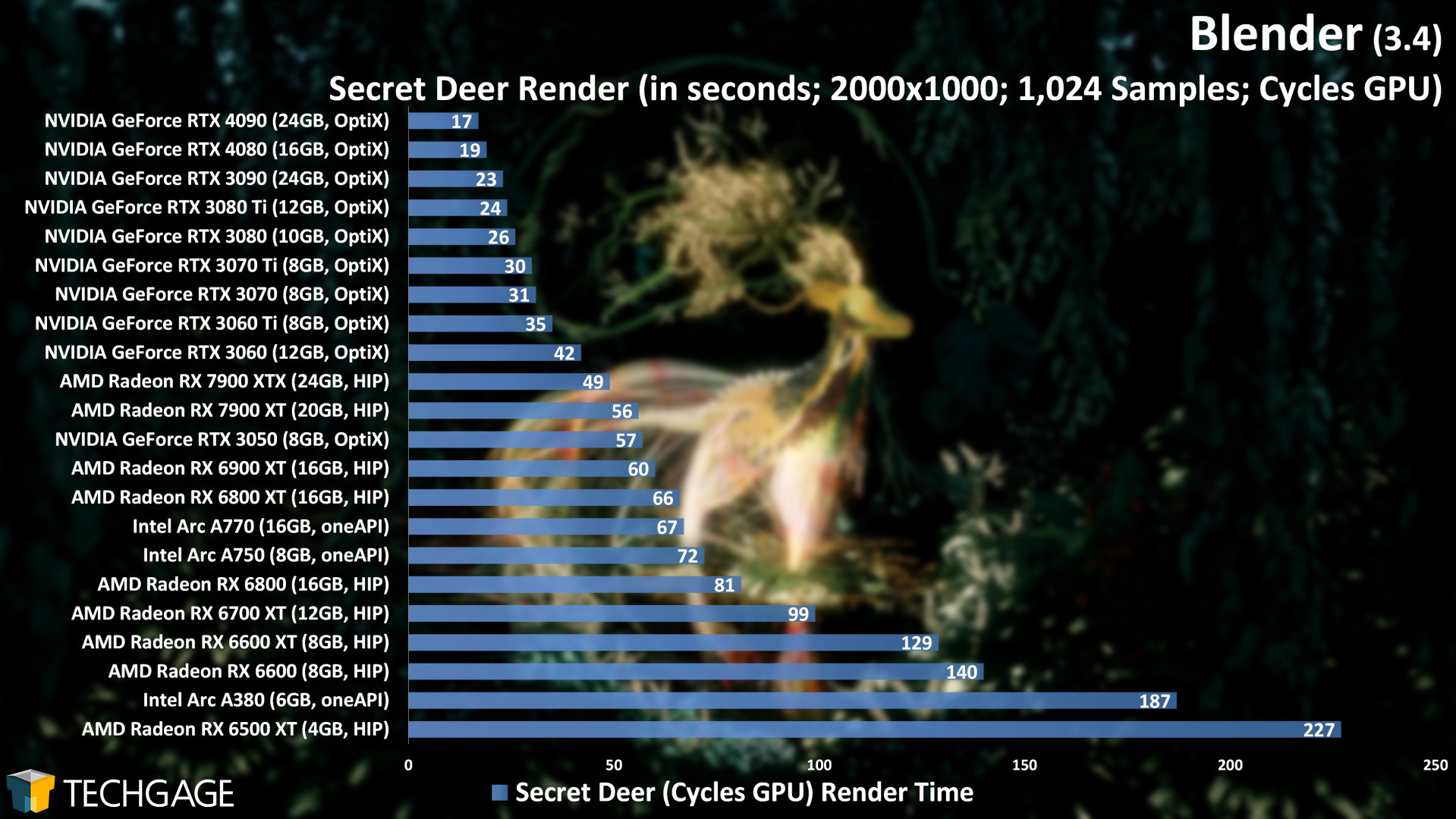

Nvidia have something like 80% of the Windows gaming market, which is 95% of the PC gaming market, so blaming AMD for a rigged game in which they are always playing driver catch-up hardly seems fair IMO. I haven't bought an AMD card since they were ATI, or ever bought an AMD CPU for myself, so I'm not an AMD fanboy saying this. But I do recognise that benchmarking on Windows with DirectX games most of the time reflects neither the hardware nor the effort AMD puts into its drivers, and even Intel alluded to the additional performance its Arc cards can get, by comparison, in Vulkan-based benchmarks.

Look at how Valve are getting results well above those of AMD APUs on Windows with the Steam Deck APU, and look at benchmarks of modern games like The Callisto Protocol, which was optimised for the PS5's Vulkan-style API, to see a better comparison of the hardware. That doesn't change the reality that, for gaming on Windows, buying AMD is at parity with, or more likely worse than, buying Nvidia.