Gaiff

SBI’s Resident Gaslighter

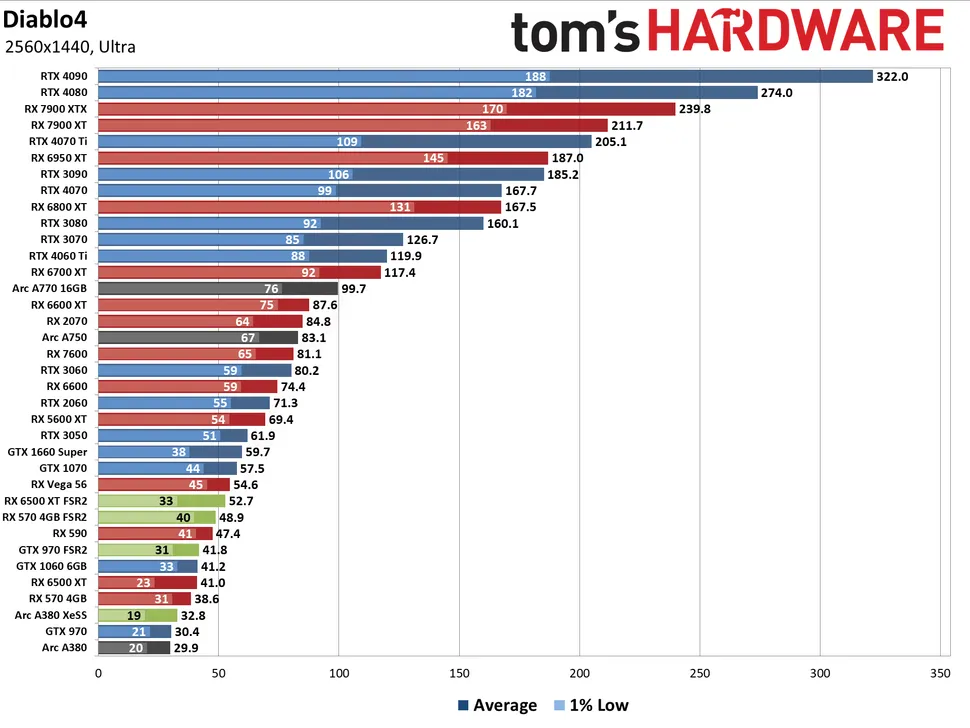

What has this got to do with Diablo 4? Oliver tested playing alone and didn't see dropped frames in large combat scenarios, but did see dropped frames in the central city, which is where Tom's Hardware tested. The implication is that the city is more demanding than even big fights, making it a good stress test.

It's PoE, but it's the same kind of game as D4, though Diablo 4 has far fewer monsters. Consoles don't have an X3D CPU.
