How can you compare film on green screens to CG?

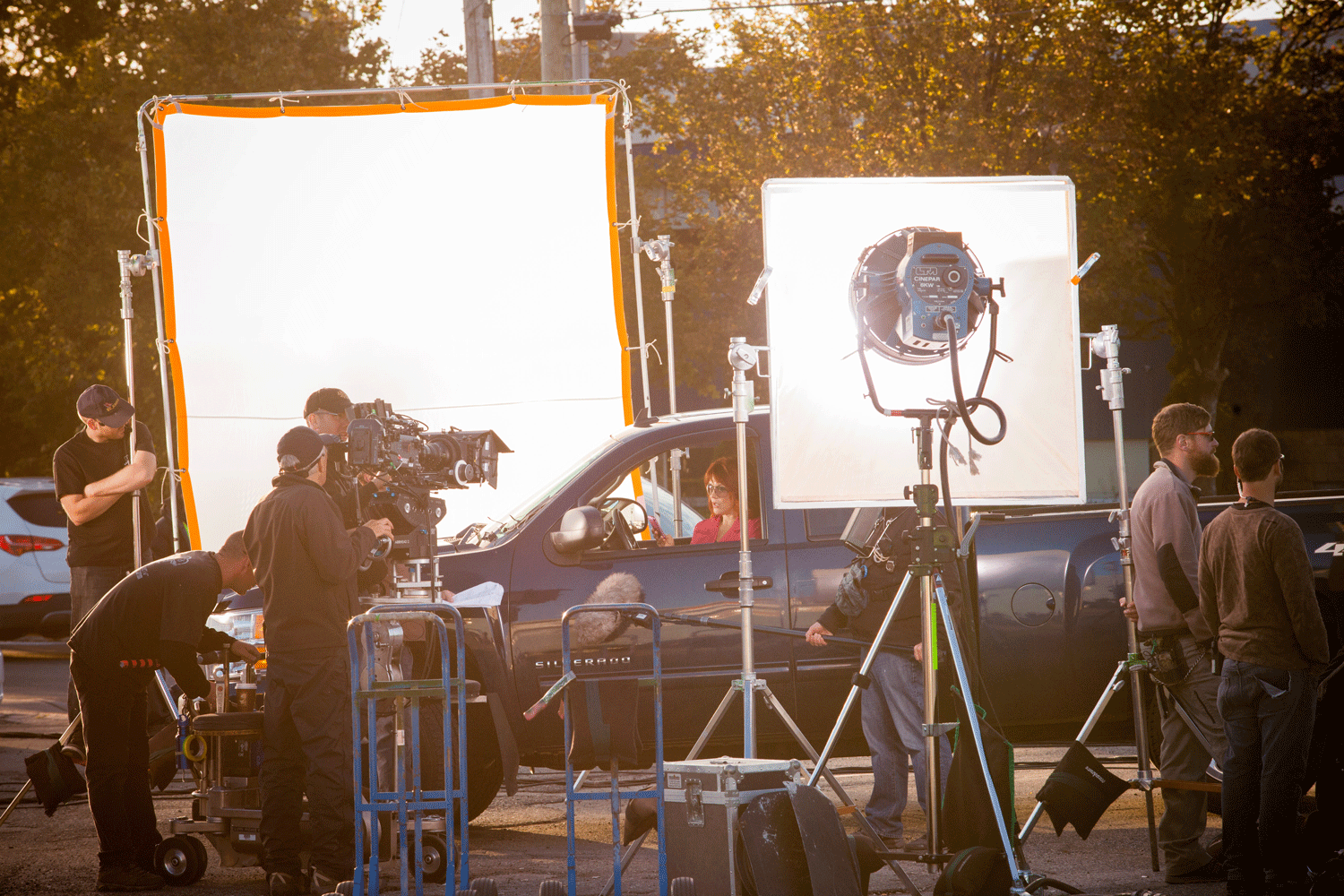

Because a lot of film technique shows up in game lighting: three-point lighting, bounce cards, etc.

Nanite is one aspect of getting high-quality geometry and textures. It's the first in like forever since video games came out. To me, that's an extremely long period of time just to get that.

You just said there's no need for new tools, that "Game tools don't need much work this generation." So which is it? Do you realize that when new compute power is made available, it leads to new ways of doing things and makes possible old ideas that weren't feasible before? You do realize that this change doesn't happen instantly?

For example, take a look at the deep learning breakthrough that happened in 2012. Deep learning today still mostly relies on core ideas that are decades old, some going back as far as the 60s. The difference is that back then we didn't have the compute power required to make those algorithms actually work. In 2012, thanks to advances in GPUs driven by the gaming industry, a small group of researchers trained those old algorithms on GPUs and BOOM, it worked. Practically overnight, hundreds of industries were created or affected by that one event in 2012. Tools were created too: entire toolchains to label and process datasets and train neural networks.

Now nearly every device or service you use has been affected by that one moment in 2012.

That's to say that tools don't exist until new or old ideas are made possible, which then leads to tools being created to explore those ideas. This change doesn't happen instantly.

and then you have hardware that can't even render it at a good performance level.

So which is it: will games look the same, or do games have to be native 4K at 60 fps to not look the same?

What does 1080p have to do with photorealism? Are you saying games have to be a mythical native 4K to be considered as "looks better"?

With all the reconstruction tech we have (FSR, DLSS, TSR, etc.), trying to render natively at anything higher than 1400p is literally a waste of compute power.
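To put rough numbers on that, here is a back-of-the-envelope sketch. It assumes "1400p" means roughly 2560x1440 and that native 4K means 3840x2160. The function name is made up for illustration, and it only counts pixels per frame, so it says nothing about real render cost or about what the upscalers themselves cost.

```python
# Rough pixel-count comparison between an internal render resolution
# and native 4K. Assumption: "1400p" is treated as 2560x1440 (QHD).

def pixel_count(width: int, height: int) -> int:
    """Number of pixels shaded per frame at a given resolution."""
    return width * height

native_4k = pixel_count(3840, 2160)   # native 4K target
qhd = pixel_count(2560, 1440)         # assumed internal resolution

# How many times more pixels native 4K has to shade per frame.
ratio = native_4k / qhd

print(f"4K pixels:    {native_4k}")
print(f"1440p pixels: {qhd}")
print(f"4K / 1440p:   {ratio}")
```

Under those assumptions, native 4K shades 2.25 times as many pixels per frame, which is the budget a reconstruction pass would be freeing up for other things like lighting and geometry.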

So far, there has been no game that has come out this generation that makes my claim false. Nothing.

Your statement has already been proven false and laughable by looking at the prior gen and how games looked.

Just to name a few generation transitions.

RDR 1 > RDR 2

TLOUS 1 > TLOUS 2

Beyond: Two Souls > Detroit: Become Human

Alan Wake > Control / Quantum Break

Last gen looked a gen ahead of the games that came before it, and you have given zero arguments against this.

Will there be games that will waste compute power trying to get to native 4k and 60 fps and hence not look a gen ahead? Yes.

Demos don't count when there isn't a game associated with them. If you don't have RT lighting, Nanite won't be good enough to make my claim false either. There are just way too many areas that need improvement in the graphics pipeline to make it a "generational leap" from last gen.

Demos shown last gen have already been surpassed. Not only that, but The Matrix Awakens isn't even your typical demo. It actually runs on consoles and it's playable. Lastly, the devs expressly stated that it had a lot of headroom for game logic and missions. This is a clear indication that you will never admit that you are wrong, no matter what. You will make up some bs because this is more about your vendetta against Sony fans than anything to do with graphics.