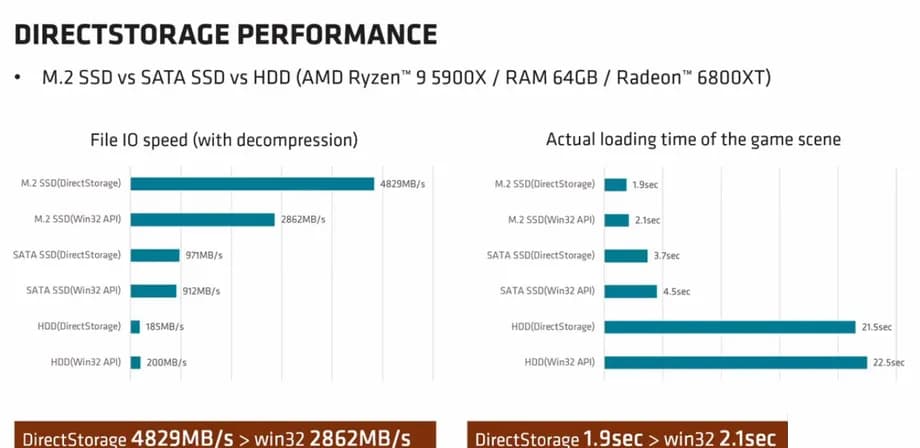

Maybe not. Judging from the first DirectStorage numbers from Forspoken, even a SATA SSD might be bottlenecked by the old Windows file system.

Still, even if we ignore SATA SSDs, Microsoft is more than half a decade late with DirectStorage.

Sony is not a software company, yet it already shipped a new file system that can make very effective use of NVMe SSDs. The PS5 was released 1.5 years ago.

Microsoft, the world's biggest software company, can't even keep pace.

And then there is the stutter in DirectX 12.

Vulkan already has extensions to reduce stutter from shader compilation.

Reducing Draw Time Hitching with VK_EXT_graphics_pipeline_library

And Linux is making strong strides in improving performance and reducing stutter. In some games, it already surpasses Windows by a mile.