Kraken vs ZLIB:

According to the official graph by RAD Game Tools, Kraken has a 29% higher compression ratio than ZLIB (used on PS4 and other platforms), and it compresses 3-5x faster (which doesn't really concern us as gamers; it's mainly good for devs). Both Kraken and ZLIB are lossless compressors, meaning the data (textures, audio, game files, etc.) comes back exactly as it was. Another advantage is that Kraken decompresses 297% (~4x) faster, which is critical for data streaming at the very least.

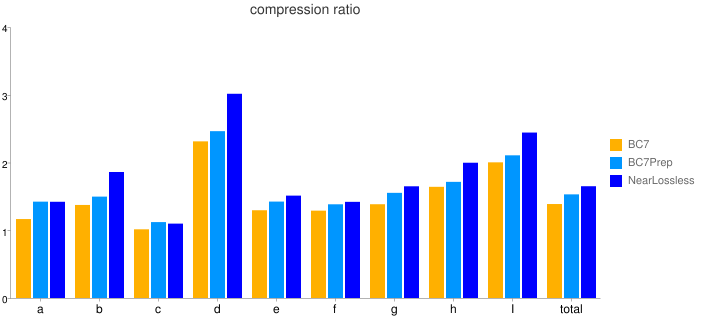
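Kraken itself isn't available in Python (Oodle is proprietary middleware). This minimal sketch therefore uses ZLIB, through Python's standard `zlib` module, to show what "lossless" means in practice and how a compression ratio is measured. The sample payload and the helper name `roundtrip_ratio` are made up for illustration.

```python
import zlib

def roundtrip_ratio(data: bytes, level: int = 9) -> float:
    """Compress with zlib, verify the round trip is lossless, return the ratio."""
    packed = zlib.compress(data, level)
    # Lossless means decompression gives back the input byte for byte.
    if zlib.decompress(packed) != data:
        raise RuntimeError("round trip changed the data")
    return len(data) / len(packed)

# Hypothetical payload: repetitive data, as many game files are, compresses well.
sample = b"texture header v1;" * 1000 + bytes(range(256)) * 16
print(f"zlib ratio: {roundtrip_ratio(sample):.1f}:1")
```

A "29% higher compression ratio" means that Kraken's value of `len(data) / len(packed)` on the same input would be 1.29 times ZLIB's.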

Oodle Texture:

Textures take up a big chunk of a game's total size. To push compression even further, Oodle Texture can shave off another 5-15% losslessly (or "near" losslessly when combined with RDO):

Lossless Transform for BC7: Oodle Texture also includes a lossless transform for BC7 blocks called "BC7Prep" that makes them more compressible. BC7Prep takes BC7 blocks that are often very difficult to compress and rearranges their bits, yielding 5-15% smaller files after subsequent compression. BC7Prep does require runtime reversal of the transform, which can be done on the GPU. BC7Prep can be used on existing BC7 encoded blocks, or for additional savings can be used with Oodle Texture RDO in near lossless mode. This allows significant size reduction on textures where maximum quality is necessary.
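The quote doesn't describe how BC7Prep actually rearranges the bits, so the sketch below is not BC7Prep. It swaps in a generic "byte-plane" reordering to illustrate the same idea. The transform is fully reversible (lossless), but it places similar bytes from different 16-byte blocks next to each other, which often lets a later compressor do better. The function names and the sample data (4 shared "header" bytes plus 12 noisy bytes per block) are hypothetical.

```python
import random
import zlib

def to_planes(blocks: bytes, block_size: int = 16) -> bytes:
    """Reorder bytes: byte 0 of every block first, then byte 1 of every block, etc."""
    if len(blocks) % block_size:
        raise ValueError("input must be a whole number of blocks")
    return b"".join(blocks[i::block_size] for i in range(block_size))

def from_planes(planes: bytes, block_size: int = 16) -> bytes:
    """Exact inverse of to_planes: scatter each plane back into its blocks."""
    if len(planes) % block_size:
        raise ValueError("input must be a whole number of blocks")
    n = len(planes) // block_size
    out = bytearray(len(planes))
    for i in range(block_size):
        out[i::block_size] = planes[i * n:(i + 1) * n]
    return bytes(out)

# Hypothetical data: 4 shared "header" bytes per block followed by 12 noisy bytes.
rng = random.Random(0)
blocks = b"".join(
    b"\x40\x00\x12\x34" + bytes(rng.getrandbits(8) for _ in range(12))
    for _ in range(1000)
)
planes = to_planes(blocks)
assert from_planes(planes) == blocks  # lossless: the transform is fully reversible
print("as blocks:", len(zlib.compress(blocks, 9)), "bytes")
print("as planes:", len(zlib.compress(planes, 9)), "bytes")
```

As the quote notes, a real transform like this has to be undone at load time before the GPU can use the blocks. That runtime step is the cost of the extra savings.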

Accepting a slight loss in quality, you can get texture files up to 50% smaller:

Oodle Texture Rate Distortion Optimization (RDO), sometimes known as "super compression", lets you encode BCN textures with your choice of size-quality tradeoff. Oodle Texture RDO searches the space of possible ways to convert your source texture into BCN, finding encodings that are both high visual quality and smaller after compression. RDO can often find near lossless encodings that save 10% in compressed size, and with only small visual difference can save 20-50%.

Oodle Texture RDO provides fine control with the lambda parameter, ranging from the same quality as non-RDO BCN encoding, to gradually lower quality, with no sudden increase in distortion, or unexpected bad results on some textures. Oodle Texture RDO is predictable and consistent across your whole data set; you can usually use the same lambda level on most of your textures with no manual inspection and tuning. Visit Oodle Texture RDO examples to see for yourself.
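Rate-distortion optimization in general picks, among candidate encodings, the one that minimizes a cost J = D + λ·R, where D is distortion (quality loss) and R is rate (compressed size). The toy sketch below shows how raising λ shifts the choice from quality toward size. Oodle's actual cost function and how it scales its lambda parameter aren't described in the source. The candidates and their numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    distortion: float  # quality loss vs. the source texels (hypothetical units)
    rate: float        # estimated size after compression, in bits (hypothetical)

def pick_encoding(candidates, lam):
    """Return the candidate minimising J = D + lam * R."""
    return min(candidates, key=lambda c: c.distortion + lam * c.rate)

cands = [
    Candidate("best quality", distortion=0.0, rate=100.0),
    Candidate("slightly lossy", distortion=5.0, rate=70.0),
    Candidate("very lossy", distortion=40.0, rate=40.0),
]
for lam in (0.0, 0.5, 2.0):
    # lam = 0 ignores size entirely; larger lam trades more quality for size.
    print(lam, pick_encoding(cands, lam).name)
```

With λ = 0 the cost is pure distortion, so the encoder behaves like a non-RDO encoder. That matches the quote's description of the lambda range starting at "the same quality as non-RDO BCN encoding".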

RDO encoding just produces BCN block data, which can be put directly into textures, and stored in hardware tiled order. Oodle Texture RDO does not make a custom output format and therefore requires no runtime unpacking.

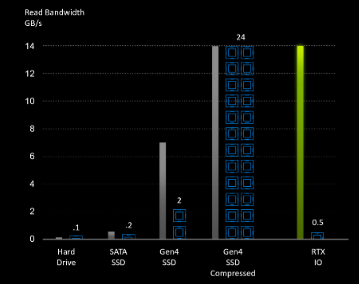
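BCn formats are fixed-rate: BC1 and BC4 use 8 bytes per 4×4 block of texels, and BC2, BC3, BC5, BC6H and BC7 use 16 bytes per block. The sketch below computes the raw size of a BCn texture. Because that size is fixed by resolution and format, RDO cannot change it. The savings quoted above only show up after the general-purpose lossless compressor runs. Hardware tiling is not modelled, and the helper name is hypothetical.

```python
BYTES_PER_BLOCK = {"BC1": 8, "BC4": 8, "BC2": 16, "BC3": 16,
                   "BC5": 16, "BC6H": 16, "BC7": 16}

def bcn_size(width: int, height: int, fmt: str, mips: bool = False) -> int:
    """Raw size in bytes of a BCn texture, optionally with a full mip chain."""
    total = 0
    w, h = width, height
    while True:
        # Partial blocks at the edges still occupy a whole 4x4 block.
        blocks = ((w + 3) // 4) * ((h + 3) // 4)
        total += blocks * BYTES_PER_BLOCK[fmt]
        if not mips or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)
    return total

print(bcn_size(4096, 4096, "BC7") / 2**20, "MiB")  # a single 4K BC7 texture
```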

Kraken Hardware Decompressor:

That said, the Kraken hardware decompressor on PS5 should work best with Oodle Texture, but Oodle Texture works with any lossless decompressor, such as the ZLIB hardware decompressors on PS4 and Xbox Series X|S. Xbox Series X|S also already has a great texture compression solution of its own, known as BCpack.

Oodle Texture RDO optimizes for the compressed size of the texture after packing with any general purpose lossless data compressor. This means it works great with hardware decompression. On consoles with hardware decompression, RDO textures can be loaded directly to GPU memory, Oodle Texture doesn't require any runtime unpacking or CPU interaction at all. Of course it works great with Kraken and all the compressors in Oodle Data Compression, but you don't need Oodle Data to use Oodle Texture, they are independent.
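To illustrate "works with any general purpose lossless data compressor", this sketch packs the same data with three standard-library compressors (`zlib`, `bz2`, `lzma`). The "RDO-like" data is a crude, hypothetical stand-in: blocks drawn from a small palette so byte patterns repeat. This imitates the kind of repetition an RDO encoder tries to create. It is not how Oodle Texture actually searches for encodings.

```python
import bz2
import lzma
import random
import zlib

BLOCK = 16  # bytes per BC7-style block

def make_blocks(n, palette_size, seed=0):
    """n blocks; unique random blocks if palette_size is None, else palette picks."""
    rng = random.Random(seed)
    if palette_size is None:
        # Every block different: a stand-in for hard-to-compress, non-RDO data.
        return bytes(rng.getrandbits(8) for _ in range(n * BLOCK))
    palette = [bytes(rng.getrandbits(8) for _ in range(BLOCK))
               for _ in range(palette_size)]
    return b"".join(rng.choice(palette) for _ in range(n))

COMPRESSORS = {"zlib": zlib.compress, "bz2": bz2.compress, "lzma": lzma.compress}

def compressed_sizes(data):
    return {name: len(fn(data)) for name, fn in COMPRESSORS.items()}

plain = make_blocks(4096, None)
rdo_like = make_blocks(4096, 64)
print("plain   ", compressed_sizes(plain))
print("RDO-like", compressed_sizes(rdo_like))
```

The raw sizes are identical. The repetition pays off no matter which compressor runs afterwards, which is why an RDO encoder doesn't need to be tied to Kraken.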

Sources:

RAD Game Tools' web page. RAD makes Bink Video, the Telemetry Performance Visualization System, and Oodle Data Compression - all popular video game middleware.

www.radgametools.com


PS5 vs Xbox Series X:

Since Xbox Series X|S achieves a roughly similar texture size reduction with BCpack, we can't say Oodle Texture is better than BCpack, nor vice versa. However, Sony has licensed Kraken for all PS4/PS5 developers. Kraken still has a 29% compression-ratio advantage over the ZLIB used on PS4 and Xbox Series X|S, and on PS5 it is paired with a dedicated Kraken hardware decompressor. We also know that Xbox Series S will get cut-down versions of the Series X textures, so we'll leave it out of the comparison. Assuming the textures/assets are the same on both consoles:

On top of that, with 297% (~4x) faster decompression, which should be even better with the Kraken hardware decompressor, PS5 might use the extra space for higher quality assets while still streaming them in quickly.

It doesn't stop there, though. With the PS5's unprecedented SSD and I/O, it could eliminate the use of LODs (Levels of Detail), which can mean 5-7 copies of an asset at different resolutions/qualities. Sony's Atom View introduced this technique in 2017: it uses only one high quality asset and streams polygons as you get closer. The result is much higher quality textures without wasting space on duplicates (5-7 versions of the same asset), as the old-fashioned LOD system does. Atom View works as a plugin for the UE and Unity engines, and probably with other Sony WWS proprietary engines:
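How much storage a 5-7 level LOD chain wastes depends on how much smaller each level is than the one before. This sketch assumes a fixed reduction factor per level, which is a simplification; real LOD chains vary per asset. Keeping half the data per level makes the chain cost close to 2x the single full-detail asset. Keeping a quarter (e.g. halving a texture's width and height) makes it about 1.33x.

```python
def lod_chain_cost(levels: int, reduction: float = 0.5) -> float:
    """Total storage of a LOD chain relative to the full-detail asset (1.0).

    Assumes each level keeps `reduction` of the previous level's data.
    """
    return sum(reduction ** i for i in range(levels))

for reduction in (0.25, 0.5):
    for levels in (5, 7):
        cost = lod_chain_cost(levels, reduction)
        print(f"{levels} levels, x{reduction} per level: {cost:.3f}x the base asset")
```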

Sony has helped Epic Games achieve similar tech, known as "Nanite", in their upcoming Unreal Engine 5. It uses hundreds of billions of polygons of uncompressed 8K Hollywood-level assets with up to 16K shadows, all crunched losslessly to around 20 million polygons per frame for this roughly 3.7 million pixel (2560×1440) gameplay demo:

(Timestamped)
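As a quick sanity check on those numbers, a 1440p frame is 2560×1440 pixels. Dividing the "around 20 million" polygons per frame by that gives roughly 5 polygons per pixel. In other words, geometry detail at that point is finer than the screen can even show.

```python
width, height = 2560, 1440          # 1440p frame
pixels = width * height
triangles_per_frame = 20_000_000    # "around 20 million", per the demo description

print(pixels)                                     # 3686400
print(f"{triangles_per_frame / pixels:.1f} polygons per pixel")  # 5.4 polygons per pixel
```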

TL;DR

PS5 should be able to use this headroom for higher quality assets than other platforms, or to store the same quality assets more efficiently, resulting in smaller game sizes. All this while still being able to eliminate LODs and spend that extra space on a bump in asset quality, at the same smaller overall game size!

Thank you for reading.

EDIT: Thanks to GreyHand23 for the addition: