Bitmap Frogs

Mr. Community

I will definitely pass that on to the rest of the team.

Don't we have a thunderdome thread?

Of course it doesn't get much traffic because the entire forum is now the thunderdome.

Do you think it would be possible to create a console war thread where anything goes? My thought is that a lot of that type of behavior would be channeled there, which would somewhat keep it out of these threads. Just a suggestion.

but we already have that. it's called the next gen speculation thread. ;p

I will definitely pass that on to the rest of the team.

The delta of xsx being better in all multiplats.

It can, because we do know that RDNA2 is 7nm+RT, all other features are optional.

CDNA cards won't have them, for example, still RDNA2-based.

CDNA is based on GCN and Vega because that uarch is a monster for compute workloads.

This was a solid thread at the start before warriors

I wonder if anyone on the XBOX team could tell us more about best tested case uses of their ML upscaling tech. I think it's going to be great for BC and am looking forward to that. I wonder if it will have some real cool DLSS type use cases.

They don't have to worry about it. They put code into the machine and the machine uses the clock that's needed for the code.

But the thing you’re assuming is that it makes a difference or that people care. It running at that clock speed all the time isn’t a bad thing. In fact it’s a good thing because it’s one less thing the developers have to worry about.

Series X obviously has the better GPU. Imo this can only mean 2 things. Sony cheaped out a bit and the ps5 will be at least $100 cheaper. Or.. Sony is convinced their SSD tech will be a bigger game changer than a better gpu.

But it doesn't, we already know that's a lowball figure.

First of all bandwidth is a huge differentiator especially when it comes to rendering performance. It's the reason the Xbox One X was running games on average at resolutions 70-80% higher than the PlayStation 4 Pro while only having a 43% more capable GPU. With the PlayStation 5 and Series X we're again met with a large divergence in memory bandwidth afforded to the GPU.

Secondly, there's what I said about frequency not closing the gap with CUs: it simply does not. A GPU with fewer CUs overclocked to reach the stated teraflop figure of a comparable GPU with more CUs at a lower frequency still leaves the higher-CU GPU with the obvious workload advantage. Bear in mind this is with both GPUs hitting the same teraflop target; between the Series X and the PlayStation 5 there is a 1.875 teraflop disparity, so it's plain to see how that pans out when extrapolated.

Third we come down to the architectures, the cat is out of the bag for the PlayStation 5 in terms of being a hybrid GPU implementation, this means the GPU will come with the benefits of 7nm RDNA architectural efficiencies. For the Series X it's quite clearly an RDNA 2 GPU given its specifications, with that comes the efficiency uplift of being natively of that architecture and 7nm+. What that uplift is has yet to be revealed but it's not going to be 0% which would widen the gap further.

Fourth we come to the variability factor of the PlayStation 5, its frequency is not fixed, it deviates based upon the power budget of what it's processing/rendering. That "18%" is based upon the PlayStation 5 when at peak boost frequency, but we all know that's not going to be the case especially in rendering heavy games where power demands spike dramatically.

So at the end of the day that 18% is not actually 18%, it is left to be seen what it actually averages out to but it's going to be a decent and substantive margin above that.
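For reference, the teraflop and percentage figures being argued over fall out of simple arithmetic. Below is a minimal Python sketch, assuming the publicly stated specs (52 CUs at 1.825 GHz for Series X, 36 CUs at up to 2.23 GHz for PS5) and the usual convention of 64 lanes per CU with 2 FLOPs per lane per clock. The 2.0 GHz case is purely a hypothetical to show how the gap moves with clock, not a claim about where the PS5 actually runs.

```python
def fp32_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS: CUs * 64 lanes * 2 FLOPs (one FMA) per clock."""
    return compute_units * 64 * 2 * clock_ghz / 1000.0


def gap_percent(faster_tf: float, slower_tf: float) -> float:
    """How much larger the first figure is than the second, in percent."""
    return (faster_tf / slower_tf - 1.0) * 100.0


xsx_tf = fp32_tflops(52, 1.825)       # fixed clock
ps5_peak_tf = fp32_tflops(36, 2.23)   # at the stated frequency cap
ps5_hypo_tf = fp32_tflops(36, 2.0)    # hypothetical lower clock, illustration only

print(f"XSX: {xsx_tf:.2f} TF, PS5 at cap: {ps5_peak_tf:.2f} TF")
print(f"Gap at cap: {xsx_tf - ps5_peak_tf:.2f} TF ({gap_percent(xsx_tf, ps5_peak_tf):.1f}%)")
print(f"Gap at hypothetical 2.0 GHz: {gap_percent(xsx_tf, ps5_hypo_tf):.1f}%")
```

The computed gap at the cap comes out within rounding of the 1.875 TF and 18% figures quoted above. Peak FLOPS says nothing about memory bandwidth or sustained clocks, which is what the rest of the argument is about.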

Series X obviously has the better GPU. Imo this can only mean 2 things. Sony cheaped out a bit and the ps5 will be at least $100 cheaper. Or.. Sony is convinced their SSD tech will be a bigger game changer than a better gpu.

Both consoles have their strengths in different areas and it will be up to 3rd party developers which strengths they will focus on more. Typically that means the console that has the biggest installbase...

We'll see but I got a feeling Sony's 1st party games will stand out a lot compared to MS's exclusives, though. With the whole power narrative, MS isn't doing Series X any favors by shackling it to current gen, potato PCs and a 4Tflops Series S for the remainder of the console generation.

CDNA is based on GCN and Vega because that uarch is a monster for compute workloads.

It won't be "shackled" to current gen for the entire generation. Why does this narrative keep getting repeated by Sony fans?

If you and your co-worker did the exact same job at the exact same skill and effort level and yet they were paid 18% more than you, would you consider that to be 'significant'?

18% isn't "significant" imo

...and well, the One X is noticeably better than the pro for third-party games, you know, the vast majority of games.

I/O advantages? I think we're not going to see that much difference tbh

The delta between Pro and X was even higher and well...

I meant, shackled to Jaguar cpu and HDD for the first two years, and shackled to the 4Tflops Series S for the remainder of the console generation.

Yes, but without smart-shift boosting the PS5 would be weaker. You're treating it like it's a feature when I suspect that Sony are, understandably, selling the positive features of what was really a necessity.

That's not what Matt implied. Like Jason Ronald said, if they went with variable clocks, XSX would have more FLOPS. That is what Matt was implying: XSX would have more FLOPS.

Your point about the Jaguar cpu and HDD has been addressed multiple times in this thread already. It's nonsense.

I meant, shackled to Jaguar cpu and HDD for the first two years, and shackled to the 4Tflops Series S for the remainder of the console generation.

Series X is great piece of kit, no arguing that. It's more Microsoft's corporate strategy for next gen that stops Series X from tapping into that next gen excitement.

Yeah it is 20 channels, I believe (although could be wrong on what I am looking at)

Possibly? If they are relying on hardware BC I assume they will need to keep this level of functionality and ensure the PS5 can provide it. I'm wondering if they will have a software solution for allowing the GPU to snoop CPU cache; Series X allows its GPU to snoop CPU cache through hardware support but the inverse requires a software solution.

Then again, I'm wondering if in fact that's not something PS5 has and therefore could be a reason they added the cache scrubbers to the GPU.

I know the Era user you are talking about, Lady Gaia, and I remember more or less what they were saying. And I did notice the SoC coherency fabric MCs in the graph but wasn't sure what to make of them (but it sounds like they are memory controllers as you say). However, I could've sworn MS listed their GDDR6 as 20-channel, not 16-channel.

Aside from that, I actually don't think what Lady Gaia (or you here for that matter) were saying is at play with MS's setup, because the "coherency" in SoC Coherency Fabric MC gives it away, at least IMHO. Coherency in the MCs would mean that consistency in data accesses, modifies/writes etc. between chips being handled through the two would be maintained by the system automatically, and not require shadow masking/copying of data from one pool (the fast pool) to the other (the slower pool), or vice-versa. Otherwise, if not for the coherency, that would probably be a requirement as we see on PCs (which fwiw are NUMA systems by and large rather than hUMA like the consoles will be).

There is probably still some very slight penalty in switching from the faster pool to the slower one, but I'm guessing this is only a few cycles lost. There was some stuff from a rather ridiculous blog I came across written back in March that tried insinuating a situation where Series X was pulling in less bandwidth than the PS4 in real-world situations due to interleaving the memory; quite surely no console designer would choose a solution with THAT massive of a downside for a next-gen system release, so it made it easy to write that idea off. Lady Gaia's take makes a bit more sense but even there I think they are overshooting the penalty cost.

Additionally, we should consider that games won't be spending even amounts of time between the faster and slower memory pools. That's the main reason I tend to write off anything trying to average out the bandwidth between the pools. Yeah, it's a neat metric to consider for theoretical discussion, but it makes no sense to present as a realistic use-case figure because you will have most games spending the vast majority of the time on GPU-bound operations. There are probably other things MS have implemented with the memory management between the two pools handled through API tools that haven't been disclosed (though I hope they disclose them at some point) that probably simplify things a lot for devs and take advantage of the fact the system is still by all accounts a hUMA design.
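To put rough numbers on why a plain average of the two pools is misleading, here is a toy Python sketch. It assumes the 560 GB/s and 336 GB/s peak figures discussed in this thread and a deliberately simple model in which transfers to the two pools are serialized; the `effective_bandwidth` helper and the traffic splits are hypothetical, and real hardware behaviour will differ.

```python
FAST_GBPS = 560.0   # 10 GB pool, full 320-bit bus (peak)
SLOW_GBPS = 336.0   # 6 GB pool, 192-bit (peak)


def effective_bandwidth(fast_fraction: float) -> float:
    """Bandwidth seen when `fast_fraction` of the bytes come from the fast pool.

    Toy model: transfers are serialized, so total time is the sum of the time
    spent in each pool (a weighted harmonic mean, not a plain average).
    """
    if not 0.0 <= fast_fraction <= 1.0:
        raise ValueError("fast_fraction must be between 0 and 1")
    time_per_gb = fast_fraction / FAST_GBPS + (1.0 - fast_fraction) / SLOW_GBPS
    return 1.0 / time_per_gb


for share in (0.5, 0.9, 1.0):
    print(f"{share:.0%} of traffic in fast pool -> {effective_bandwidth(share):.0f} GB/s")
```

Under this model an even split gives 420 GB/s, while a 90/10 split already gives 525 GB/s, which is the point being made: the figure depends almost entirely on how much traffic actually lands in the slower pool.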

en.wikichip.org

Yeah but who cares about 3rd party shit being cross-gen?

It helps to justify the purchase of the weaker console.

It's conveniently forgotten that both consoles will have cross-gen games, that only MS first party are mandated to support the Xbox One and that it's only for a couple of years, so will only impact a relative handful of games in reality.

Did I say forgotten? Sorry, I meant deliberately ignored for FUD purposes.

Parity is a thing and developers do have to design around it. So if MS launches two consoles with such a gap in performance, surely one has to suffer over the other? People keep saying games will just run at a bit lower resolution and framerate on Series S. But what if a developer doesn't want to target native 4k and 60fps? What if developers can get much better results out of these consoles by targeting 30fps and 1440p, for example?

Your point about the Jaguar cpu and HDD has been addressed multiple times in this thread already. It's nonsense.

As for the Series S, my understanding is that it's designed to play next-gen games just at lower framerates/resolutions. That's it. It still has a vastly improved GPU/CPU compared to current gen. It still has an SSD.

Nothing will be shackled by that. You need to let go of this narrative.

Yeah it is 20 channels, I believe (although could be wrong on what I am looking at). I counted 10 channels on each MC block, with what looked like pass-thru on both the inside and outside (left & right edges), with the blue stuff presumably being the Infinity Fabric (Scalable Data Fabric) bus connecting everything.

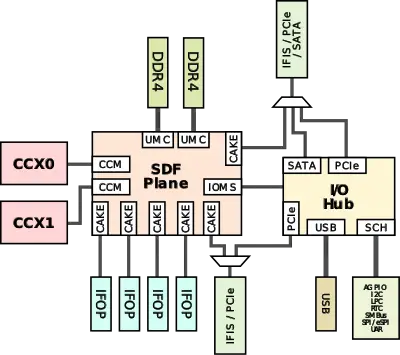

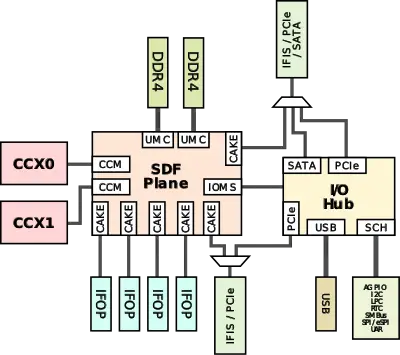

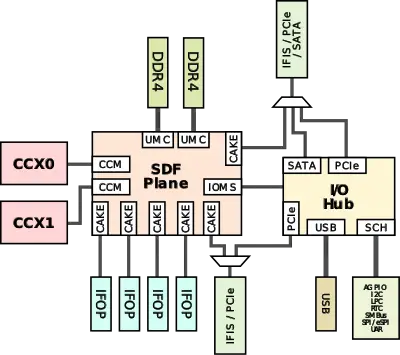

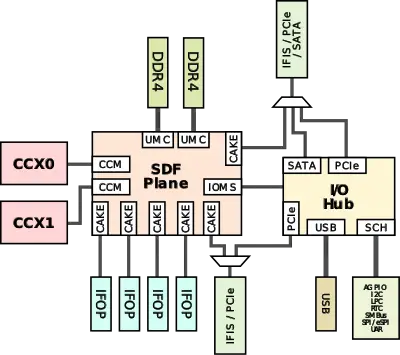

But unless everyone else is looking at more info than me (I just looked at the OP's Tom's Hardware link and slides), that's where the term "coherency" might be getting misunderstood by me, or by others, because I believe it indicates that the chip is definitely 2017 Infinity Fabric tech rather than the incoming Infinity Architecture technology, and so the "coherency" is about the CCX module coherency stuff like Cache Coherency Master and CAKE (Coherent AMD socKet Extender) shown over at wikichip.

Infinity Fabric (IF) - AMD - WikiChip

Infinity Fabric (IF) is a proprietary system interconnect architecture that facilitates data and control transmission across all linked components. This architecture is utilized by AMD's recent microarchitectures for both CPU (i.e., Zen) and graphics (e.g., Vega), and any other additional... (en.wikichip.org)

As for memory access, and the cost of accessing at 192-bit (instead of 320-bit): if you are saying all the disk IO goes through the CPU, which I speculated in the next gen thread months ago, then surely streaming data (like the UE5 demo) as REYES will mean that the CPU (IO decompressor) will be flat out with the 6GB pool, and keeping the GPU's access from also being reduced to 192-bit will require that data to be moved to the 10GB pool every few frames, no?

The diagram below that GODbody linked, is that an official slide? It looks official with the corner logo.

The reason I ask is that, looking at the die-shot in the slides, I would be expecting something more like a 2 storey diagram interfacing the two MC blocks to the high & low chip address lines, with interleaved channels coming out of each MC block alternating between high and low, visualising interleaved connectivity but with addressing that requires asynchronous accesses, with the MCs translating/bit shifting/swizzling/etc. when the GPU accesses the low memory, or when the CPU accesses the high memory.

Fair enough. It seems that no matter how much info is divulged about this console, there are still questions on how certain things work. Back when the specs were revealed I speculated about all the issues that this weird RAM config may have. If you're interested...

Ascend

I used the terms "high" and "low" because they are in the official slide that states "interleaving" memory.

Yes, but without smart-shift boosting the PS5 would be weaker. You're treating it like it's a feature when I suspect that Sony are, understandably, selling the positive features of what was really a necessity.

Without boosts the PS5 wouldn't be anywhere close to competitive in the hardware stakes.

The Series X doesn't have Smartshift because it simply doesn't need it.

While they did mention specifically "machine learning inference acceleration for resolution scaling on games" at Hot Chips,

I wonder if anyone on the XBOX team could tell us more about best tested case uses of their ML upscaling tech. I think it's going to be great for BC and am looking forward to that. I wonder if it will have some real cool DLSS type use cases.

oh come on now..! there's been enough defiance of logic in EVERY other thread. and it seems everybody is becoming dumber because of it.

Clocks in PS5 aren't boosted. If they were boosted, PS5 would be a 9 TF console, not 10. Of course XSX doesn't need Smartshift when it has locked clocks. MS designed XSX with locked clocks in mind. Go figure

Don't forget 12GB RAM (around 9GB usable for games) vs 8GB (around 5.5GB for games) on the PS4 Pro. That was a major factor.

But it doesn't, we already know that's a lowball figure.

First of all bandwidth is a huge differentiator especially when it comes to rendering performance. It's the reason the Xbox One X was running games on average at resolutions 70-80% higher than the PlayStation 4 Pro while only having a 43% more capable GPU. With the PlayStation 5 and Series X we're again met with a large divergence in memory bandwidth afforded to the GPU.

[SNIP]

I wonder what the point "Two independent virtualized command streams, different DX API levels" is all about?

oh come on now..! there's been enough defiance of logic in EVERY other thread. and it seems everybody is becoming dumber because of it.

First of all bandwidth is a huge differentiator especially when it comes to rendering performance. It's the reason the Xbox One X was running games on average at resolutions 70-80% higher than the PlayStation 4 Pro while only having a 43% more capable GPU. With the PlayStation 5 and Series X we're again met with a large divergence in memory bandwidth afforded to the GPU.

yeah, right.

PS5 has capped clocks at 3.5 and 2.23. There is no boost if clocks are capped. BC games will receive a boost. Not next-gen games.

Wow that fell upon deaf ears. I wonder why.

yeah, right.

as I said already, its practically groundhog day with you guys. every day the same.

cerny himself said that it was impossible for ps5 to have a sustained gpu at 2.0Ghz, with cpu at 3.0Ghz

but somehow, some people now believe that now with boost mode, the clock will be way more than 2.0Ghz all of the time. 2.23 to be exact

oh, and with cpu at 3.5Ghz no less

here you go, we had this same conversation the day before, and I put up the exact words of chef cerny, bookmarked for your convenience:

Next-Gen PS5 & XSX |OT| Console tEch threaD

Covid is still having such a massive impact on distribution channels (I’m seeing this at work a lot). I'm seeing it a lot as well. Toilet Paper is starting to disappear again. And I can't get any ammo!!!! (www.neogaf.com)

I dont need to repeat myself describing how stupid this entire thing is, and how it completely evades logic.

but hey, console warz!

This is just a poor analogy.

If you and your co-worker did the exact same job at the exact same skill and effort level and yet they were paid 18% more than you, would you consider that to be 'significant'?

Not every operation needs that much bandwidth.

Of course, more TF, bigger BW needed.

Bandwidth per TF is very similar on both. Mind you that XSX RAM speed will go down when games will use more than 10 GB.
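The "bandwidth per TF" comparison can be checked with a few lines of Python. This is a rough sketch using the publicly stated peak figures (560 GB/s and 12.15 TF for Series X, 448 GB/s and 10.28 TF for PS5) plus the 336 GB/s figure for the Series X slower pool; the dictionary layout and helper name are just illustrative.

```python
SPECS = {
    "Series X (10 GB pool)": {"bandwidth_gbps": 560.0, "tflops": 12.15},
    "Series X (6 GB pool)": {"bandwidth_gbps": 336.0, "tflops": 12.15},
    "PS5": {"bandwidth_gbps": 448.0, "tflops": 10.28},
}


def gb_per_tflop(bandwidth_gbps: float, tflops: float) -> float:
    """Peak memory bandwidth available per peak teraflop."""
    return bandwidth_gbps / tflops


ratios = {name: gb_per_tflop(**spec) for name, spec in SPECS.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f} GB/s per TF")
```

On peak numbers the two consoles land within a few GB/s per TF of each other when Series X is reading from its fast pool, and Series X drops well below PS5 only for traffic that hits the slower pool. These are paper ratios, not measured throughput.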

Actually, they could use it, but they wanted fixed clock rates. Sony wanted to limit the chip by max power usage; MS wanted to fix the clocks, so they must have a big enough PSU to support everything the APU demands from it. Therefore you could say that Sony uses the given silicon in a more efficient way. Btw, you don't need smartshift for variable clock rates; smartshift only delivers the "unused" power to the component that needs it. MS could also just increase clock speeds depending on the GPU usage and the power envelope for the GPU. The tech for that should be inside the AMD chip, but it comes with some side effects. But why should they do that? It could make fewer chips usable for a console (lower the yields) and would make things more complicated for developers. And with their strange memory system, they already made the console more complicated for developers... just to save costs. Well, they should have just made the game memory fast and only the OS part with a slower connection. That would have made it much simpler for developers to optimize for. But well, it is still much easier than optimizing for a small SRAM pool (like in Xbox One).

Why does MS not use smartshift too? It's a standard RDNA 2 feature. This would help squeeze out a bit more GPU power by lowering the CPU clock in a GPU-bound situation. This could effectively add 0.5 TF at least.

Maybe lowering the CPU does not give the required "fuel" to feed all these 52 CUs.

Even if it's a little more complicated for the developer, they can offer it as an option at least.

You can't just subtract the max CPU bandwidth from the GPU bandwidth. The bandwidth is for both of them (CPUs are often limited, but they normally don't need that much bandwidth). It only gets complicated on Xbox Series X. If a component wants to access the 6GB pool, it is limited to the bandwidth of that 6GB pool; if it wants to access the 10GB pool, it is limited only by the top speed. But as the OS uses the largest part of the 6GB pool, it should not really happen often that the speed is limited by the 6GB pool.

These six 2GB RAM chips (1GB for CPU and the other for GPU). When the CPU needs data, they will be locked for GPU data access, right?

So, when the CPU accesses all 6 chips at 336GB/s, the GPU will access only 4 chips of 1GB each, at 224GB/s, right? It can change if the GPU uses some of the 6GB of CPU memory.

In PS5, I guess it's different, because if the CPU uses 1 chip containing 2GB, it will be 56GB/s for the CPU and the 7 other 2GB chips will be for the GPU, 392GB/s.

So, it seems to me Series X has a 560GB/s GPU bus, but not constant. And PS5 will have about a 392GB/s GPU bus, but constant.
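The per-chip numbers in this exchange come from GDDR6 arithmetic, sketched below in Python. It assumes 14 Gbit/s per pin and a 32-bit interface per chip, which matches the 56GB/s-per-chip figure used above; the function names are illustrative. It only reproduces peak bus figures and does not model CPU/GPU contention, which is the part actually in dispute.

```python
GBIT_PER_PIN = 14     # assumed GDDR6 data rate per pin, Gbit/s
PINS_PER_CHIP = 32    # each GDDR6 chip has a 32-bit interface


def chip_bandwidth_gbps() -> float:
    """Peak bandwidth of one chip in GB/s (bits converted to bytes)."""
    return GBIT_PER_PIN * PINS_PER_CHIP / 8


def bus_bandwidth_gbps(num_chips: int) -> float:
    """Peak bandwidth when an access is striped across `num_chips` chips."""
    return num_chips * chip_bandwidth_gbps()


xsx_fast = bus_bandwidth_gbps(10)   # 10 GB pool spans all ten chips
xsx_slow = bus_bandwidth_gbps(6)    # 6 GB pool spans the six 2GB chips
ps5_total = bus_bandwidth_gbps(8)   # eight 2GB chips

print(chip_bandwidth_gbps(), xsx_fast, xsx_slow, ps5_total)
```

The 392GB/s figure in the post above is simply the PS5 total minus one chip's worth (448 - 56), which is the subtraction the reply objects to.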

That's not how it works.

Of course, more TF, bigger BW needed.

Bandwidth per TF is very similar on both. Mind you that XSX RAM speed will go down when games will use more than 10 GB.

yeah, right.

as I said already, its practically groundhog day with you guys. every day the same

It's really important to clarify the PlayStation 5's use of variable frequencies. It's called 'boost' but it should not be compared with similarly named technologies found in smartphones, or even PC components like CPUs and GPUs. There, peak performance is tied directly to thermal headroom, so in higher temperature environments, gaming frame-rates can be lower - sometimes a lot lower. This is entirely at odds with expectations from a console, where we expect all machines to deliver the exact same performance. To be abundantly clear from the outset, PlayStation 5 is not boosting clocks in this way. According to Sony, all PS5 consoles process the same workloads with the same performance level in any environment, no matter what the ambient temperature may be

cerny himself said that it was impossible for ps5 to have a sustained gpu at 2.0Ghz, with cpu at 3.0Ghz

You can't just substract the max CPU bandwidth from the GPU bandwidth. The bandwidth is for both of them (but CPUs are often limited but they normally don't need that much bandwidth). It only get's complicated on xbox series x. If a component wants to access the 6gb pool it is limited to the bandwidth of those 6gb, if you want to access the 10GB pool, you are limited by the top speed. But as the OS uses the largest part of the 6GB pool, it should be really not happen often, that the speed is limited by the 6gb pool.

Ascend

I used the terms "high" and "low" because they are in the official slide that states "interleaving" memory.

In computing, interleaved memory is a design which compensates for the relatively slow speed of dynamic random-access memory (DRAM) or core memory, by spreading memory addresses evenly across memory banks. That way, contiguous memory reads and writes use each memory bank in turn, resulting in higher memory throughput due to reduced waiting for memory banks to become ready for the operations.
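As a small illustration of the definition above, here is a Python sketch of the simplest form of interleaving, where consecutive blocks of addresses rotate through the banks. The 64-byte granule and the 4-bank count are hypothetical values picked for the example and are not taken from either console.

```python
def bank_for_address(address: int, num_banks: int, granule_bytes: int = 64) -> int:
    """Map a byte address to a bank by rotating fixed-size granules across banks."""
    return (address // granule_bytes) % num_banks


# Walk eight consecutive 64-byte blocks and record which bank serves each one.
sequential = [bank_for_address(addr, num_banks=4) for addr in range(0, 8 * 64, 64)]
print(sequential)  # [0, 1, 2, 3, 0, 1, 2, 3]
```

Because a contiguous read touches each bank in turn, no single bank has to service back-to-back requests, which is where the throughput gain comes from.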

HSA provides a pool of cache-coherent shared virtual memory that eliminates data transfers between components to reduce latency and boost performance. For instance, when a CPU completes a data processing task, the data may still require graphical processing. This requires the CPU to pass the data from its memory space to the GPU memory, after which the GPU then processes the data and returns it to the CPU. This complex process adds latency and incurs a performance penalty, but shared memory allows the GPU to access the same memory the CPU was utilizing, thus reducing and simplifying the software stack.

Cache coherency is a common tool employed in server environments to streamline operations, but HSA enables application of the technique anywhere one can find an SoC, including a broad range of devices in the client desktop, mobile, and tablet segments, among others.

I think it does.