LordOfChaos


Nvidia's RTX 4060 Ti and AMD's RX 7600 highlight one thing: Intel's $200 Arc A750 GPU is the best budget GPU by far

It's a genuinely great deal for the money and a decent budget gaming card.

Thots?

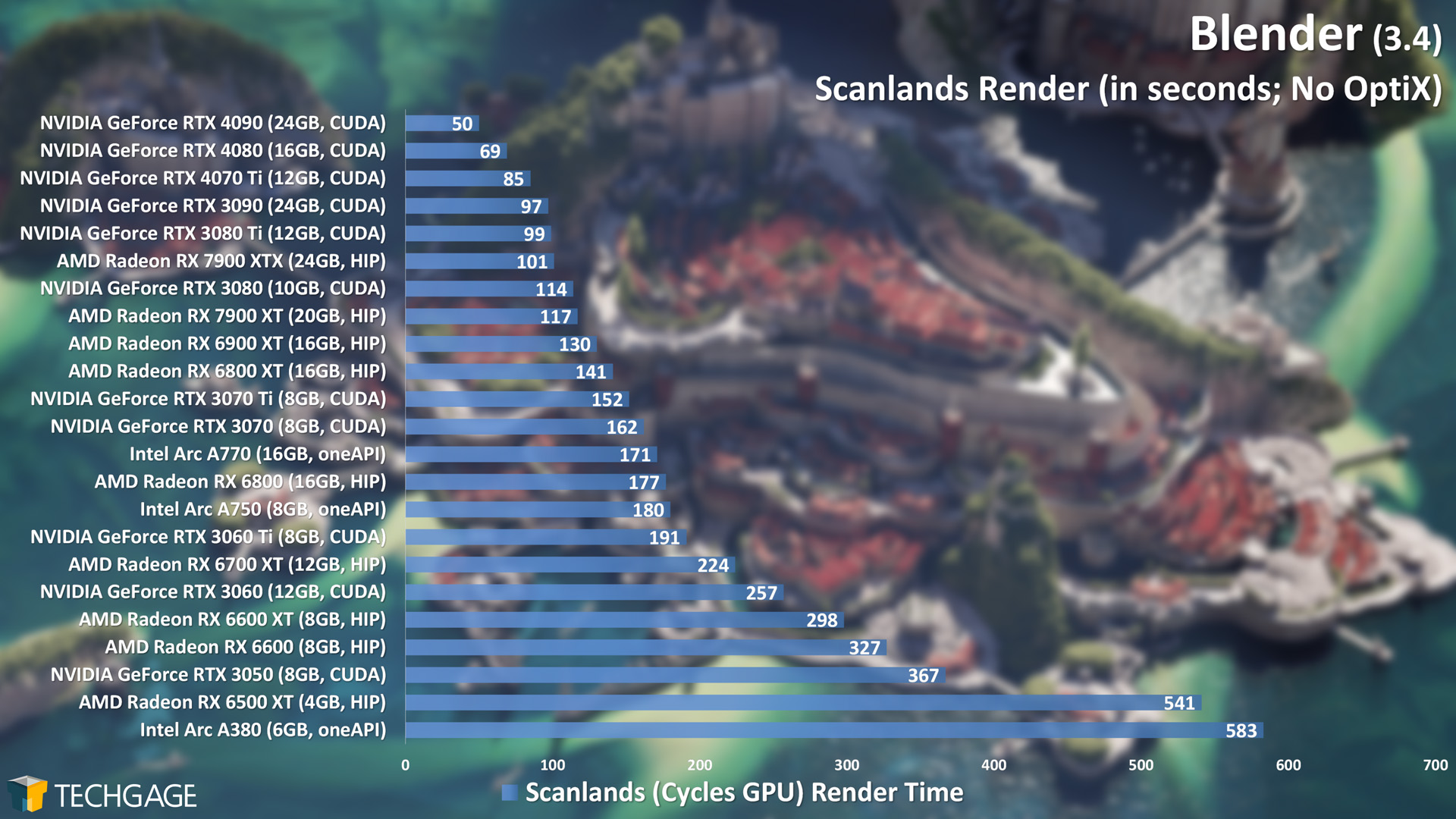

> can it be used for 3D/Rendering and such things?

It has Blender support. Here's an article covering some of that, although I'm not sure whether the driver improvements have had any effect on these.

www.pugetsystems.com


The 6700XT is, imo.

The A750 will work just fine for esports titles and the like, but if you plan to play upcoming AAA games then I don’t know if I would recommend an 8GB card at any price.

> I hope Intel's next generation of cards has massively increased performance at killer prices. I can't believe we're at the point where we're asking Intel of all companies to save us. This is how bad things have gotten.

It's funny and sad. I was thinking the same thing. If Intel is the one to force the others into being competitive again, that's all sorts of bizarro world.

www.forbes.com


> Thots?

Sorry, you're gonna have to find someone else. I'm sure they're around here.

> After the financials they released and the stock price surge from their AI lead, Nvidia is probably already working on an exit plan from consumer GPUs. AI is the major market for them going forward, and cloud will be where they bother with game hardware, maybe.

How do you figure that? Their hardware runs AI algorithms very well, and if they keep all the secret sauce AI hardware for themselves, they'll have a leg up on the competition.

> How do you figure that? Their hardware runs AI algorithms very well, and if they keep all the secret sauce AI hardware for themselves, they'll have a leg up on the competition.

Waste of silicon when they can sell it into AI datacentres at much better margins. Did you look at the numbers there?

> Waste of silicon when they can sell it into AI datacentres at much better margins. Did you look at the numbers there?

Oh, I see what you mean. I thought you were suggesting that they'd abandon hardware completely. Yes, if gaming becomes a smaller and smaller piece of their revenue pie, there is less incentive to focus on it. However, if it still prints money, then I don't see why they would abandon it completely.

"Nvidia’s earnings report estimated $11 billion in sales for the second quarter, more than 50% higher than analysts’ predictions."

Gaming used to be their largest revenue source. It was roughly 50/50 with data centre over the past few years, and now gaming is about 50% of data centre. The writing is on the wall.


Intel does have one advantage over AMD's current GPUs, as they already have pretty good dedicated units for ray-tracing and machine learning.

Sadly, AMD is still using shaders for ML and TMUs for RT.

The driver improvements have been extremely impressive for these cards, and the drivers continue to improve. However, I’d say the Intel cards are attractive because of how bad the others are, not due to how good they innately are.

can it be used for 3D/Rendering and such things?

techgage.com

