Are you trying to rewrite history? 60fps or higher at max settings has always been the rallying cry of gamers, and it has almost always come from the PC side. Did you not live through the so-called era of the "PC Master Race", "Lord Gaben" and "Steam"?

No console executive or game studio has ever prioritised framerate over graphics. When they did hit 60fps, it was because their technical headroom allowed it, as with games like COD.

Clearly you must come from a different dimension where 60fps was the standard back in the day. Go back to PS3/360-era threads and you'll see that the 30fps supporters were primarily console players, marketing execs and even the games media. Now you're inventing an imaginary oppression by the big bad 60fps movement. The irony is that the very fact we're still having this argument today completely contradicts you.

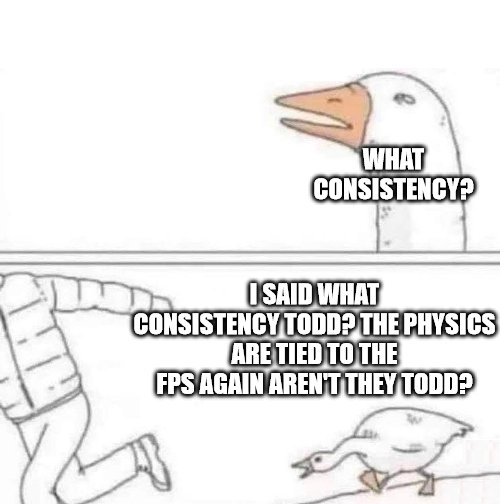

This is a much better response than the other nonsense replies out there. Interesting that you use Rage 2 as a comparison when we have Horizon: Forbidden West, unless that game is doing a heavier CPU workload at 60fps. But going back to Starfield, it still doesn't look any different from what Skyrim is doing. How is tracking an item in a house different from tracking one on a planet? Those co-ordinates should be stored the same way, since both games use the same base engine.

Secondly, these "interactive" items don't really come into play until you interact with them yourself, so how are they using CPU in the meantime? And even when you trigger a reaction, isn't the engine just updating their co-ordinates? Unless it needs to keep BOTH the original default location and the new location's data, and if so, that's some poor memory management. It's one thing to give an item to an NPC, who then uses it dynamically and has to be tracked, but if the item simply moves from one static location to another, it shouldn't cost more CPU overhead than required.
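Roughly what I mean, as a hypothetical sketch (this is NOT how Bethesda's engine actually works, and `ItemRegistry`, `move` and `tick` are made-up names for illustration): the default placements live in the game data, only items you've moved get an override entry, and per-frame work only touches items that are actively being simulated. Idle items cost nothing per frame, and memory grows only with the number of items you've actually moved.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ItemRegistry:
    # Hypothetical: default placements authored in the game data (read-only).
    defaults: Dict[int, Vec3]
    # Only items the player has moved get an entry here.
    moved: Dict[int, Vec3] = field(default_factory=dict)
    # Items currently being simulated (e.g. knocked over and still falling).
    active: Set[int] = field(default_factory=set)

    def position(self, item_id: int) -> Vec3:
        # The override wins if present, otherwise fall back to the default placement.
        return self.moved.get(item_id, self.defaults[item_id])

    def move(self, item_id: int, new_pos: Vec3) -> None:
        # Overwrite the current location; the default is never duplicated.
        self.moved[item_id] = new_pos

    def tick(self, step: Callable[[Vec3], Vec3]) -> int:
        # Per-frame work scales with active items, not with every item in the world.
        for item_id in self.active:
            self.moved[item_id] = step(self.position(item_id))
        return len(self.active)


world = ItemRegistry(defaults={1: (0.0, 0.0, 0.0), 2: (5.0, 0.0, 1.0)})
world.move(1, (3.0, 2.0, 0.0))
print(world.position(1))  # (3.0, 2.0, 0.0)
print(world.position(2))  # (5.0, 0.0, 1.0)
print(len(world.moved))   # 1
```

Moving an item from one shelf to another is just one dictionary write, and nothing runs for it afterwards unless it's put in the active set.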