I don't care much for raytracing myself at this point. The performance hit is still laughable, and to this day it offers nothing beyond being a tech demo. DLSS is interesting to me because it gives a performance and quality uplift, something I always want. So yeah, I agree with you on that.

Then, about Nvidia GPUs, Intel CPUs and drivers:

Nvidia GPUs did better because Nvidia actually made half-decent drivers and reached out to developers, something AMD used to do to make their products work well. Saying that Nvidia and Intel had a pact is simply false, because the gains were there on AMD CPUs as well. It was purely driver related, plus techniques like PhysX, which on Nvidia cards didn't have to be offloaded to the CPU, because AMD had no answer to it. It's AMD's fault, not Nvidia's, on this front. No excuses for AMD here; they failed hard.

And this is why I slammed AMD earlier in another thread, telling them to stop wasting people's time with side games like Dirt 5 and start focusing on juggernaut titles and how their cards perform there, because people in the market for a high-end GPU probably already have a high-end CPU in their PC setups. AMD should be working with Cyberpunk the way they did with GTA 5 and the AC / Battlefield games in the past, to get support going and make the game run well on their products on day one. Yet we hear nothing from them on this matter. That's a bad sign while Nvidia is shouting from the rooftops about how they support this and that.

It feels like 2015 all over again, when The Witcher 3 got HairWorks and AMD's TressFX was nowhere to be found. And no, that was not Nvidia's or CD Projekt's fault; it was AMD refusing to communicate at any level or make any effort. It took them 4-5 months to fix CrossFire, while SLI worked perfectly fine on day one. Hell, people even fixed the drivers for AMD themselves, and it still took AMD months to implement that in their own drivers.

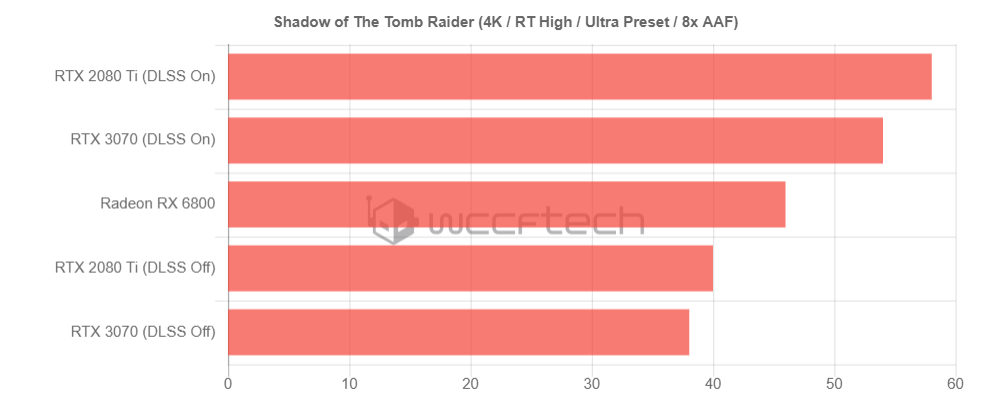

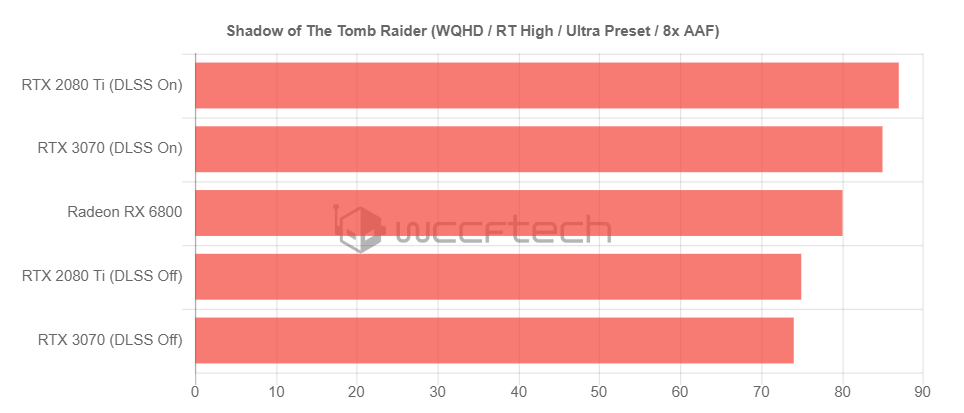

Look, I am not against progress, and I am not against techniques that improve frame rates. What I am against is shady advertising, where people think they are getting something but in reality they aren't. For example, if Nvidia simply showcases numbers with DLSS active, says that's how their card performs, and doesn't show it without DLSS, that's misleading. A game without DLSS won't show those gains, and the card can fall behind in the very games you buy it for without you specifically knowing this. People aren't that smart; they fall for these kinds of shit marketing tricks all day long and get burned by them.

Example:

Where's the chart without it? Nowhere to be found that day.

Another good example: Jimmy buys an AMD GPU because it's faster than the Nvidia card for 150 bucks. But it was tested by all the tech YouTubers on NASA-grade CPUs that cost as much as Jimmy's car, and when he gets home he realizes he gets half the frame rate because his CPU isn't up to the task. He would have gotten far better performance out of that Nvidia GPU instead. Jimmy is mad now.

And that will happen again, for the same reasons you mention. A good 6000 series benchmark that shows you exactly how it performs would test with a 5000 series CPU, a 3000 series CPU and an Intel CPU. Sadly, tech YouTubers won't realize this and will go with whatever floats their boat. The result is that they post really good numbers because they put a 5000 series chip in their test box, and that misleads Jimmy, who only has a 3000 series CPU or an Intel CPU (basically 75% are still on Intel), and there you go.

Then there's your argument that everybody will test with a 5000 series chip.

We don't know that. It could very well be that the chip doesn't perform the way AMD is advertising and falls below the 10900K, or Intel could release a new CPU or series that is faster again, and then it's bye-bye 6000 gains. Also, even if the 5000 series is only 1-5% faster, will benchmarkers bother to swap?

See how this is going?

Then about pricing.

The 3090 isn't worth 1500. Basing the price on that card is simply dumb as hell, just as the 2080 Ti wasn't worth 1200 bucks.

Then there's your point about Super Resolution being more performant, RT being better, etc. It's all wishful thinking at this point; we have nothing to indicate that's going to be the case. As it stands now, they are behind the curve and have done nothing to clear this up. Also, Nvidia could adopt that solution too if they wanted to.

Look, the cards are great. I love the 16GB model and I like their raw GPU performance, but I hate them locking their cards' full performance behind specific hardware.

Here's what AMD needs to do now, in my view.

Do a new presentation:

- showcase Super Resolution, its performance and its release date

- show raytracing performance with it

- explain how they focus on drivers and developers

- showcase a list of high-profile games people buy these cards for (hint: not Dirt 5) and show that you worked with the developers to make your cards work optimally.

Create confidence.

Because what I read on tech forums is nothing but people bitching about the price (from 400 to 1000), the CPU locking and their lack of confidence in AMD's drivers. That's why I said they needed sharp prices, but they didn't deliver.

We'll see, though.