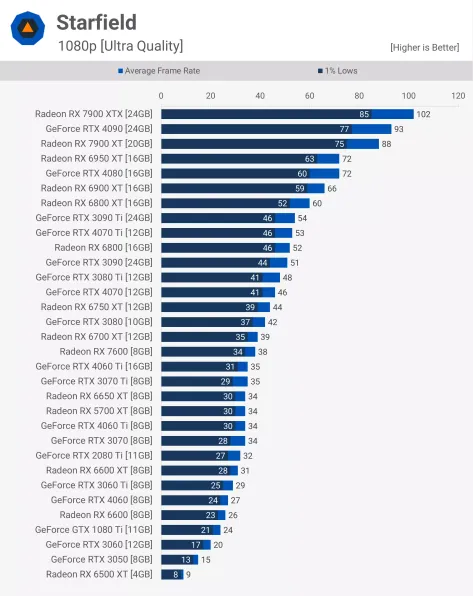

The game is broken on Nvidia GPUs: they're underutilised (low power draw for the given clocks and voltages). This affects both the RTX 3000 and 4000 series. My 3080 can't hold 60 FPS at 1080p, even with optimised high settings, and I'm not CPU bottlenecked.

12600K, 4100 CL17 memory, manually tuned

3080 with memory at 20 Gb/s, two core clock/voltage profiles, tested in the same spot:

| Resolution | 1700 MHz @ 750 mV | 1875 MHz @ 850 mV |
|---|---|---|
| 1080p | 52-53 FPS | 58-60 FPS |
| 540p (a quarter of the pixels of 1080p) | 73-74 FPS | 80 FPS |
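For a rough sense of the scaling, here's a quick Python check comparing the core clock increase with the FPS increase at each resolution, plus how little FPS you gain from a 4x drop in pixel count. It reduces each FPS range to its midpoint, which is my simplification rather than a separate measurement.

```python
# Rough scaling check for the 3080 numbers above.
# Assumption: each FPS range (e.g. 52-53) is reduced to its midpoint.
fps = {
    # resolution: {core clock in MHz: average FPS}
    "1080p": {1700: (52 + 53) / 2, 1875: (58 + 60) / 2},
    "540p": {1700: (73 + 74) / 2, 1875: 80.0},
}


def pct_gain(new: float, old: float) -> float:
    """Percentage increase from old to new."""
    return (new / old - 1) * 100


clock_gain = pct_gain(1875, 1700)
print(f"Core clock: +{clock_gain:.1f}%")

for res, by_clock in fps.items():
    fps_gain = pct_gain(by_clock[1875], by_clock[1700])
    print(f"{res} FPS: +{fps_gain:.1f}%")

# 540p has a quarter of the pixels of 1080p (960x540 vs 1920x1080).
pixel_ratio = (1920 * 1080) / (960 * 540)
fps_ratio = fps["540p"][1700] / fps["1080p"][1700]
print(f"{pixel_ratio:.0f}x fewer pixels -> only {fps_ratio:.2f}x the FPS at 1700 MHz")
```

That works out to about +10.3% core clock against +12.4% FPS at 1080p and +8.8% at 540p, so FPS tracks core clock almost one-to-one at both resolutions, while cutting the pixel count by 4x only gives about 1.4x the FPS.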

So the GPU is still the bottleneck even at 540p: FPS keeps scaling with core clock. That's the first time I've seen something like that.

It's as if one part of the Nvidia GPU is bottlenecking the rest of the chip, leaving the cores underutilised.