winjer

Gold Member

ATI times

That is quite impressive for the time, but it's RT using voxels. So it's probably more similar to Crytek's SVOGI.

Still, very impressive stuff for 2008.

Each frame of the scene was pre-computed into a baked voxel blob that we could re-light and move around in real time. (c)

Yes, this is with RT Overdrive

Maybe Imagination Technologies would've been the only option if AMD didn't have anything.

There is the matter of "if", as there was no GPU at the time that could do RT.

But even if there was, it would be very bad. Even Turing, which released in 2018, had limited RT capabilities. Now imagine what a GPU in 2012 would be capable of regarding RT.

Nvidia certainly has nice tech, but putting it in all consoles would mean $1000 consoles and $2000 GPUs. Cheap consoles have been a huge help in preventing GPU prices from getting much worse than they already are. Trust me, it could get worse when you consider how little Nvidia actually cares about the GPU market.

Hmm, yes, I hadn't thought of it from that angle. Nvidia would pretty much have total domination of gaming if that were the case and that wouldn't be good.

I do hope AMD are able to significantly improve their ray-tracing for the next consoles. Hopefully Sony & Microsoft can help them with that.

The A770 barely keeps up with the 3060 and the 6600 XT on average while using ~2x more area and more power than both. It only reaches the performance of the 3060 Ti in some games.

They are a generation behind with Alchemist, which you could say was a proof of concept.

The A770 keeps up with the 3060Ti and 6700XT......all of them are approx 400mm².

The A770 spanks the 6700XT in RT and upscaling tech.

Intel is approx 2 years behind Nvidia......AMD is 5 or more in terms of tech and die usage.

The cheap consoles perform like shit in RT and these days are actively holding PC gaming back because games are designed for the shit performance of the consoles.

The harsh reality is that there is absolutely nothing they can do because they are relying on AMD for that.

Lots of wishful thinking around on how Sony will use some ultra secret mega sauce and fix the situation with the “pro” version of the PS5, not to mention the 6, but it is not going to happen. Some of the devs might be able to remedy the situation, but what you see here with the 4090 is not coming to consoles for at least 10-15 years.

Weird that you replied to somebody mentioning MSFT and Sony (ie consoles) and concentrated so heavily on the discussion of RT on PS pro. Who has said path tracing will be remedied with new consoles or the pro?

The answer is that they won't use the same technology on console but will still have RT, and that itself is why there are so few games with path tracing to begin with; you can count them with half a hand. You should be hoping that path tracing does come to console sooner rather than later, otherwise you will just get games using alternatives to path tracing like Lumen, which perform just fine on consoles and AMD. I get that to some the urge to be better than others outweighs everyone being better together, but this isn't something you should be celebrating.

Who gives a fuck what technology they used to give you these frames? Call them fake frames, call them cheating. It enhances the motion experience and makes it feel and play like 120 fps or whatever high fps it can reach.

It runs 8K 120 fps ray traced on Nvidia!*

*with DLSS lowering internal resolution to 360p and applying fake frames

Exactly, a lot of console games have ray tracing already, and next gen and the pro consoles will remedy the performance to make it easier to have RT by default. Nobody has said the pro will remedy path tracing though.

Consoles already have hardware that can do ray tracing and path tracing. The problem is performance, as path tracing casts more rays with more bounces, even when Monte Carlo sampling is used to randomize and limit the number of rays cast.
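To put rough numbers on the "more rays and more bounces" point, here is a minimal Python sketch. It is not taken from any engine: the function names, the resolution, the sample count and the bounce count are all made up for illustration. The first function gives an upper bound on rays per frame, the second shows the basic Monte Carlo idea by estimating the integral of cos(theta) over a hemisphere (exact value: pi) from random directions.

```python
import math
import random


def rays_per_frame_upper_bound(width, height, samples_per_pixel, max_bounces):
    """Upper bound on rays per frame (hypothetical helper).

    Assumes every sample traces one primary ray plus at most
    max_bounces secondary rays, and ignores shadow rays.
    """
    return width * height * samples_per_pixel * (1 + max_bounces)


def estimate_cosine_integral(num_samples, rng):
    """Monte Carlo estimate of the integral of cos(theta) over a hemisphere.

    With uniform hemisphere sampling, cos(theta) is uniform in [0, 1)
    and the pdf is 1 / (2 * pi), so each sample contributes
    cos(theta) * 2 * pi. The exact answer is pi.
    """
    total = 0.0
    for _ in range(num_samples):
        cos_theta = rng.random()
        total += cos_theta * 2.0 * math.pi
    return total / num_samples


if __name__ == "__main__":
    # Illustrative numbers only: 1080p, 2 samples per pixel, 2 bounces.
    print(rays_per_frame_upper_bound(1920, 1080, 2, 2))

    rng = random.Random(1234)
    for n in (16, 256, 4096, 65536):
        estimate = estimate_cosine_integral(n, rng)
        print(n, abs(estimate - math.pi))
```

The error of this kind of estimator shrinks roughly with the square root of the sample count, so halving the noise costs about four times the rays. That is the performance problem in a nutshell.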

Yeah, that's my point: they will use more performant alternatives to path tracing to achieve realistic lighting, meaning fewer games get actual path tracing if consoles don't support it at acceptable framerates. He shouldn't be celebrating that. If consoles right now were capable of path tracing at good framerates, we would have a lot more games with path tracing on PC too.

Lumen on consoles does not use path tracing. Nor ray tracing. It uses SDFs, which are a much simpler and much less performance-intensive approach.
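For anyone wondering what tracing against an SDF looks like, here is a minimal sphere-tracing sketch in Python. To be clear, this is not Unreal Engine or Lumen code: the scene (one unit sphere), the function names and the step limits are all assumptions for illustration. The idea is that a signed distance field tells you how far the nearest surface is from any point, so you can safely step along the ray by that distance instead of testing triangles.

```python
import math


def sphere_sdf(point, radius=1.0):
    """Signed distance from point to a sphere centred at the origin."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) - radius


def sphere_trace(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """March along a ray using the SDF value as a safe step size.

    direction is assumed to be normalized. Returns the distance to the
    hit along the ray, or None if nothing is hit within the limits.
    """
    t = 0.0
    for _ in range(max_steps):
        point = (
            origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2],
        )
        dist = sdf(point)
        if dist < eps:
            return t  # close enough to the surface: report a hit
        t += dist  # nothing is closer than dist, so this step is safe
        if t > max_dist:
            break
    return None


if __name__ == "__main__":
    # Ray pointing straight at the sphere from 5 units away.
    print(sphere_trace((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), sphere_sdf))
    # Ray pointing away from the sphere.
    print(sphere_trace((0.0, 0.0, -5.0), (0.0, 1.0, 0.0), sphere_sdf))
```

A ray in empty space covers a lot of ground in a handful of steps, which is why this is cheap compared to intersecting detailed triangle geometry. The trade-off is that the distance field is only an approximation of the real scene.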

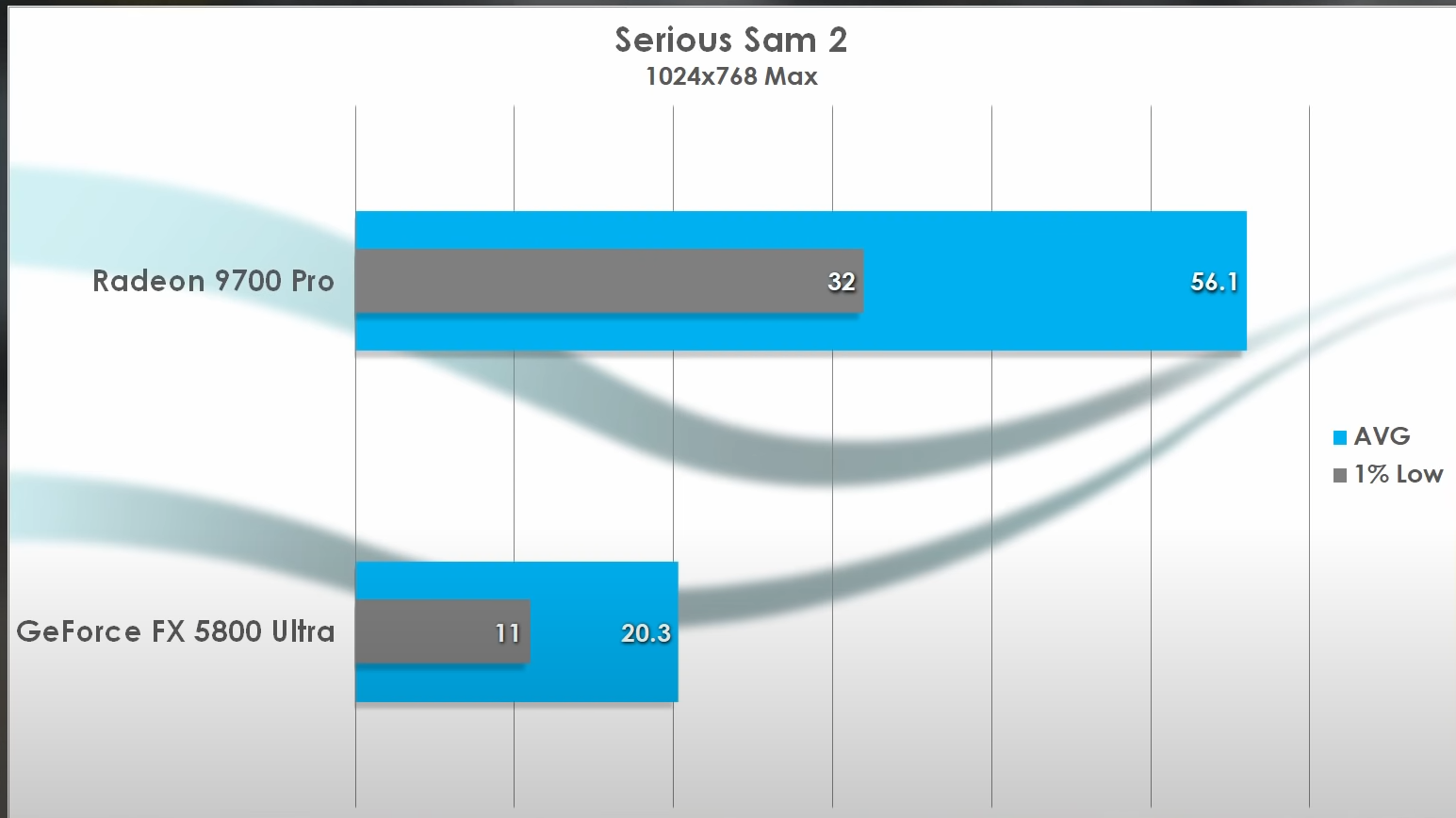

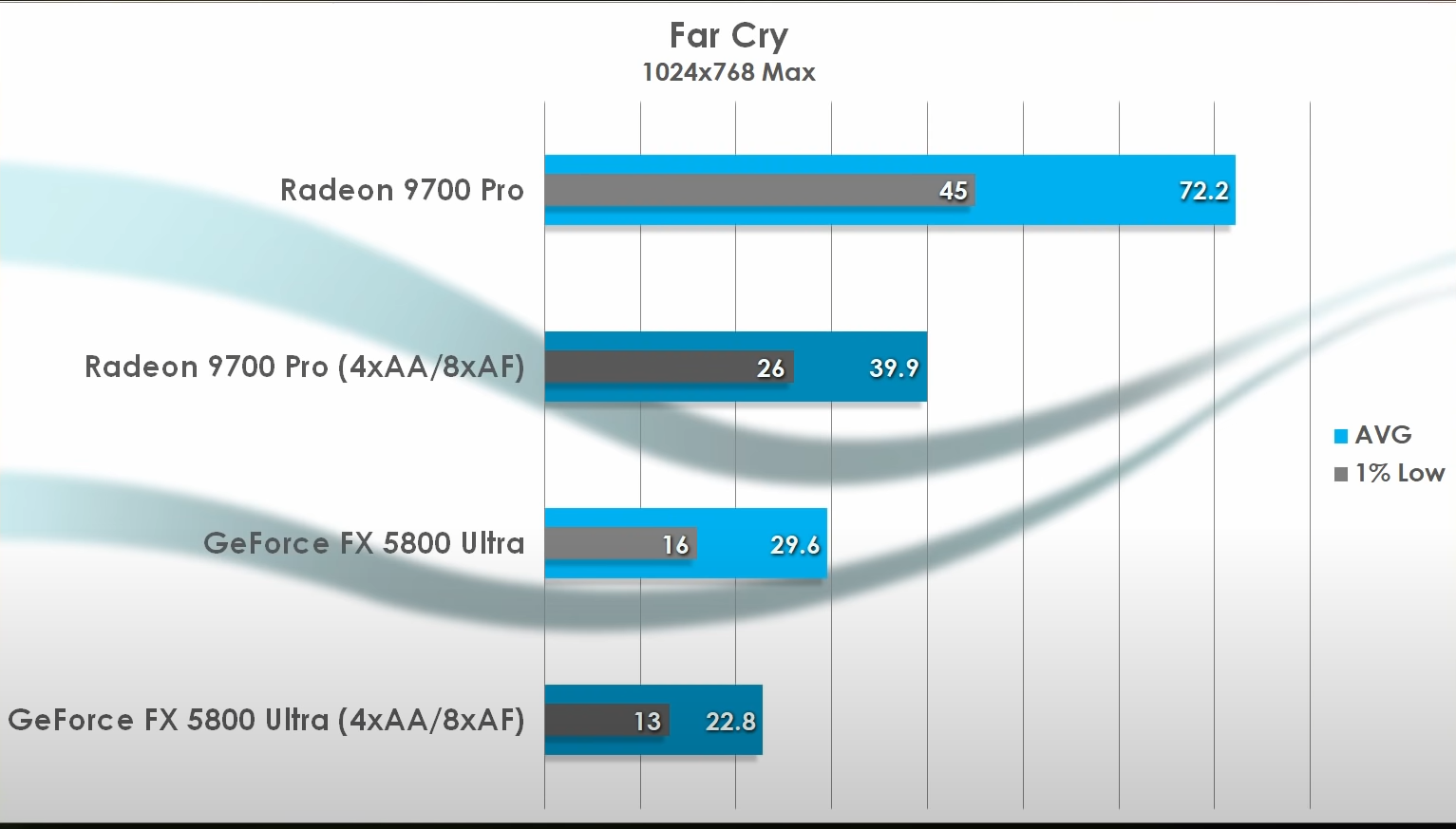

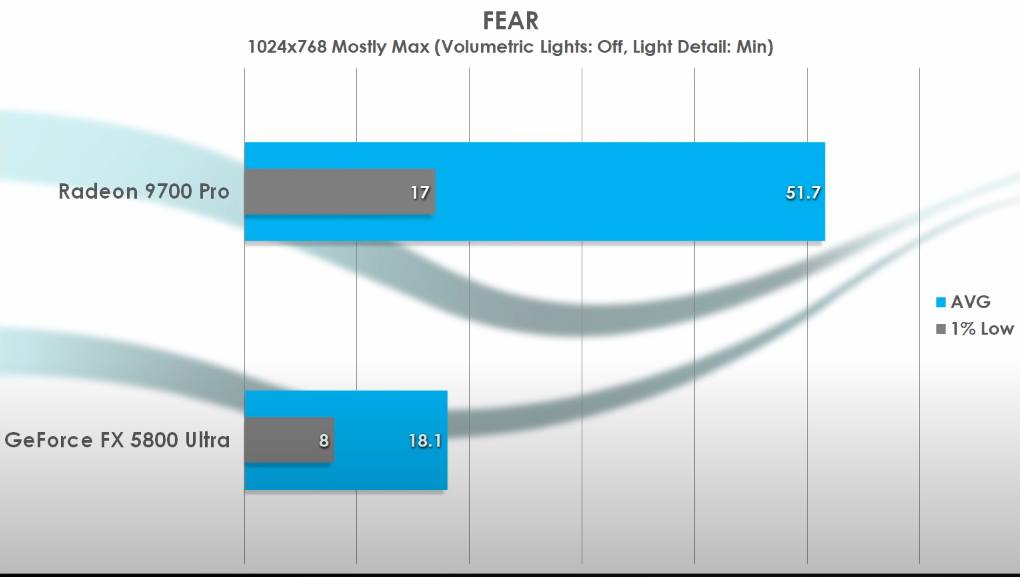

Jeez, I bought a 5800 Ultra, the worst card I have owned. DX9 perf was just terrible. The next card I had was the 6800 Ultra, which was a whole different story.

That RT in C2077 reminds me of those times.

I just don't enjoy DLSS in motion. For the games I've tested, it has introduced weird motion artifacts that are hard to put into words. I'll argue this sort of development is all wrong. Instead of optimizing their code/engines for higher resolutions, devs can now just rely on these crutches to carry their asses at 1080p resolution. It's a sad development.

People calling it weird names is just bitterness and salt to a high level.

As for lowering internal resolution: when a game running on DLSS has smoother edges and less aliasing than native, as it does in many games, then I'll take it.

This is just a sad post to be honest

Asking game devs to better optimize their shit games is a totally different subject, and one I agree with. You not liking DLSS is your feedback and your opinion only. Even die-hard AMD fans (not saying you are one) agree that DLSS is amazing. And many games have shown that it runs better than native when native is just TAA.