Velius

Prices are definitely coming down. At this rate I wouldn't be surprised if we start seeing RTX 30 series cards at retail prices within a few months.

And... I'm getting the fever. I WANT A RIG.

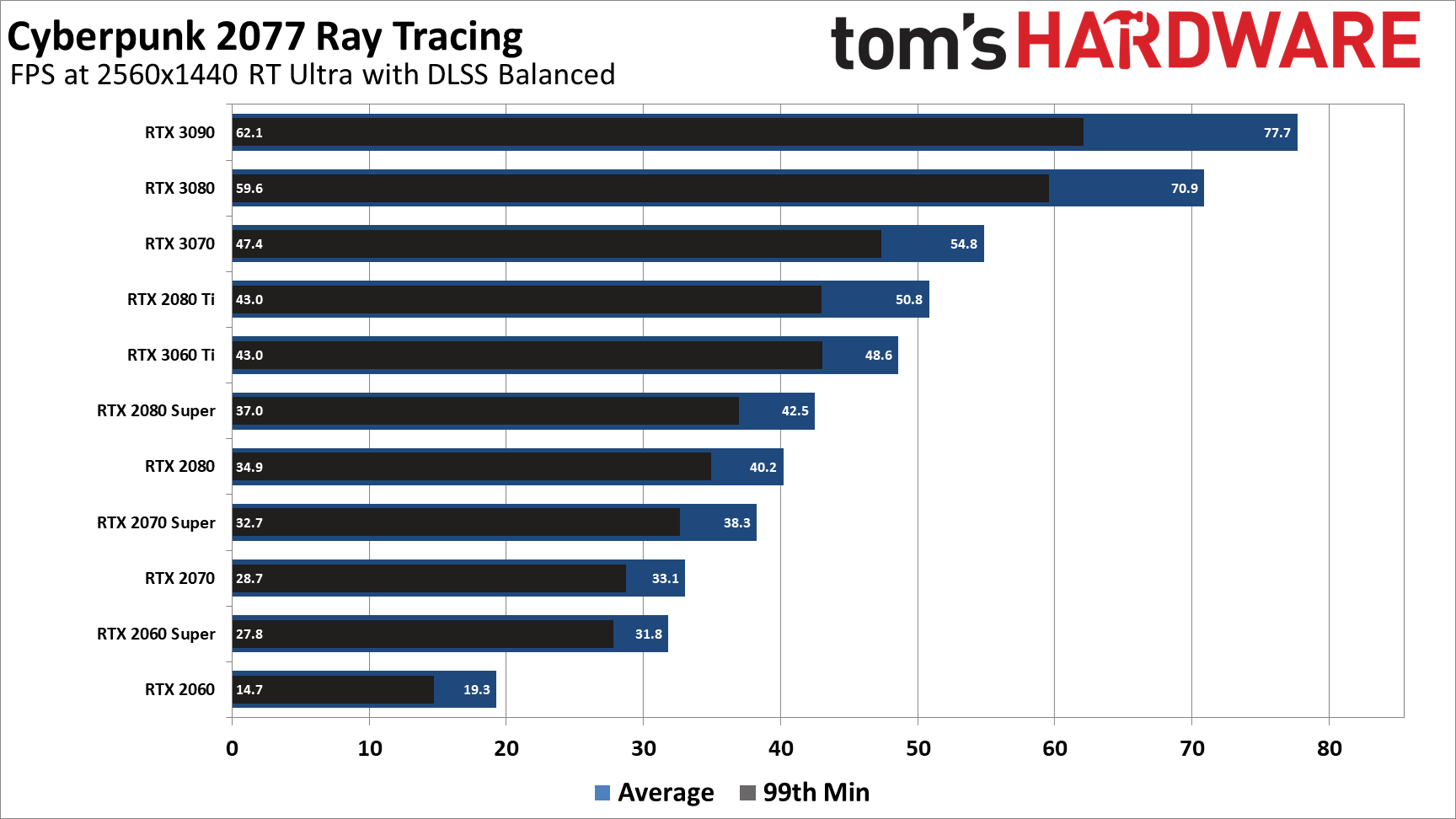

With that in mind, I want to build a rig that can run Cyberpunk 2077 maxed out at 1440p with ray tracing on, without ever dipping below 60 FPS. Here's what I'm thinking. Any criticism or advice is welcome; I'm looking to reduce bottlenecks wherever possible.

Below are my suggested specs to accomplish such a feat. But I'm running into all kinds of interesting puzzles here. I want the best AIB card possible, and lining them up side by side, the winner isn't obvious. So here are some contenders. Maybe you guys can give me some feedback. Hell, maybe a couple of you even own one of these and can share your experience.

First up, the THICASS four-slot card, the Gigabyte Aorus Xtreme.

The 3090 has 10,496 CUDA cores and a boost clock of 1860 MHz, while the 3080 Ti has 10,240 CUDA cores and a boost clock of 1830 MHz. So these two look fairly close in raw capability (the biggest spec difference is memory: 24 GB of GDDR6X on the 3090 versus 12 GB on the 3080 Ti). If I could get either one at retail, the 3080 Ti looks like the much better value: very similar specs for an enormously smaller price tag. Also, they BOTH have 3x 8-pin power connectors, so more power.
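To put a rough number on "fairly close", here is a minimal sketch that turns the quoted core counts and boost clocks into theoretical peak FP32 throughput (cores × 2 FLOPs per clock for a fused multiply-add × clock, the same formula behind NVIDIA's published TFLOPS figures). The prices are an assumption: NVIDIA's Founders Edition launch MSRPs ($1,499 and $1,199) stand in for retail, since the Aorus Xtreme cards list higher. This is a paper comparison only; real game FPS doesn't scale linearly with it, and GPU Boost often runs above the rated clock.

```python
# Paper FP32 throughput for Ampere: each CUDA core can retire one FMA
# (2 FLOPs) per clock, so TFLOPS = cores * 2 * clock_MHz / 1e6.
def fp32_tflops(cuda_cores: int, boost_mhz: int) -> float:
    """Theoretical peak FP32 TFLOPS from core count and rated boost clock."""
    return cuda_cores * 2 * boost_mhz / 1_000_000

# Rated specs for the two Gigabyte Aorus Xtreme cards quoted above.
aorus_3090 = fp32_tflops(10_496, 1860)
aorus_3080ti = fp32_tflops(10_240, 1830)

# Assumption: NVIDIA Founders Edition launch MSRPs stand in for retail prices;
# the Aorus Xtreme cards themselves are priced higher than this.
msrp_3090 = 1499
msrp_3080ti = 1199

perf_gap = aorus_3090 / aorus_3080ti - 1   # extra paper throughput of the 3090
price_gap = msrp_3090 / msrp_3080ti - 1    # extra cost of the 3090

print(f"3090:    {aorus_3090:.1f} TFLOPS")
print(f"3080 Ti: {aorus_3080ti:.1f} TFLOPS")
print(f"3090 is {perf_gap:.1%} faster on paper for {price_gap:.1%} more money")
```

On these assumptions the 3090 comes out about 4.2% ahead on paper for about 25% more money, which backs up the value argument for the 3080 Ti (the 3090's extra 12 GB of VRAM isn't captured by this number).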

Then we have the not quite as thicass but still rather sexy ASUS ROG Strix OC. Again, 3090 and 3080 Ti.

The CUDA core counts for the 3080 Ti and 3090 are identical to their Gigabyte Aorus counterparts (the core count is fixed by the GPU itself), but the clock speeds differ a bit.

The ROG Strix OC 3090 is rated at 1890 MHz in "OC Mode" and 1860 MHz in "Gaming Mode." I don't know why Gaming Mode would be lower than OC Mode, but there it is.

The 3080 Ti is clocked lower, at 1845 MHz in "OC Mode" and 1815 MHz in "Gaming Mode." It should be noted that these two also have 3x 8-pin connectors.

So which is better? I mean for sheer numbers, it looks like 3090 ROG Strix OC, right? But how much more oomph are we talking about? How much of a difference does 30 MHz make?
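A quick back-of-the-envelope check on the 30 MHz question, as a sketch: with the core count fixed, peak throughput scales at most linearly with clock, so the percentage clock gain is roughly an upper bound on the FPS gain (memory bandwidth, CPU limits and so on eat into it). The helper name `clock_gain` is just for illustration; the clocks and core counts are the rated figures quoted above.

```python
# How much can a clock bump alone buy? With the CUDA core count fixed, peak
# throughput scales linearly with clock, so the percentage clock gain is
# roughly an upper bound on the FPS gain.
def clock_gain(base_mhz: int, boosted_mhz: int) -> float:
    """Fractional increase going from base_mhz to boosted_mhz."""
    return boosted_mhz / base_mhz - 1

# ROG Strix OC 3090: "Gaming Mode" 1860 MHz -> "OC Mode" 1890 MHz
print(f"30 MHz on 1860 MHz: {clock_gain(1860, 1890):.1%}")

# ROG Strix OC 3090 vs 3080 Ti, both in OC Mode: cores x clock ratio
strix_ratio = (10_496 * 1890) / (10_240 * 1845)
print(f"Strix 3090 vs Strix 3080 Ti on paper: {strix_ratio - 1:.1%}")
```

So 30 MHz is about 1.6%, which at 60 FPS works out to roughly one extra frame per second at best, and the Strix 3090 is about 5% ahead of the Strix 3080 Ti on paper.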

Here is my proposed build for Cyberpunk 2077. Can any gurus tell me whether it will run the game at:

1) No less than 60 FPS

2) Max settings

3) 1440p

4) Ray Tracing ON
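Requirement 1 is stricter than it looks: "never below 60 FPS" means every single frame has to render within 1000 / 60 ≈ 16.67 ms, so an average FPS figure alone can't confirm it. A minimal sketch of that check, assuming you have a list of per-frame render times in milliseconds exported from some frame-time capture tool (the function name is hypothetical):

```python
# "Never below 60 FPS" translates into a per-frame time budget:
# 1000 ms / 60 frames = 16.67 ms. Any single frame slower than that
# is a dip below 60 FPS, so the check looks at the worst frame,
# not the average.
TARGET_FPS = 60
BUDGET_MS = 1000 / TARGET_FPS

def meets_target(frame_times_ms: list[float]) -> bool:
    """True if every captured frame rendered within the 60 FPS budget."""
    return max(frame_times_ms) <= BUDGET_MS

print(f"Frame budget at {TARGET_FPS} FPS: {BUDGET_MS:.2f} ms")
```

For example, with made-up frame times `[15.2, 16.1, 18.4]` the average is about 16.57 ms (roughly 60.4 FPS), yet `meets_target` returns `False` because the 18.4 ms frame is a dip below 60.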

Ryzen 7 5800X

2x32GB Corsair Vengeance DDR4

Gigabyte Aorus X570 Elite WiFi

Gigabyte Aorus Xtreme RTX 3090

https://www.newegg.com/amd-ryzen-7-5800x/p/N82E16819113665

https://www.newegg.com/corsair-64gb-288-pin-ddr4-sdram/p/N82E16820236601?quicklink=true

https://www.newegg.com/gigabyte-x570-aorus-elite-wifi/p/N82E16813145165

https://www.newegg.com/gigabyte-geforce-rtx-3090-gv-n3090aorus-x-24gd/p/N82E16814932340