Makes no sense for NV to go through the hassle of introducing a new connector unless they need it.

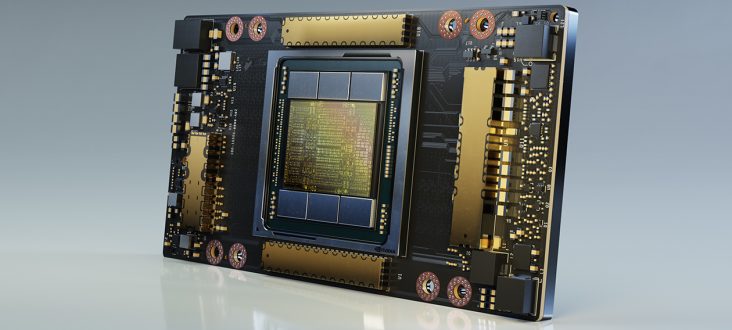

NV's biggest (datacenter, machine-learning-oriented) chip, the A100, was made at TSMC, so they wouldn't have done that if Samsung 8nm could deliver better or at least comparable results.

Rumors put AMD's biggest chip at 505 mm² and 80CUs. The 2080Ti is about 40% faster than the current 40CU chip.

Rumors also say that the fastest gaming Ampere card struggles to be 40% faster than the 2080Ti (which would make it 96% faster than AMD's 40CU chip) and that its die is bigger than 600 mm², on Samsung 8nm (which is more of a 10nm rebrand with some improvements).
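The 96% figure comes from compounding the two rumored ratios rather than adding them: a 40% lead stacked on another 40% lead multiplies. Here $P$ is just a hypothetical label for relative gaming performance, and both 1.4 factors are the rumored numbers above, not measurements.

$$
\frac{P_{\text{Ampere}}}{P_{40\text{CU}}}
= \frac{P_{\text{Ampere}}}{P_{\text{2080Ti}}} \cdot \frac{P_{\text{2080Ti}}}{P_{40\text{CU}}}
= 1.4 \times 1.4 = 1.96
$$

So about 96% faster, not the 80% you'd get by simply adding the two percentages.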

Lisa explicitly said that AMD will roll out high end GPUs.

So, at the end of the day:

A given: AMD will have a card faster than the 3080. The Radeon team is in full swing, no more starved budgets.

Possible, but not very likely: AMD beating the 3080Ti/3090.

I'd say 40CU vs 56CU in XSeX is. Sony is forced to squeeze a bit more perf, with a lot more power consumption.

Cheers, that's cool, I might go back to AMD if the price/performance ratio is right. My last AMD card was a 7870; I've been on Nvidia since the 970, though.

Also, have you guys ever heard of something like this before?

So I bought a Club3D 7870 from scan.co.uk, it arrived, and I put it in the PC, connected the power to the card, etc. I start using it, and as soon as it takes on a load it black-screens and shuts down the PC. I open the case to check it's all seated right and that the power connectors are securely connected. Same problem, so I email Club3D to make sure I'm not doing something stupid before I send it back to scan for a replacement.

They give me basic instructions, which of course include: make sure you have a proper PSU and that you connect the GPU power connectors. The pictures show 2 x 6-pin connectors, and when I look at the card it has only ONE. I tell them this and they don't believe me; they just keep sending basic setup/troubleshooting information. After a few emails I send them a pic of the card's power connectors and they immediately change their tune, saying "Please return the card to scan and we'll sort you out with a new one". Before returning it I checked in GPU-Z: it identifies as a 7870 and NOT a 7850 (which has 1 x 6-pin), and the branding is all 7870, from the box to the card itself.

So I ended up getting it sorted and it was fine (kinda, more on that below). I guess the card just wasn't getting enough power from a single 6-pin plus the PCIe slot, so it crapped out under load.
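A rough power budget shows why that guess is plausible. This is a sketch, not a diagnosis: the connector limits are the PCIe ratings (x16 slot 75 W, 6-pin 75 W, 8-pin 150 W), while the board power figures for the 7850 and 7870 are approximate typical values I'm assuming from AMD's launch specs. Real cards can spike above typical board power, and the shutdown could also have been PSU protection kicking in. `power_budget` and `headroom` are hypothetical helpers for illustration.

```python
# PCIe power ratings per source, in watts.
CONNECTOR_WATTS = {"slot": 75, "6pin": 75, "8pin": 150}

# ASSUMPTION: approximate typical board power, not measured values.
BOARD_POWER_W = {"HD 7850": 130, "HD 7870": 175}


def power_budget(six_pins: int = 0, eight_pins: int = 0) -> int:
    """In-spec power available to a card: slot plus auxiliary connectors."""
    return (CONNECTOR_WATTS["slot"]
            + six_pins * CONNECTOR_WATTS["6pin"]
            + eight_pins * CONNECTOR_WATTS["8pin"])


def headroom(card: str, six_pins: int = 0, eight_pins: int = 0) -> int:
    """Positive: budget covers board power. Negative: card is starved under load."""
    return power_budget(six_pins, eight_pins) - BOARD_POWER_W[card]


if __name__ == "__main__":
    for card, pins in [("HD 7870", 1), ("HD 7870", 2), ("HD 7850", 1)]:
        print(f"{card} with {pins} x 6-pin: budget {power_budget(six_pins=pins)} W, "
              f"headroom {headroom(card, six_pins=pins):+d} W")
```

Under these assumptions a 7870 on one 6-pin comes up about 25 W short of its typical draw, while two 6-pins leave about 50 W spare, and a 7850 fits comfortably on one, which lines up with why the 7850 gets away with a single connector.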

Do you all think that was just improper manufacturing? Or that they mixed up a 7850, put the 7870 firmware on it, branded it as a 7870 and then put it in a 7870 box? The latter seems SO unlikely, because that's a lot of mix-ups, but with the former I don't even know if it's possible for a GPU to be manufactured wrongly in that way.

The worst part was that they had no more 7870s at that price, so I had to go with the 7850 instead. Still good, but I always missed that 7870...

Anyone ever heard of anything like that?