12-pin Power Connector: It's Real and Coming with NVIDIA Ampere GPUs

CuNi

Member

To be fair, those PSU wattage recommendations are pretty generous. As long as you don't mess with the 12v line recommendations...

Also, 550W PSU != 550W PSU.

You have a varying amount of efficiency when under load, that's what those "Bronze/Silver" etc. labels mean.

Weirdly, very few people realize that, technically, the difference between Bronze and Platinum or so can mean fully powering your system or limiting its power!

Pagusas

Elden Member

Fuck our electrical bills, amirite?

Yeah, that extra 70 cents a month sure is going to break the bank.

JohnnyFootball

GerAlt-Right. Ciriously.

It sure is looking like those hoping Ampere would be affordable are about to get their hearts broken.

theclaw135

Banned

This isn't what I had in mind for disrupting the PC market. I'd like to see, for instance, how laptop memory modules would perform on lower-end desktops. Four 288-pin memory slots take up a great deal of space, yet there's little point in most users having more than 32GB tops.

PhoenixTank

Member

Also, 550W PSU != 550W PSU.

You have a varying amount of efficiency when under load, that's what those "Bronze/Silver" etc. labels mean.

Weirdly, very few people realize that, technically, the difference between Bronze and Platinum or so can mean fully powering your system or limiting its power!

Not quite. Trash tier PSUs (and I mean potential toaster tier) often can't provide their specced wattage. A 750W toaster might only be able to get up to 500W.

Properly rated power supplies will draw whatever they need (within reason) to power components within their spec. The ratings system refers to efficiency, as you mentioned: a 500W Bronze will draw more power from the wall than a 500W Titanium at a given load (say 300W for the PC), but both will provide that 300W to the PC. A proper Bronze won't be stuck at 475W max output vs 495W for a Titanium.

Ratings are for consumption rather than supply.

Not that you should continually run power supplies at 100% either!

Lower efficiency results in more heat, but that is secondary. If the heat causes a total failure, or a failure to provide power, the unit has not met its spec, i.e. the toaster tier.
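To put rough numbers on the Bronze vs Titanium point, here is a minimal sketch. The efficiency values are assumptions for illustration only (roughly the 80 PLUS 115 V targets at 50% load); real units vary with load and model, and `wall_draw` is just a made-up helper name.

```python
# Assumed, illustrative efficiencies; not measurements of any real PSU.
ASSUMED_EFFICIENCY = {"Bronze": 0.85, "Titanium": 0.94}


def wall_draw(dc_load_watts: float, efficiency: float) -> float:
    """AC power pulled from the wall to deliver dc_load_watts to the PC."""
    return dc_load_watts / efficiency


load = 300.0  # watts delivered to the components, identical for both units
for tier, eff in ASSUMED_EFFICIENCY.items():
    drawn = wall_draw(load, eff)
    # The difference between wall draw and delivered power ends up as heat.
    print(f"{tier}: delivers {load:.0f} W, draws {drawn:.0f} W, "
          f"loses {drawn - load:.0f} W as heat")
```

Both units hand the PC the same 300W; only the amount pulled from the wall (and dumped as heat) differs.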

DonJuanSchlong

Banned

Power supplies are like amplifiers. You have different levels of efficiency, which would correlate to bronze, silver, gold, and platinum. You are paying extra for the efficiency, which is worth the money to many people. Some platinums can be overpriced though.

GHG

Member

Power supplies are like amplifiers. You have different levels of efficiency, which would correlate to bronze, silver, gold, and platinum. You are paying extra for the efficiency, which is worth the money to many people. Some platinums can be overpriced though.

Platinums are definitely in the realm of diminishing returns.

Unless you run a business with a load of PCs running 24/7 and you're looking to maximise margins, the extra efficiency they offer is not worth it.

DonJuanSchlong

Banned

Platinums are definitely in the realm of diminishing returns.

Unless you run a business with a load of PCs running 24/7 and you're looking to maximise margins, the extra efficiency they offer is not worth it.

Definitely. I won't get anything higher than gold. Especially as most come with warranties that outlast the actual PSU.

llien

Member

Yeah, that extra 70 cents a month sure is going to break the bank.

Say, 20 hours per week gaming, 50 weeks => 1000 hours per year.

1 kWh costs between 0.25 and 0.30€ where I live.

So for each additional watt of power drawn by the card, you pay the said 0.25-0.30€ annually.

Not end of the world, but not 70 cents a month either.

It can get much worse if it forces you to use air conditioning.
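The arithmetic above can be sketched as follows. The hours and prices are the ones from the post; the 100W example at the end is a hypothetical figure picked only to show the scaling, and `annual_cost_eur` is an illustrative helper name.

```python
def annual_cost_eur(extra_watts: float, hours_per_year: float,
                    eur_per_kwh: float) -> float:
    """Yearly cost of drawing extra_watts for hours_per_year."""
    kwh = extra_watts * hours_per_year / 1000.0  # W * h -> kWh
    return kwh * eur_per_kwh


hours = 20 * 50  # 20 h/week over 50 weeks = 1000 h/year
for price in (0.25, 0.30):
    per_watt = annual_cost_eur(1, hours, price)
    # Hypothetical card that draws 100 W more than the one it replaces.
    per_100w = annual_cost_eur(100, hours, price)
    print(f"{price:.2f} EUR/kWh: {per_watt:.2f} EUR per extra watt, "
          f"{per_100w:.2f} EUR per extra 100 W, per year")
```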

AquaticSquirrel

Member

If this is true, along with the rumours of Ampere cards running hot with high power draw, then it will be interesting to see how people react.

When AMD had more powerful cards than Nvidia, but those cards ran hot/high power draw everyone complained saying they were bad and inefficient cards. It was better to have a less powerful but more efficient card.

This continued as Nvidia eventually overtook AMD on the power front but with AMD still having high power draw/hot running cards. We heard endlessly about it during tech reviews from official tech sites and Youtubers.

If new AMD cards are within spitting distance of Ampere but with better efficiency and lower power draw/cooler running, I wonder whether everyone will slam Nvidia cards for running too hot and being inefficient to the degree they did in the past for AMD?

If I was a betting man, I would say no. Nvidia's marketing money helped grant them the leading position in the market they enjoy today. That mindshare does not evaporate overnight. If the above all comes true I would imagine we will see excuses galore from "influencers", tech sites and gamers alike. Suddenly it will be A-Ok when Nvidia does it. Sure it might get mentioned in passing and then quickly dismissed or downplayed but it definitely won't get slammed the way AMD cards were.

It is similar to all the gamers you see who say things like "I hope AMD becomes competitive again with Nvidia so I can buy a new Nvidia card for less money!11". If they were truly competitive in the hypothetical at the top end then why wouldn't you consider the alternative? The power of marketing and mindshare. "The way it was meant to be played" etc.

Anyway, something worth thinking about and keeping an eye on if all the rumours come to pass.

Coulomb_Barrier

Member

W1zzard at TechPowerUp, a famously Nvidia-biased site I might add, is worried the 3080 Ti might draw over 400W! That's fucking crazy if true. Nvidia may have scored an own goal going with Samsung's 8nm process; TSMC are just too good right now, so Nvidia's dispute with them is really braindead.

ZywyPL

Banned

I think you guys forget how hot Navi cards already are, despite being made on a 7nm process with only 36-40 CUs. AMD won't be able to step it up all of a sudden while doubling the CU count at the same time, as rumored. I'd say they are in the worse position as far as power consumption and heat go, and always have been since GCN. As for the Ampere cards themselves, if they allow for 100+ FPS with RT on, people will jump on them like crazy and never ask a single question; that's just how it works and always has. Then again, the A100 is 400W, 250W in the PCIe version (https://www.nvidia.com/en-us/data-center/a100/), so I cannot imagine or explain what any extra wattage would be needed for. They will obviously add RT cores to the consumer cards, but those will replace some of the CUDA cores, so the end result won't change. Bottom line: absolutely nothing will change as long as AMD is two generations behind. As long as NV is unquestionably the fastest out there, they will be able to do whatever they want, and consumers will have to suck it up. TBH, I wouldn't be surprised if Intel catches up with NV before AMD does if RDNA2 doesn't close the gap this time around. How many tries, how many generations, can we keep hoping AMD will finally give them some competition?

The Skull

Member

I think you guys forget how hot Navi cards already are

Blower cards, sure, and hopefully AMD step away from these horrendous designs, but decent aftermarket cards? Fairly cool and quiet, along with similar power draw to their "competition". Certainly a lot better than GCN was. Drivers have been their biggest issue with the Navi cards though.

SantaC

Gold Member

W1zzard at TechPowerUp, a famously Nvidia-biased site I might add, is worried the 3080 Ti might draw over 400W! That's fucking crazy if true. Nvidia may have scored an own goal going with Samsung's 8nm process; TSMC are just too good right now, so Nvidia's dispute with them is really braindead.

AMD struck gold with TSMC

CuteFaceJay

Member

Is this a prank? I thought they were meant to be more power friendly as time moves on?

llien

Member

From the leaks, the upcoming tricks a la Fermi era:

1) Using forced DLSS upscaling

2) Tessellation 2.0 with RT

3) Driver issues trolling

4) "yeah, it's faster... and cooler... and cheaper... but <features>"

Regardless, AMD will push forward next gen and beyond, fueled by R&D team FINALLY being budgeted well, Huang's TSMC fuckup and

...two generations behind...

Why do you guys sound like people from a parallel universe? What the heck is "two generations behind"?

Navi has perf/W parity with Turing, and beats AMD's own Vega, including the 7nm piece, by 30%+.

Navi is also a hell of a small chip, at only 250 mm².

But counting 7nm DUV vs 12nm, it is fair to say AMD, despite major leaps forward, is still behind on the perf/watt front, but nowhere near "two generations" behind.

forget how hot Navi cards already are

Doesn't seem like you have a clue how much they actually consume.

I think you guys forget how hot Navi cards already are, despite being made on a 7nm process with only 36-40 CUs. AMD won't be able to step it up all of a sudden while doubling the CU count at the same time, as rumored. I'd say they are in the worse position as far as power consumption and heat go, and always have been since GCN. As for the Ampere cards themselves, if they allow for 100+ FPS with RT on, people will jump on them like crazy and never ask a single question; that's just how it works and always has. Then again, the A100 is 400W, 250W in the PCIe version (https://www.nvidia.com/en-us/data-center/a100/), so I cannot imagine or explain what any extra wattage would be needed for. They will obviously add RT cores to the consumer cards, but those will replace some of the CUDA cores, so the end result won't change. Bottom line: absolutely nothing will change as long as AMD is two generations behind. As long as NV is unquestionably the fastest out there, they will be able to do whatever they want, and consumers will have to suck it up. TBH, I wouldn't be surprised if Intel catches up with NV before AMD does if RDNA2 doesn't close the gap this time around. How many tries, how many generations, can we keep hoping AMD will finally give them some competition?

The A100 is using TSMC's 7nm process though, not Samsung's 8nm. The 5700 XT was rated at 225W, and with the 50% performance per watt improvement AMD have promised, they should be able to double the 5700 XT's performance for 300W. I think if Nvidia is using the 8nm process, it is conceivable AMD could match them in performance per watt, with Nvidia beating them in absolute performance by pushing above 300W.
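The "double the 5700 XT for 300W" figure follows directly from the 225W rating and the promised 50% perf/W gain. A minimal sketch, assuming the gain applies uniformly at the new operating point (in practice it depends on clocks and voltage); `power_for_target` is a made-up helper:

```python
def power_for_target(base_power_w: float, perf_multiplier: float,
                     perf_per_watt_gain: float) -> float:
    """Power needed to hit perf_multiplier x the baseline performance
    when each watt delivers (1 + perf_per_watt_gain) x as much work."""
    return base_power_w * perf_multiplier / (1.0 + perf_per_watt_gain)


# 5700 XT baseline of 225 W, 2x the performance, +50% perf/W.
needed = power_for_target(225.0, 2.0, 0.5)
print(f"Estimated board power for 2x a 5700 XT: {needed:.0f} W")
```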

Ascend

Member

I think you guys forget how hot Navi cards already are, despite being made on a 7nm process with only 36-40 CUs. AMD won't be able to step it up all of a sudden while doubling the CU count at the same time, as rumored. I'd say they are in the worse position as far as power consumption and heat go, and always have been since GCN. As for the Ampere cards themselves, if they allow for 100+ FPS with RT on, people will jump on them like crazy and never ask a single question; that's just how it works and always has. Then again, the A100 is 400W, 250W in the PCIe version (https://www.nvidia.com/en-us/data-center/a100/), so I cannot imagine or explain what any extra wattage would be needed for. They will obviously add RT cores to the consumer cards, but those will replace some of the CUDA cores, so the end result won't change. Bottom line: absolutely nothing will change as long as AMD is two generations behind. As long as NV is unquestionably the fastest out there, they will be able to do whatever they want, and consumers will have to suck it up. TBH, I wouldn't be surprised if Intel catches up with NV before AMD does if RDNA2 doesn't close the gap this time around. How many tries, how many generations, can we keep hoping AMD will finally give them some competition?

Not that you're wrong, but if the console GPUs are anything to go by, they made some great advances in power consumption. Navi cannot run over 2GHz, but the PS5, a console, can. It actually bodes well for AMD.

llien

Member

The A100 is using TSMC's 7 nm process though, not Samsung's 8 nm. The 5700 XT was rated at 225W, and with the 50% performance per watt improvement AMD have promised, they should be able to double the 5700 XT's performance for 300W. I think if Nvidia is using the 8nm process, it is conceivable AMD could match them in performance per watt, with Nvidia beating them in absolute performance by pushing above 300W.

Bigger cards are notoriously more energy efficient (as they have lower clocks):

FranXico

Member

Not that you're wrong, but, if the console GPUs are anything to go by, they made some great advances in power consumption. Navi cannot run over 2 GHz, but the PS5, a console, can. It actually bodes well for AMD.

PC graphics cards coming out later (maybe even this holiday) with 80 CUs at over 2GHz are going to be nuts.

ZywyPL

Banned

Not that you're wrong, but, if the console GPUs are anything to go by, they made some great advances in power consumption. Navi cannot run over 2 GHz, but the PS5, a console, can. It actually bodes well for AMD.

But both consoles are much larger than ever before, with both companies putting strong emphasis on cooling, which was also unheard of before, so that gives a hint that the power RDNA2 gives doesn't come so freely.

Ascend

Member

But both consoles are much larger than ever before, with both companies putting strong emphasis on cooling, which was also unheard of before, so that gives a hint that the power RDNA2 gives doesn't come so freely.

The image is very small, but the XSX PSU is rated for 315W;

That means you're running the whole system on under 300W, because no one designs a system to load the PSU to its max capacity. You have:

GDDR6

SSD

CPU

I/O for everything else

We don't know the exact usage of every component, but we can use the CPU as a reference. It's basically a Zen 2 Ryzen after all. The CPU would most likely be around 65W. Maybe it can reach 100W during absolute peak load. So we can assume the GPU would use somewhere between 150W and 200W. And that is for a GPU that has 52 CUs and runs at 1.8GHz. The 5700 XT, which has 40 CUs and runs at 1.9GHz, is rated for 225W. I think this gives a good picture of the advances in power consumption.
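A rough sketch of that estimate, using only the numbers from the post. The per-CU comparison is not like-for-like: the 225W rating presumably covers the whole card, memory included, while the 150-200W console figure is itself a guess. `watts_per_cu` is an illustrative helper.

```python
def watts_per_cu(power_w: float, compute_units: int) -> float:
    """Average power per compute unit for a given power figure."""
    return power_w / compute_units


system_budget_w = 300        # "under 300W" for the whole console
cpu_range_w = (65, 100)      # likely and peak CPU estimates from the post
# What remains for GPU, GDDR6, SSD and I/O once the CPU takes its share.
left_over = tuple(system_budget_w - cpu for cpu in cpu_range_w)

rx5700xt = watts_per_cu(225, 40)   # 5700 XT: 40 CUs, rated 225 W
xsx_low = watts_per_cu(150, 52)    # XSX GPU: 52 CUs, low estimate
xsx_high = watts_per_cu(200, 52)   # XSX GPU: 52 CUs, high estimate

print(f"Left after CPU: {left_over[1]}-{left_over[0]} W")
print(f"5700 XT: {rx5700xt:.2f} W/CU")
print(f"XSX estimate: {xsx_low:.2f}-{xsx_high:.2f} W/CU")
```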

llien

Member

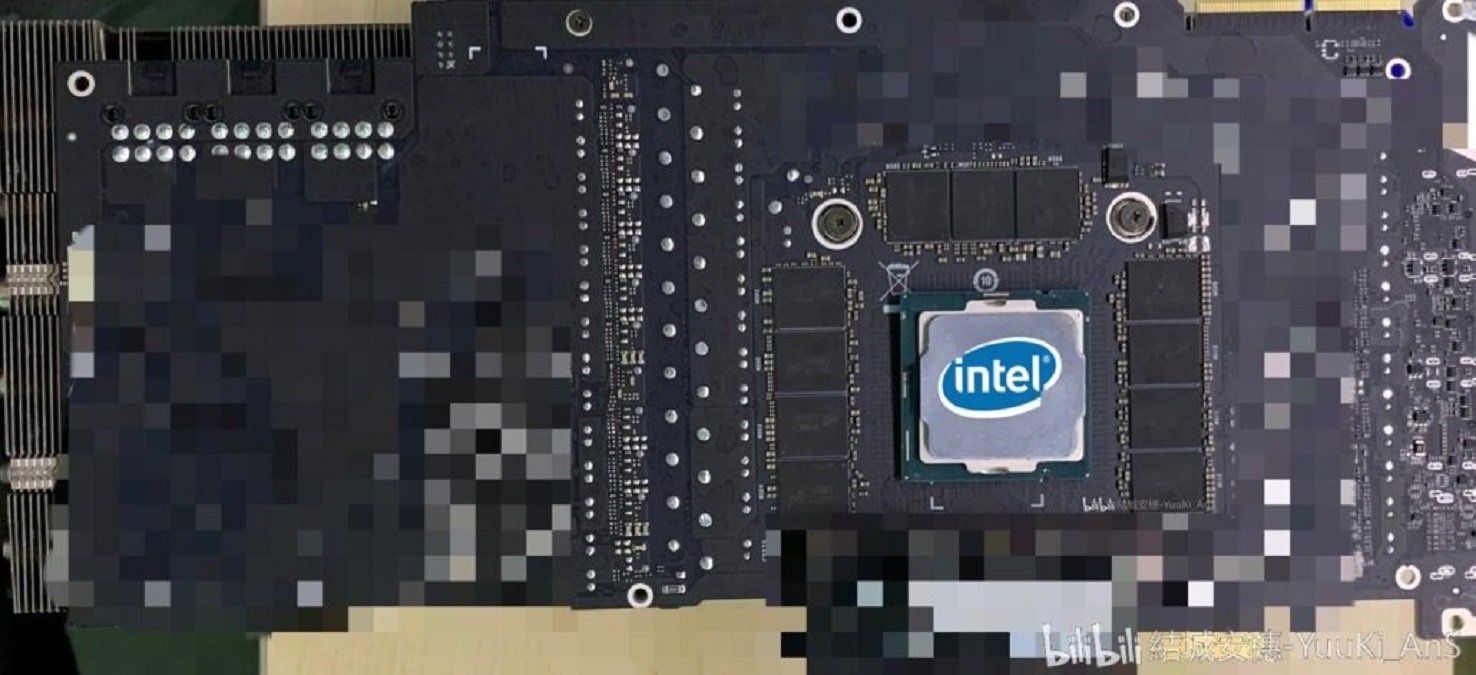

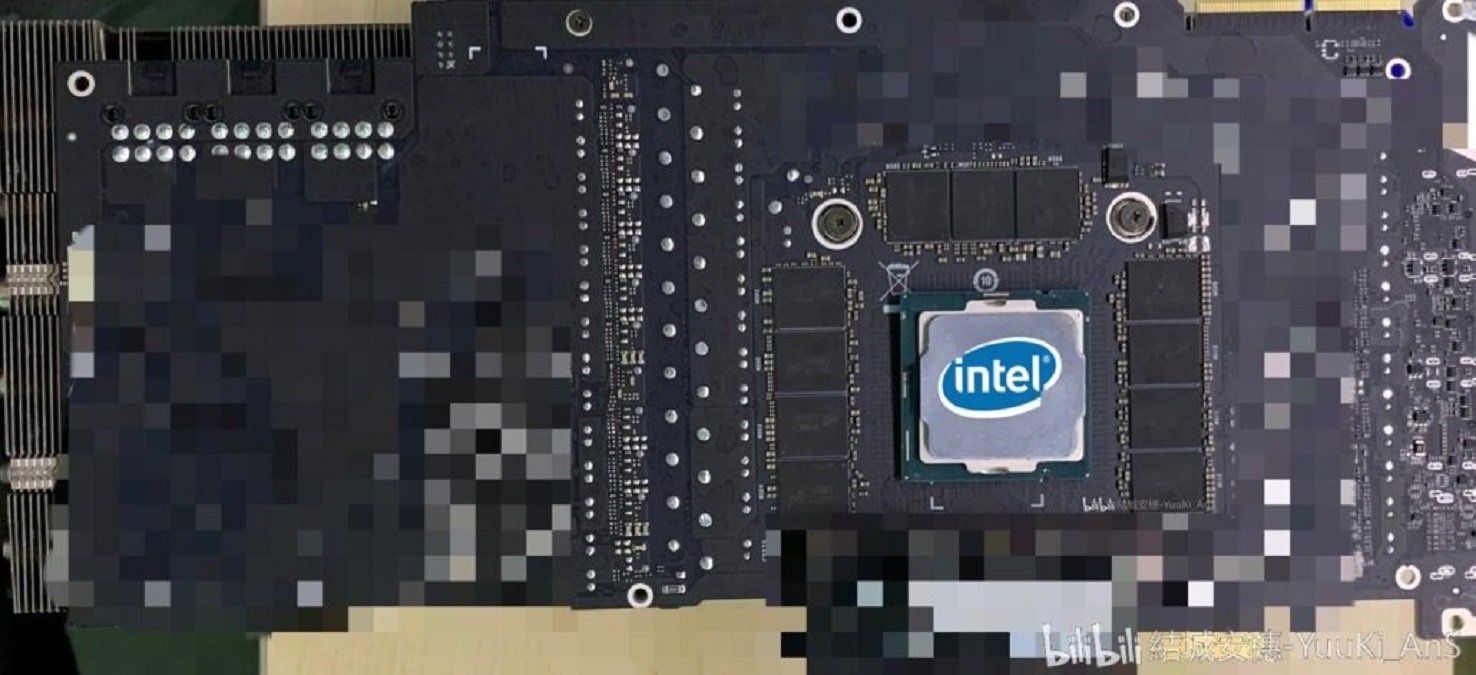

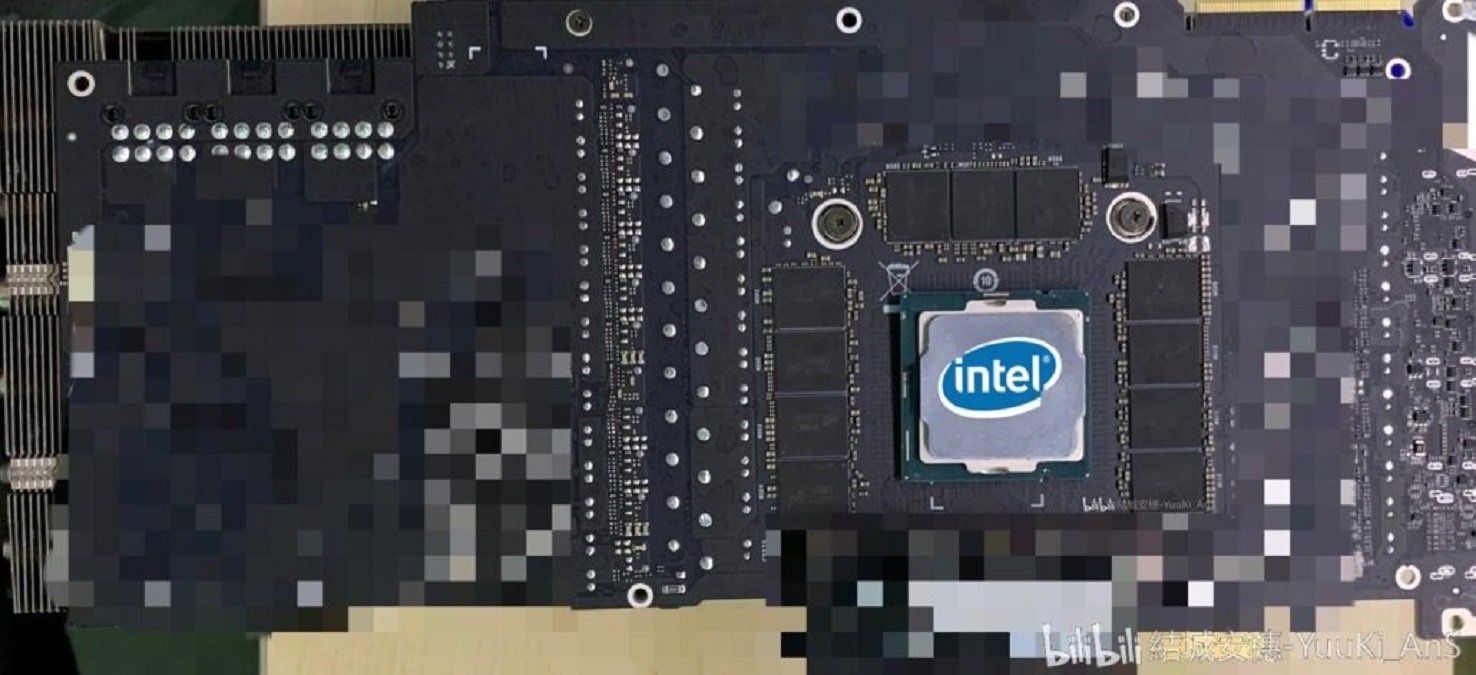

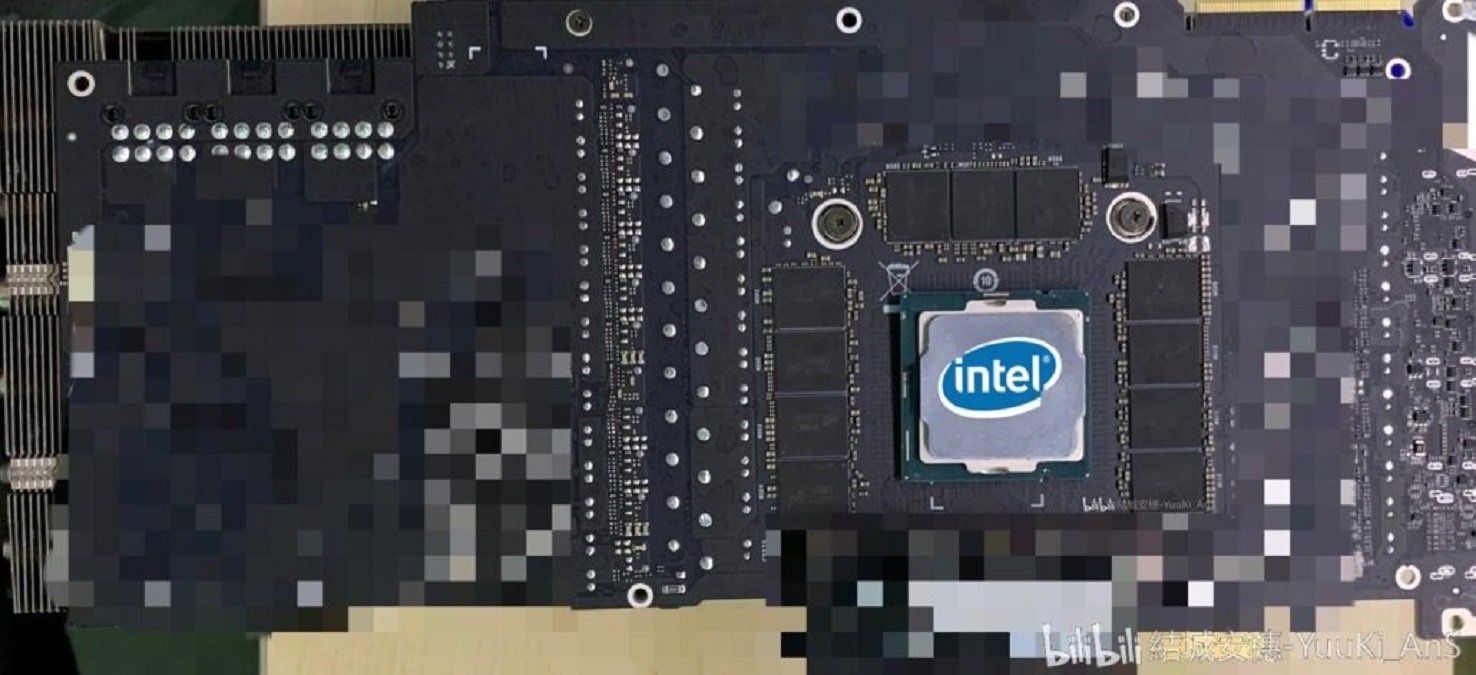

NV's card is said to be using it, AIBs stick with multiple 8-pin:

www.tweaktown.com

GeForce RTX 3090: 12-pin PCIe on Founders Edition, not on custom cards

We're hearing that NVIDIA will use a new 12-pin PCIe power connector on the GeForce RTX 3090 Founders Edition, not custom cards.

GreatnessRD

Member

NV's card is said to be using it, AIBs stick with multiple 8-pin:

GeForce RTX 3090: 12-pin PCIe on Founders Edition, not on custom cards

We're hearing that NVIDIA will use a new 12-pin PCIe power connector on the GeForce RTX 3090 Founders Edition, not custom cards.

www.tweaktown.com

Sounds like a big clusterfuck. September 1st will be an interesting day for sure.

michaelius

Banned

That's disappointing. I was interested in the FE this time because of the rumors about dual-sided cooling, which looked like a revolutionary design, but I'm not replacing my two-year-old, perfectly fine PSU just for that.

Ulysses 31

Gold Member

My Corsair AX1600Wi should still suffice.

Tomeru

Member

I know this C R A Z Y dude that runs a RTX 2070 super on a 550W PSU

the box said 600 but this dude does not give a fuck.

What could possibly go wrong

Chiggs

Gold Member

Didn't know if this would warrant its own thread, but I guess they were closer than I thought.

Another reason to hate Intel?

LazyParrot

Member

I know this C R A Z Y dude that runs a RTX 2070 super on a 550W PSU

the box said 600 but this dude does not give a fuck.

Those recommendations tend to be way too strict. Even a high-end CPU paired with a 2080Ti is unlikely to crack the 450W mark, even running at full load for extended periods of time (which pretty much never happens while gaming).

Spukc

always chasing the next thrill

Those recommendations tend to be way too strict. Even a high-end CPU paired with a 2080Ti is unlikely to crack the 450W mark, even running at full load for extended periods of time (which pretty much never happens while gaming).

WOAAAH WE HAVE A MAD LAD HERE !!

CuNi

Member

NV's card is said to be using it, AIBs stick with multiple 8-pin:

GeForce RTX 3090: 12-pin PCIe on Founders Edition, not on custom cards

We're hearing that NVIDIA will use a new 12-pin PCIe power connector on the GeForce RTX 3090 Founders Edition, not custom cards.

www.tweaktown.com

TBH that's not going to happen.

The idea of the 12-pin connectors for GPUs, motherboards etc. is to reduce the efficiency loss when converting AC to all those DC voltages like 12V, 5V, 3.3V etc.

So the motherboard designs need to be changed as well to reflect that and I think that'll happen mid 2020 at the earliest for consumers.

Doing a staggered release would be very stupid as you'd force people to buy a new GPU to have that 12 Pin connector for the GPU and then half a year later force people YET AGAIN to buy a new PSU for the 12 Pin motherboard connector.

That would kill adoption rate before it even had a chance to begin.

Memorabilia

Member

It would seem this is bound to have a negative effect on Ampere sales. As much as I generally have preferred nVidia GPUs the past few years (despite vastly preferring AMD as a company), I'm not too thrilled at the notion of needing to replace my PSUs. I can't imagine the adoption rate on this is going to go super smoothly or quickly. And if this requires a new motherboard too, forget it.

PhoenixTank

Member

TBH that's not going to happen.

The idea of the 12-pin connectors for GPUs, motherboards etc. is to reduce the efficiency loss when converting AC to all those DC voltages like 12V, 5V, 3.3V etc.

So the motherboard designs need to be changed as well to reflect that and I think that'll happen mid 2020 at the earliest for consumers.

Doing a staggered release would be very stupid as you'd force people to buy a new GPU to have that 12 Pin connector for the GPU and then half a year later force people YET AGAIN to buy a new PSU for the 12 Pin motherboard connector.

That would kill adoption rate before it even had a chance to begin.

I've reread this a few times and I'm not entirely sure what you're getting at is based on firm ground.

It sounds like you're conflating 12Pin PCIE with ATX12VO. Intel's ATX12VO standard has a 10 Pin Motherboard connector, and the power supplies only provide 12V (as you mentioned).

Nvidia don't seem to give a damn about an official standard here but PCIE GPU cables are already 12V/GND only. Not a sparky but there doesn't seem to be anything incompatible there that couldn't be solved with a new modular cable or an adapter from 3 x 8 Pin PCIE (probably in the box!).

To me their goal seems to be to increase available power delivery without going OTT with cables and wasting board space, but that doesn't have anything to do with conversion efficiency. The only reason to buy a new PSU for these rumoured cards would be if your PSU isn't actually capable enough to power them anyway.

ATX12VO is going to take a while to become THE standard, and yes, you'll need a new PSU for that, but you'll be building a new system anyway. Same thing as when we went through previous ATX revisions. I don't see a double whammy of pain here.

Sorry if I've misread and got the wrong end of the stick there, just trying to make sense of it.
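For a sense of what existing cables can already deliver, here is a small sketch using the nominal PCIe limits (75W from the slot, 75W per 6-pin, 150W per 8-pin). The rating of the rumoured 12-pin connector is not assumed here, since it isn't confirmed in this thread, and `board_power_budget` is a made-up helper.

```python
# Nominal PCIe power limits in watts.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors: list[str]) -> int:
    """Slot power plus the nominal limit of each auxiliary connector."""
    return SLOT_W + sum(CONNECTOR_W[name] for name in connectors)


for setup in (["8-pin"], ["8-pin", "8-pin"], ["8-pin", "8-pin", "8-pin"]):
    print(f"{len(setup)} x 8-pin + slot: {board_power_budget(setup)} W")
```

On those nominal figures, an adapter fed by three 8-pin cables has plenty of headroom, which is why the connector change by itself doesn't force a PSU swap if the PSU can supply the wattage.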

SecretAgentHam

Member

Guess I'll stick with my 1080ti and hope the next gen is a little more power efficient. Here's hoping AMD figure out how to compete in the GPU market and get their drivers ironed out.

n0razi

Member

Yeah I'm not upgrading to a 300+ watt video card any time soon regardless of if I can afford it or not.

Currently running an RTX 2070 (175w) and it drives my 3440x1440 ultrawide just fine; 600w is just insane for a single card... I can't imagine the heat something like that would put out and its already hot enough in my office.

the use of an adaptor is possible or people will have to buy new PSUs?

My guess is that only the higher-end cards (3080/3080 Ti+) will be utilizing this connector. I can't imagine a mid-range card (3060/3070) needing anything more than a single 6/8-pin. The kind of people who drop $1000 on a new 3080/3080 Ti can also easily afford a new high-end PSU.

CuNi

Member

I've reread this a few times and I'm not entirely sure what you're getting at is based on firm ground.

It sounds like you're conflating 12Pin PCIE with ATX12VO. Intel's ATX12VO standard has a 10 Pin Motherboard connector, and the power supplies only provide 12V (as you mentioned).

Nvidia don't seem to give a damn about an official standard here but PCIE GPU cables are already 12V/GND only. Not a sparky but there doesn't seem to be anything incompatible there that couldn't be solved with a new modular cable or an adapter from 3 x 8 Pin PCIE (probably in the box!).

To me their goal seems to be to increase available power delivery without going OTT with cables and wasting board space, but that doesn't have anything to do with conversion efficiency. The only reason to buy a new PSU for these rumoured cards would be if your PSU isn't actually capable enough to power them anyway.

ATX12VO is going to take a while to become THE standard, and yes, you'll need a new PSU for that, but you'll be building a new system anyway. Same thing as when we went through previous ATX revisions. I don't see a double whammy of pain here.

Sorry if I've misread and got the wrong end of the stick there, just trying to make sense of it.

All good, if anything I probably have worded it shitty as I typed that right after waking up and still in bed lol.

What I meant is that I don't think all NV cards will have that new 12-pin connector. I highly doubt it would look good in terms of publicity if you were forced to either A) buy a new PSU that has said 12-pin connector or B) use janky adapters. Also, if you don't buy a whole new rig but just upgrade your GPU, you have exactly the issue described above: use the adapter or buy a new PSU. And if you decide to upgrade the rest of the rig 1-2 years later, you need to get a new PSU yet again (if you bought a new one with the GPU, that is). I just don't think they'll do such a staggered release; I think they'll go all out and release 12-pin PCIe and 10-pin ATX at the same time, with fully compatible PSUs.

PhoenixTank

Member

All good, if anything I probably have worded it shitty as I typed that right after waking up and still in bed lol.

What I meant is that I don't think all NV cards will have that new 12-pin connector. I highly doubt it would look good in terms of publicity if you were forced to either A) buy a new PSU that has said 12-pin connector or B) use janky adapters. Also, if you don't buy a whole new rig but just upgrade your GPU, you have exactly the issue described above: use the adapter or buy a new PSU. And if you decide to upgrade the rest of the rig 1-2 years later, you need to get a new PSU yet again (if you bought a new one with the GPU, that is). I just don't think they'll do such a staggered release; I think they'll go all out and release 12-pin PCIe and 10-pin ATX at the same time, with fully compatible PSUs.

Ahh fair enough.

Nah you're right that not all the AIBs are on board with this 12Pin plan if the rumours are to be believed.

Add option C of a new cable for modular supplies and you're about right. I don't expect B to be janky, though. Time will tell.

I see what you're getting at with the knock on effect of buying a new PSU and then system, but the sad fact is that Nvidia aren't Intel nor are they currently working together on this. The PSU & motherboard manufacturers of course play a role but I'd be pleasantly surprised to see Nvidia reach out to Intel and vice versa on it and come up with a plan to launch/push hard for this sort of thing at the same time.

I guess I'm pessimistic on the planning not being a shit show, and optimistic on the hardware side of things while you're the opposite of that

CuNi

Member

Ahh fair enough.

Nah you're right that not all the AIBs are on board with this 12Pin plan if the rumours are to be believed.

Add option C of a new cable for modular supplies and you're about right. I don't expect B to be janky, though. Time will tell.

I see what you're getting at with the knock on effect of buying a new PSU and then system, but the sad fact is that Nvidia aren't Intel nor are they currently working together on this. The PSU & motherboard manufacturers of course play a role but I'd be pleasantly surprised to see Nvidia reach out to Intel and vice versa on it and come up with a plan to launch/push hard for this sort of thing at the same time.

I guess I'm pessimistic on the planning not being a shit show, and optimistic on the hardware side of things while you're the opposite of that

I'm quite pessimistic too, but I hope they see that with a smooth release they get higher adoption rates, which translate to higher profits and sales hehe.

True, I hadn't considered modular PSUs getting a compatible cable, good point you got there!

With "janky" I'm talking about things like those old 2x MOLEX to 6-pin adapters that were very cheaply made and also looked quite bad inside a build.

I mean, if you think about it, those 12-pins are probably going to be on the very high-end cards only, and those buyers are known enthusiasts. I doubt any of them would want to throw an adapter into a clean build, especially a cheap-looking one.

But yeah, option C, now that you mention it, seems most likely if they do end up deciding to go down that road!