playsaves3

Member

I was just answering the guy's question on what specs Sony would use in this instance.

Would be more cost-efficient for Sony to just release a PC version of a game.

We really should want a $699 Pro for them to go all in.

Uh, looks like I slightly goofed on the FP32 compute; just divide it by two.

So FP32 for the Pro looks like it's gonna be ~33.5 TF. But I'm wondering if that's dual-issue or single-issue shader throughput. 33.5 TF of single-issue shader throughput in a mid-gen refresh sounds monstrous. It also sounds like at least $700, if not more.

I'm thinking it's 33.5 TF dual-issue, 16.75 TF single-issue. Most games would target the 16.75 but those using dual-issue instructions could get up to 33.5 TF compute in areas that need it. Pretty interesting, but I'm even more interested in the PSSR stuff.

Not exactly equivalent

It’s seeming as though RDNA is getting reconfigured here

Yeah it's 16.75 TF in "real" performance (single-issue shader mode). It's a 56 CU design; to hit 33.5 TF the GPU'd have to be clocked at more than 4.5 GHz. I don't think any GPUs are hitting that at this size for a few years from now, even from AMD (whose GPUs are usually higher-clocked than Nvidia ones).
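
The clock claim above can be checked with a quick back-of-the-envelope script. This is only a sketch: it assumes 64 FP32 lanes per CU and counts a fused multiply-add as 2 FLOPs per lane per clock, neither of which is stated in the thread, and `required_clock_ghz` is a made-up helper name.

```python
# Assumptions, not stated in the thread: each CU has 64 FP32 lanes and a
# fused multiply-add counts as 2 FLOPs per lane per clock.
LANES_PER_CU = 64
FLOPS_PER_FMA = 2


def required_clock_ghz(target_tflops: float, cus: int, dual_issue: bool = False) -> float:
    """Return the clock in GHz needed to reach a peak FP32 figure."""
    issue_width = 2 if dual_issue else 1
    flops_per_clock = cus * LANES_PER_CU * FLOPS_PER_FMA * issue_width
    return target_tflops * 1e12 / flops_per_clock / 1e9


if __name__ == "__main__":
    single = required_clock_ghz(33.5, cus=56)
    dual = required_clock_ghz(33.5, cus=56, dual_issue=True)
    print(f"33.5 TF single-issue on 56 CUs: {single:.2f} GHz")
    print(f"33.5 TF dual-issue on 56 CUs:   {dual:.2f} GHz")
```

Under those assumptions the single-issue case lands a bit above 4.5 GHz, while the dual-issue case needs roughly half that clock, which is why 33.5 TF only looks plausible as a dual-issue figure.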

All RT modes from the base model will run completely maxed out now. Maybe they can go in and add a new RT mode that's more like high-end PC.

What are the implications of 2-4x RT?

RT is hardly used at all in PS5 games. Is this going to make it far more ubiquitous without tanking performance?

Will it only be used sparingly like for shadows or will we get reflections?

I still think these raster improvement estimates seem super low and conservative. I'm still expecting a 60-80% raw raster increase. Everything else looks legit though.

Frankly I struggle to see how the 7900 XTX can overcome PS5 Pro IMAGE QUALITY OUTPUT (not raw raster) in RT workloads if those numbers and PSSR are legit.

Uh, isn't that what nearly every leak has been saying for almost a year now? It would be weird if it was much lower than that.

Much more realistic. Otherwise, this "leak" is saying AMD was able to put roughly the equivalent of a 7800 XT on to an APU. That is a massive leap in APU tech, isn't it?

Nah....not buying it. Don't get me wrong. I would absolutely LOVE to be wrong. Just not holding my breath.

The guy got a little confused; go easy on him and be nicer.

We’re talking about a PS5 Pro in 2024 and strictly in terms of raw horsepower at a TDP similar to the regular PS5. Why are you talking about 2027, upscalers and a 250W TDP?

This would make it faster than the 7700 XT, which is right around 45% faster than the 6700 we compare the regular PS5 to. That’s without the bandwidth increase too.

Are we 100% sure about this? I don't think a 60% boost in TF via more CUs and a fractional increase in bandwidth would be enough to yield a 45% raw performance uptick. Consider the PS4 vs PS4 Pro, which doubled CUs AND bumped bandwidth 25% to reach checkerboard 1800p-4K from the base PS4's 1080p resolution. I would assume even more diminishing returns as the CU/bandwidth ratio gets larger.

33 TF comparable to RDNA2 is plausible imo if the 45% raw raster uplift is to be believed.

this site is bi-polar.

Either Moore's Law Is Dead is full of shit, or they are 100% accurate.

Funny how some pick and choose when to declare them FOS just depending on the basic subject matter.

I think it’s better to lean more conservative with our estimates. Whatever the case, 34 TFLOPs dual-issue compute should put it above a 45% performance increase in rasterization even though it’s actually only around 60% in single-issue compute. That’s also without considering the lower bus width and bandwidth of the 7700 XT.

The numbers get tricky depending on what resolution you're using to compare. Let's say we put PS5 at 2080 Ti for comparison's sake. Yes, it's on the more optimistic end for PS5 but not unheard of in select games. I do this to normalize since TechPowerUp has a 4K baseline for 2080 Ti cards and better. 45% pure raster would place it slightly above 6900 XT level, which is a 23 TF RDNA2 card.

And protip, don’t believe a word of what Moore’s Law is Dead is claiming. The guy just carpet bombs an entire area with info he finds over the web and claims he has "sources". He’s just a little better than RedGamingTech, which isn’t saying much.

MLID is shit, 100% throwing shit at his wall

veto ban his content!

For single-issue the actual compute increase is to 16.75 TF, so it's like a 63% increase. It could be the CPU not getting a big revision or super-high clock to leverage it wholly though.
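
For anyone who wants to redo the percentage, here's a minimal sketch. It assumes the base PS5 sits at Sony's published 10.28 TF FP32 figure (not quoted in this thread), and `uplift_percent` is just an illustrative helper name.

```python
# Assumption: 10.28 TF is Sony's published FP32 figure for the base PS5.
# The 16.75 TF and 33.5 TF Pro figures are the leaked numbers discussed here.
PS5_TFLOPS = 10.28


def uplift_percent(new_tflops: float, base_tflops: float = PS5_TFLOPS) -> float:
    """Percentage increase of new_tflops over base_tflops."""
    return (new_tflops / base_tflops - 1.0) * 100.0


if __name__ == "__main__":
    print(f"single-issue uplift: {uplift_percent(16.75):.0f}%")
    print(f"dual-issue uplift:   {uplift_percent(33.5):.0f}%")
```

The single-issue number comes out at roughly 63%, which matches the figure in the post; the dual-issue number is a paper figure that games only approach if they actually use dual-issue instructions.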

Same. PSSR and any other customizations for upscaling, AI, caches etc. are more interesting to me than whatever the TF is going to be.

He wasn't specifying single-issue or dual-issue mode, though. Realistically, you'd need a 112 CU GPU at PS5's GPU clock just to get 32 TF in single-issue mode.

So the 33.5 TF is definitely dual-issue mode, meaning games would have to target dual-issue shader throughput to get the benefits on that front.
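
The 112 CU claim can be sanity-checked the same way. A rough sketch, assuming 64 FP32 lanes per CU, 2 FLOPs per FMA, and the base PS5's published 2.23 GHz peak GPU clock (none of these are spelled out in the thread; `peak_tflops` is a hypothetical helper):

```python
# Assumptions, not stated in the thread: 64 FP32 lanes per CU, an FMA counts
# as 2 FLOPs, and the base PS5 GPU peaks at 2.23 GHz.
LANES_PER_CU = 64
FLOPS_PER_FMA = 2
PS5_CLOCK_GHZ = 2.23


def peak_tflops(cus: int, clock_ghz: float = PS5_CLOCK_GHZ, dual_issue: bool = False) -> float:
    """Theoretical peak FP32 TFLOPs for a given CU count and clock."""
    issue_width = 2 if dual_issue else 1
    gflops = cus * LANES_PER_CU * FLOPS_PER_FMA * issue_width * clock_ghz
    return gflops / 1000.0


if __name__ == "__main__":
    print(f"112 CUs, single-issue: {peak_tflops(112):.2f} TF")
    print(f" 56 CUs, dual-issue:   {peak_tflops(56, dual_issue=True):.2f} TF")
    print(f" 36 CUs, single-issue: {peak_tflops(36):.2f} TF")
```

At the same clock, 112 CUs in single-issue mode and 56 CUs in dual-issue mode give the same paper number of about 32 TF, and the 36 CU line reproduces the base PS5's ~10.28 TF as a check on the assumptions.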

Good news is the custom stuff like PSSR seems to be doing a lot of the heavy lifting, and is very easy for devs to tap into. Can definitely see Sony doing $349 PS5 digital/$449 PS5 disc/$549 PS5 Pro digital this holiday.

Pretty sure the video claimed it was FP16 67 TF. FP32 would be half that.

A bit of a different take. It's not FP16 vs FP32. It's FP32 but single-issue vs dual-issue. The numbers AMD reports for FP32 compute on RDNA3 cards are for dual-issue FP32. They probably got tired of all those huge NVIDIA TFLOPs numbers that made them look weak and decided to play the same game.

Just like with double and single precision, just halve the number to get the "real" world performance.

It's explained in more detail here if you're interested.

https://chipsandcheese.com/2023/01/07/microbenchmarking-amds-rdna-3-graphics-architecture/

Ah......ok. I misread thicc_girls_are_teh_best then. So marketing bullshit has finally made teraflops completely meaningless, it would seem.

On the other hand, VOPD (vector operation, dual) does leave potential for improvement. AMD can optimize games by replacing known shaders with hand-optimized assembly instead of relying on compiler code generation. Humans will be much better at seeing dual issue opportunities than a compiler can ever hope to. Wave64 mode is another opportunity. On RDNA 2, AMD seems to compile a lot of pixel shaders down to wave64 mode, where dual issue can happen without any scheduling or register allocation smarts from the compiler.

It’ll be interesting to see how RDNA 3 performs once AMD has more time to optimize for the architecture, but they’re definitely justified in not advertising VOPD dual issue capability as extra shaders. Typically, GPU manufacturers use shader count to describe how many FP32 operations their GPUs can complete per cycle. In theory, VOPD would double FP32 throughput per WGP with very little hardware overhead besides the extra execution units. But it does so by pushing heavy scheduling responsibility to the compiler. AMD is probably aware that compiler technology is not up to the task, and will not get there anytime soon.

Wasn’t PS5 revealed just before GDC?

I wonder if Sony will announce the pro @ GDC

Well, yeah, but there are some interesting implications nonetheless.

Will we see real gaming applications of that? Probably not. At least not anytime soon, because Sony won't start to program games for the PS5 and PS5 Pro differently since RDNA2's SIMDs don't have dual-issue capabilities.

This is a bit reminiscent of Intel's scores getting invalidated when enabling Advanced Performance Optimizations in 3DMark. Right around or before the release of their Arc GPUs, Intel had Advanced Performance Optimizations enabled in 3DMark. This resulted in much higher benchmark scores due to some of the shaders being replaced by their own and boosting performance substantially.

Yeah, but the article downplays its impact a bit due to the fact that it relies so much on compilers, which it says are not up to it. Shades of "waiting for tools". Also the article notes that this comes into play for optimization and avoiding bottlenecks. That's not something that can be calculated in a formula like the one used to determine teraflops.

Interesting stuff either way.

Weren’t most/all PS5 leaks wrong?

Not really that weird for most leaks to be completely wrong, no. Not saying it is impossible. Just unlikely.

The APU was fully leaked, though.

Some got pretty close, as I recall, but don't think anyone got it dead on.

I'm curious as to what Sony would do about the RAM situation. This upscaler apparently uses 250 MB of RAM, and enhanced RT effects won't exactly be light on RAM utilization either.

I'm not doubting the RT performance, I'm wondering if it might have more RAM than the base PS5.

Well it looks like they found a way. 2-4x RT performance uplift is excellent.

So it's going to be a little slower than a 3080 in raster and will be more bandwidth-limited... I won't lie, that is quite disappointing.

Because even in real-world performance this is looking like it's going to end up inferior to a 3080/4070, which I thought was the minimum bar for a worthy mid-gen upgrade. It's bandwidth starved, is quite limited in terms of a compute jump compared even to something like the PS4 Pro, and it would be optimistic to say it's going to equal Nvidia in reconstruction and RT in AMD's first real try with dedicated blocks.

In the context of real world performance, why?

Eh, not buying the 45% raw performance increase. I think it'll be more like 70%.

Just went up. Seems Sony docs have 67 TF FP16. IIRC, you divide by 4 to get the FP32 compute, right?

If so that'd be 16.75 TF FP32 compute. Not bad, not "beastly". I'm expecting it's the customizations for RT and other stuff doing the heavy lifting for improvements.
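
The divide-by-4 step can be broken into two halvings. This is a sketch under two assumptions that the thread only implies: packed FP16 runs at twice the FP32 rate, and the headline FP32 number counts dual-issue, so it has to be halved again for single-issue. `fp32_from_fp16` is a hypothetical helper.

```python
from typing import Tuple


def fp32_from_fp16(fp16_tflops: float) -> Tuple[float, float]:
    """Return (dual-issue FP32, single-issue FP32) TFLOPs from an FP16 figure."""
    # Assumption: packed FP16 throughput is 2x the FP32 throughput.
    dual_issue_fp32 = fp16_tflops / 2
    # Assumption: the headline FP32 figure counts dual-issue, so halve again.
    single_issue_fp32 = dual_issue_fp32 / 2
    return dual_issue_fp32, single_issue_fp32


if __name__ == "__main__":
    dual, single = fp32_from_fp16(67.0)
    print(f"FP32 dual-issue:   {dual} TF")
    print(f"FP32 single-issue: {single} TF")
```

With the leaked 67 TF FP16 figure this gives 33.5 TF dual-issue and 16.75 TF single-issue, so "divide by 4" and "divide by 2" are both right depending on which FP32 number you mean.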

Maybe a bit more OS dedicated RAM that frees some more memory for developers. It depends on how much they will try to enable 8K or not. Part of the extra 1 GB of RAM freed for developers was to allow for 4K buffers and UI.

Also, 1080p takes less RAM than full 4K buffers, so the extra memory requirements will not hurt as badly and I do think they will offer a bit of extra RAM to devs to compensate.

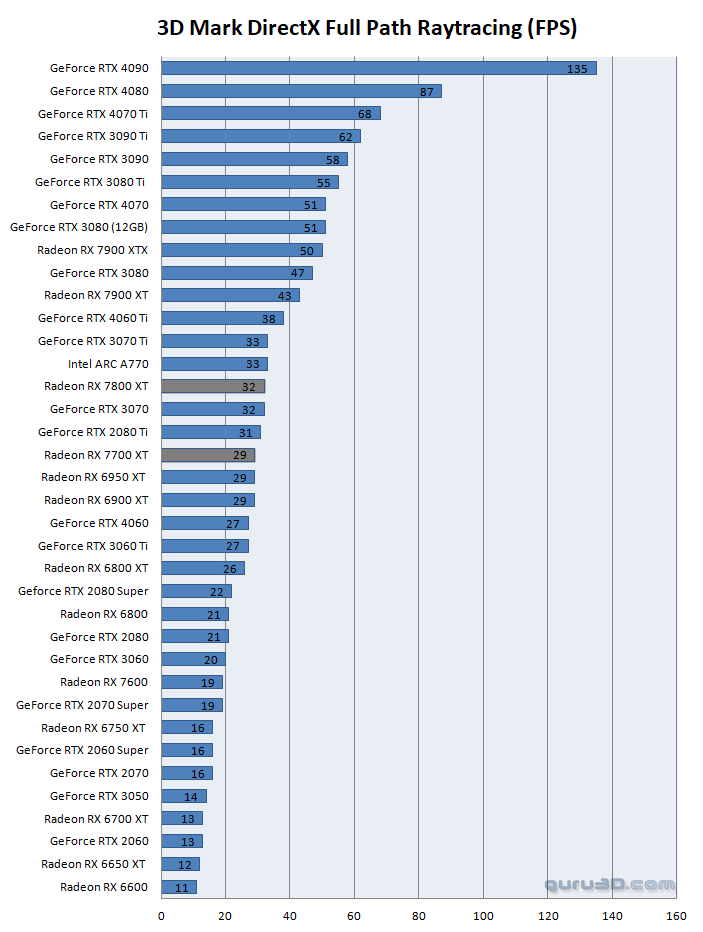

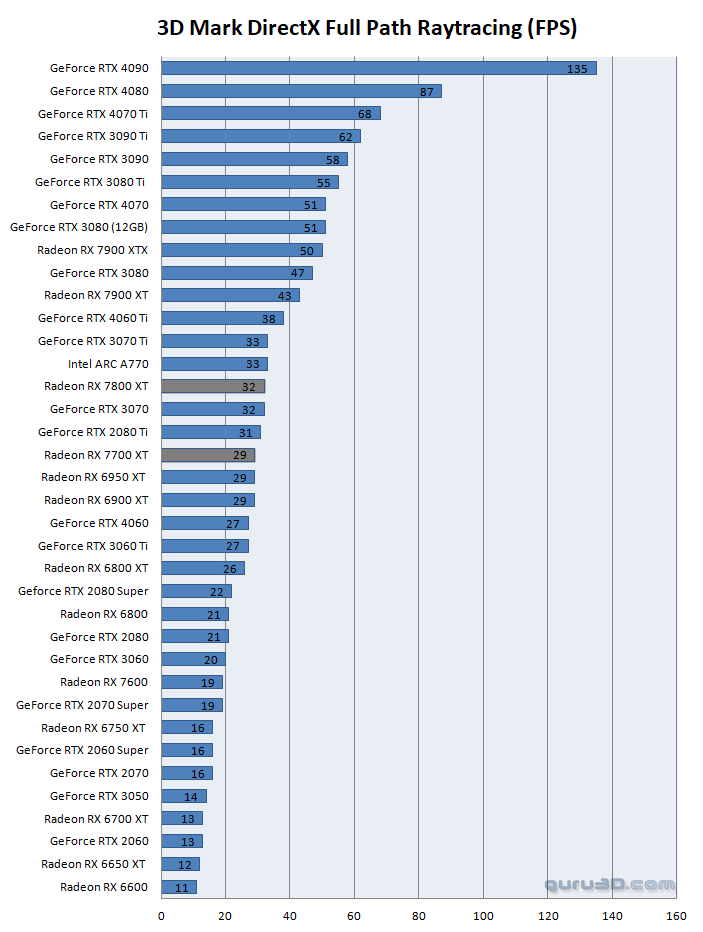

Even without LFC this is well within the VRR window. It would be lovely if consoles actually offered locked 50 Hz framerates most displays would support… such an unused option…

I was looking around to find what the ray tracing performance of the Pro might be like using those 2-4x RT performance increase figures.

Using the 6700 XT as a baseline, we'd end up with RT performance anywhere between a 6800 XT (2x) to a 3080 12GB (4x). This is a pure ray tracing benchmark (path tracing). Obviously, we probably won't get a fully path-traced game on consoles this generation but we can perhaps extrapolate a bit using this little graph. This would make the pure RT performance of the Pro on par with the 3080 so assuming a similar workload, the Pro should lose about as much performance as the 3080 12GB when turning RT features on in a best-case scenario. I do think the ray tracing on PlayStation is more efficient than on AMD GPUs on PC though. This might seem disappointing but do note that in a best-case scenario in a path-traced benchmark, the Pro would be a bit faster than a 7900 XTX. That's quite good I'd say.

A bit shocked at how bad AMD is at ray tracing I gotta say. Also, the 4090 is a fucking monster.

Don't think so. 45% would only put it on the level of a 6800 and in some cases, barely above the 2080 Ti. Look at A Plague Tale for instance, which seems to favor compute power.

I think you'll be disappointed then.

Having the SSD act as virtual memory is great, but it doesn't fundamentally address my point. A higher resolution, more advanced upscalers, and additional RT effects will all lead to additional memory utilization compared to the game running on a regular PS5. I'm wondering how they are addressing it, be it more RAM or the OS running on slower DDR4 like the PS4 Pro did. It's speculation on my part, nothing more.

I really tried, but you left me no choice.

The whole thing is odd and the numbers do not fit. 300 TOPS 8-bit is a lot and suggests a 2450 MHz GPU clock, which doesn't fit with the rest. Why would they downclock the GPU when using 36 CUs for PS5 BC?