Bus width is linked to VRAM allocation. It is not like we have a 1060 12GB to tell us whether a slowdown is due to bus width or due to memory amount. It is probably more the former in the majority of cases, because the entire 10 series was made up of very well balanced parts with an appropriate amount of VRAM for the performance on offer.
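To put rough numbers on that link, here is a minimal sketch. It assumes one GDDR chip per 32-bit channel (no clamshell configurations) and chip densities of 1GB and 2GB; both are simplifications, and `vram_options_gb` is just a name made up for illustration.

```python
# Rough sketch: how bus width limits the VRAM sizes a card can ship with.
# Assumption: one GDDR chip per 32-bit channel, no clamshell mode.

CHANNEL_BITS = 32  # interface width of a single GDDR chip


def vram_options_gb(bus_width_bits, chip_sizes_gb=(1, 2)):
    """Return the capacities (in GB) a bus width allows for the given chip sizes."""
    if bus_width_bits % CHANNEL_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHANNEL_BITS
    return [chips * size for size in chip_sizes_gb]


print(vram_options_gb(192))  # [6, 12]
print(vram_options_gb(256))  # [8, 16]
```

Under those assumptions a 192-bit card lands on 6GB or 12GB and a 256-bit card on 8GB or 16GB, which is why capacity and bus width are so hard to separate when comparing real cards.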

You can start to see the limits of 6GB here, though.

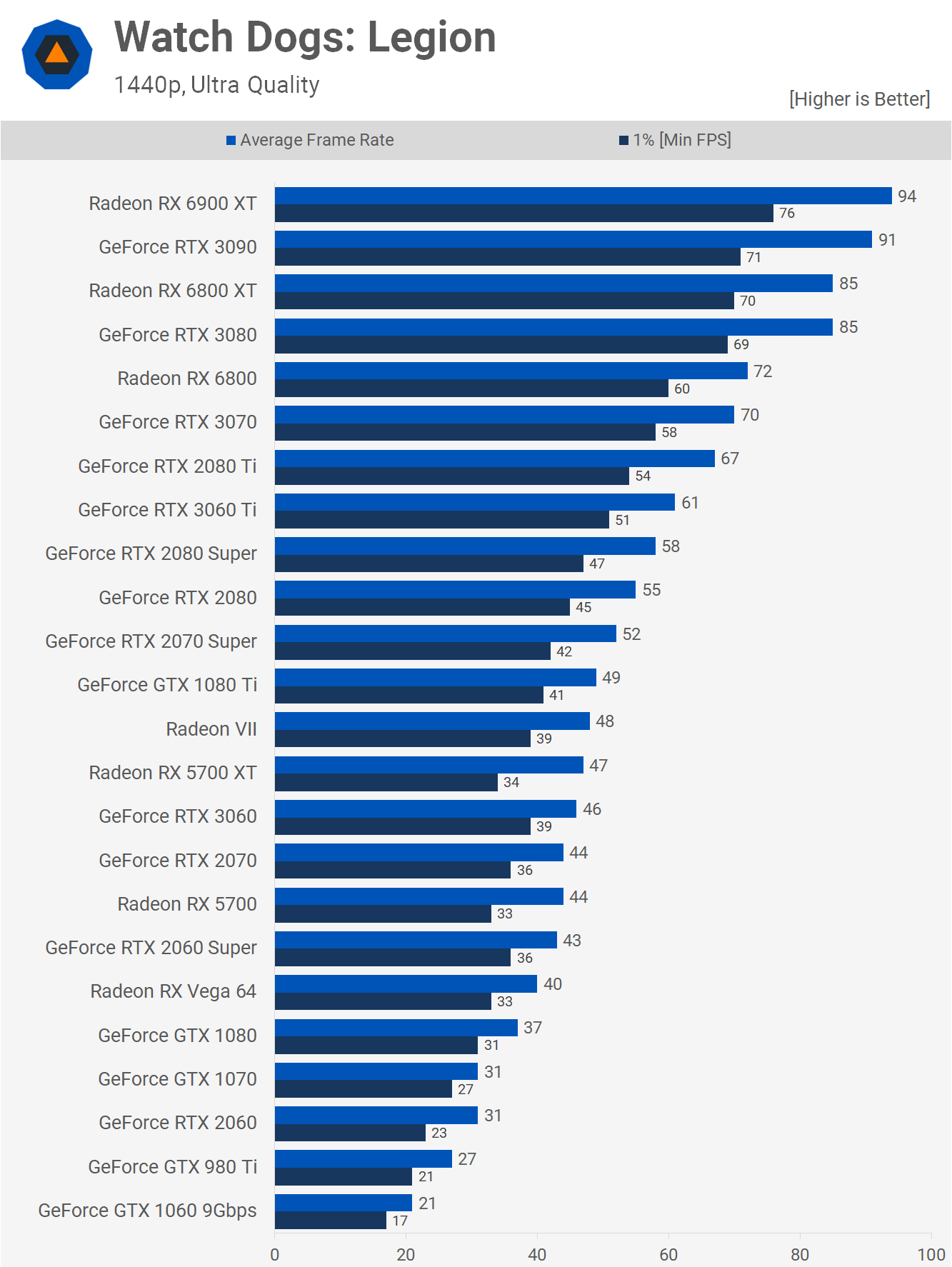

Even though the 2060 has more bandwidth than the 1070 and 1080, and better colour compression as well, its 1% lows are falling quite a long way behind those of the 1080.

Here the 2060 is falling behind the normally slower 8GB 10 series parts.
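For reference, the bandwidth comparison can be sketched with the usual formula, bus width times per-pin data rate divided by 8. The bus widths and data rates below are spec-sheet figures quoted from memory, so treat them as assumptions and double-check them; `bandwidth_gb_s` is a made-up helper name.

```python
def bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s: bus width (bits) * per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, data rate in Gbps); assumed spec-sheet values
cards = {
    "RTX 2060": (192, 14),
    "GTX 1070": (256, 8),
    "GTX 1080": (256, 10),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(width, rate):.0f} GB/s")
```

With those inputs the 2060 comes out ahead of both 8GB cards on raw bandwidth despite the narrower bus, which is what makes its 1% lows stand out.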

Same thing in Anno.

And as we saw with Watch Dogs Legion, the averages don't tell the whole story at all, so who knows in how many other games TPU tested the average looked okay but the 1% lows were worse.
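A quick sketch of why an average can hide this. It assumes "1% low" means the average frame rate over the slowest 1% of frames (reviewers differ on the exact definition), and the frame times are made-up numbers, not benchmark data.

```python
def fps_summary(frame_times_ms):
    """Return (average fps, 1% low fps) from a list of frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000 * len(frame_times_ms) / sum(frame_times_ms)
    # Slowest 1% of frames (at least one frame), longest frame times first.
    worst = sorted(frame_times_ms, reverse=True)
    slow = worst[:max(1, len(worst) // 100)]
    low_fps = 1000 * len(slow) / sum(slow)
    return avg_fps, low_fps


# Made-up runs: nearly identical averages, very different stutter.
smooth = [16.7] * 1000
stutter = [15.9] * 990 + [100.0] * 10

for label, run in (("smooth", smooth), ("stutter", stutter)):
    avg, low = fps_summary(run)
    print(f"{label}: avg {avg:.1f} fps, 1% low {low:.1f} fps")
```

Both runs average roughly 60 fps, but the second one drops to about 10 fps in its worst 1% of frames, which is the kind of gap an averages-only chart would never show.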

I made no comment about console settings. I simply said that 6GB started to struggle, and we can see that it clearly did in some titles, either due to lack of bandwidth or due to VRAM constraints, which go hand in hand most of the time when a chip is designed for a given performance target.

Yes, a 3070 with 16GB of VRAM would have far more VRAM than it can truly take advantage of, but it would not be losing to the 3060 12GB in some games.

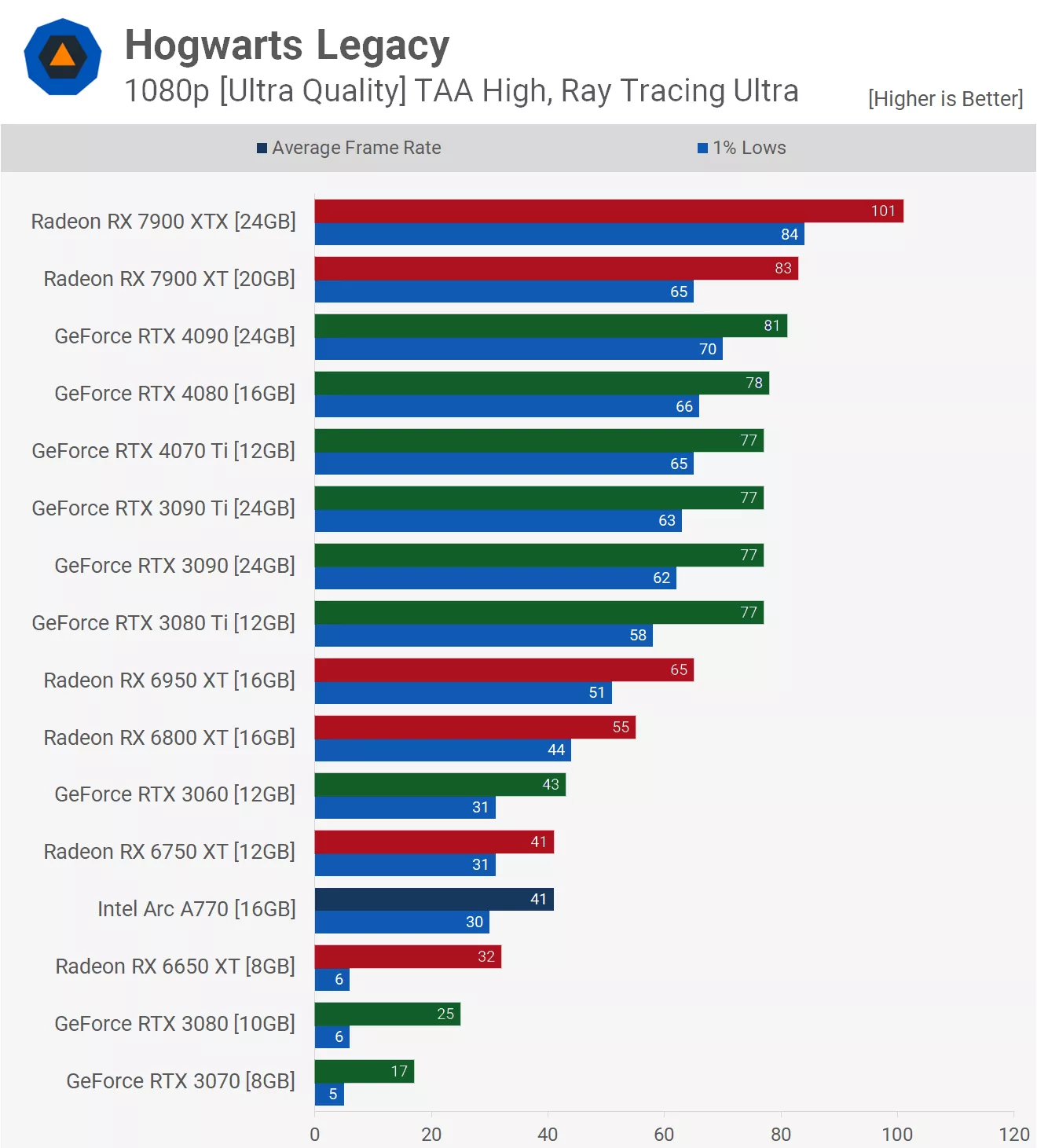

This is something that should not happen. The 3060 is providing a solid 30 fps 1080p ultra + RT gaming experience here, while the 3080 and 3070 are getting absolutely crushed. That is a clear example of the 3070 and 3080 being VRAM limited. Even the 12GB+ RDNA2 cards are offering a far better gaming experience here. Anybody who purchased the 3070 over the 6800, or the 3080 over the 6800 XT, for the RT capabilities is not going to be happy, yet those who went 3060 12GB are absolutely fine if they are happy with 30 FPS.

Or here in RE4, where the 3070 can't even play the game but the 3060 can handle 45+ fps.

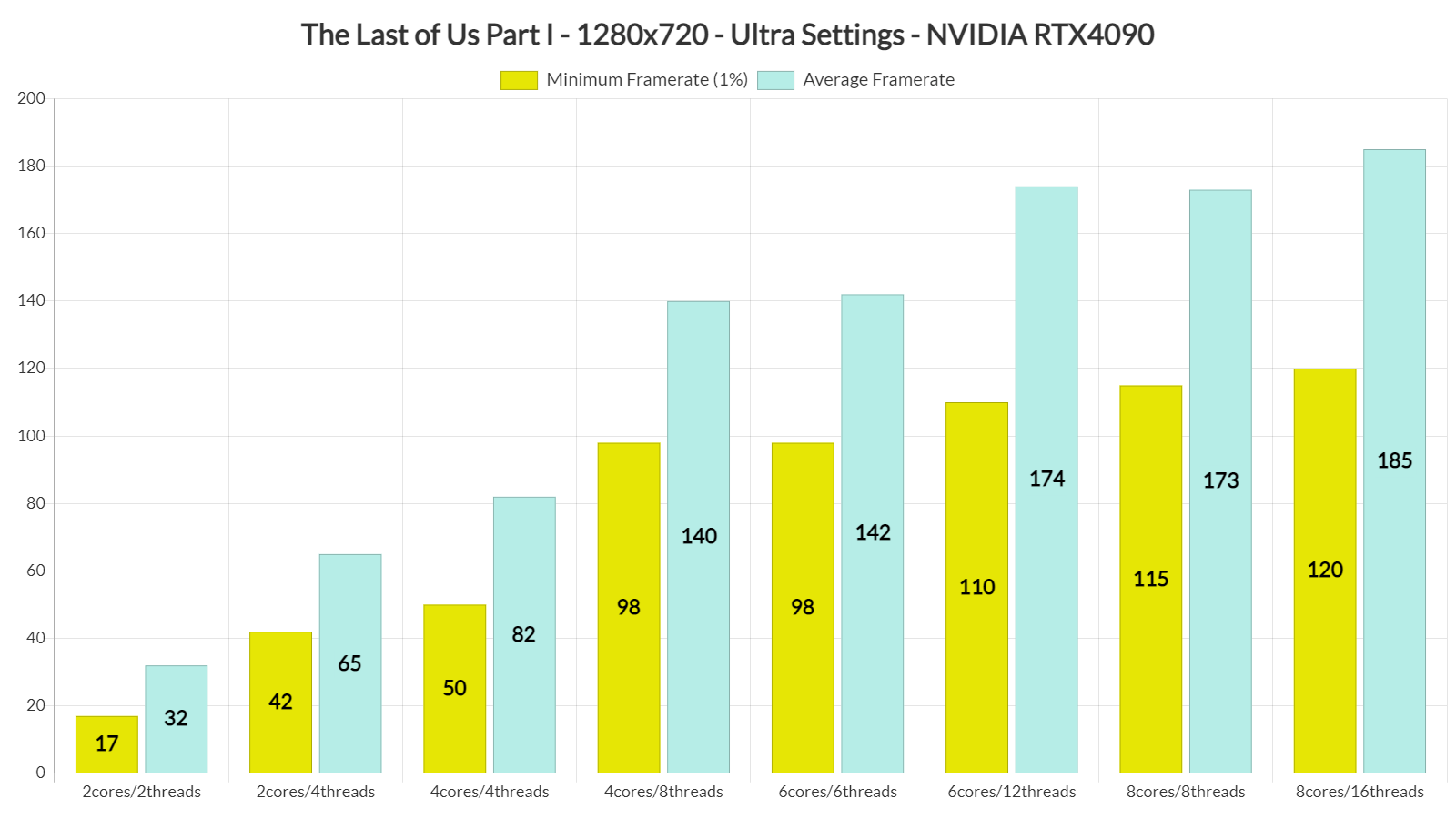

You can try to paint TLOU as an outlier, but there are more titles where this is becoming an issue for the 3060 Ti, 3070, 3070 Ti and, on occasion, the 3080 as well.

NV went cheap on VRAM for the 3070 and 3080. Having 8GB on the 1070 was great and having 8GB on the 2070 was perfectly fine, but in 2020 the 3070 also having 8GB was bound to cause problems long term. For those who upgrade every few years it is not a problem, but for those who like to keep their card for 5 or so years it is something that will come back to bite you. Also, with 4070 Ti pricing, many people who might have upgraded to a 40 series part have decided to hold on, and even the 4070 Ti at a damn $800 is showing signs of being limited at 4K, which for that much money I just don't see as acceptable.

www.dsogaming.com
